Export FBX doubles vertices for Bevel Harden Normals #104526

Reference: blender/blender-addons#104526

System Information

Operating system: Windows 10

Blender Version

Broken: 3.5.0, Add-on FBX 4.37.5

Broken: 3.6.0, Add-on FBX 5.2.0

Short description of error

Export FBX doubles vertices for Bevel Harden Normals

Exact steps for others to reproduce the error

Open these two FBX files in another program: Unreal Engine, Marmoset Toolbag, or Microsoft 3D Viewer.

Look at the number of vertices.

Unnecessary vertices are undesirable for game optimization.

"Harden Normals" creates Sharps, and perhaps "Export FBX" saves two identical normals for each vertex.

I can't reproduce the problem with the steps described.

Moreover, 24 vertices cannot represent the described geometry.

In this case, 24 would represent the corners of a Cube without the bevel (6 * 4).

48 are the corners of the described and triangulated geometry.

The extra vertices are probably needed to carry the custom normals.

Here is what it looks like.

Files "Cube 1 (3.5.0) - 48 vertices.fbx" and "Cube 2 (3.5.0) - 24 vertices.fbx" from the attached zip file.

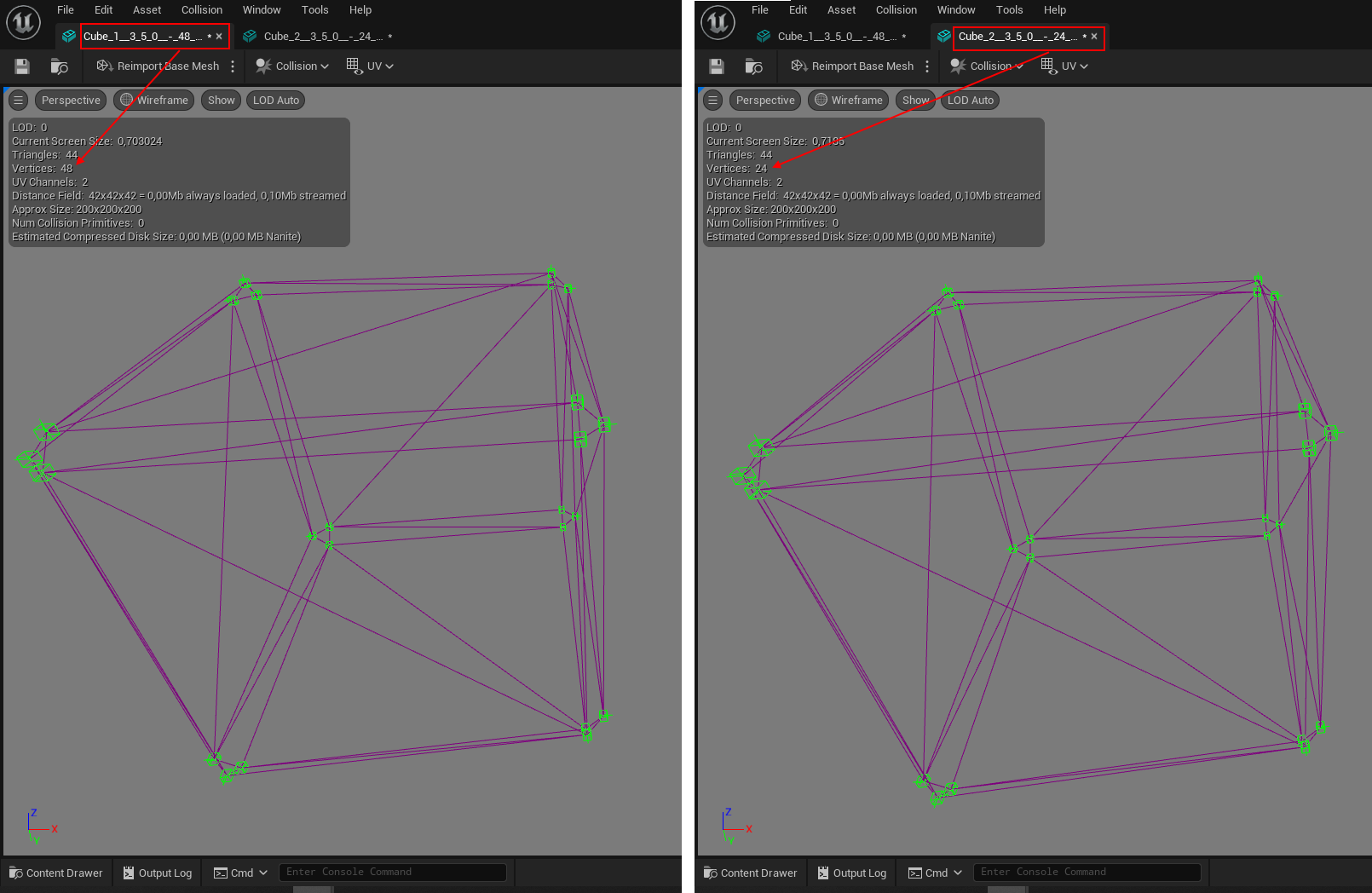

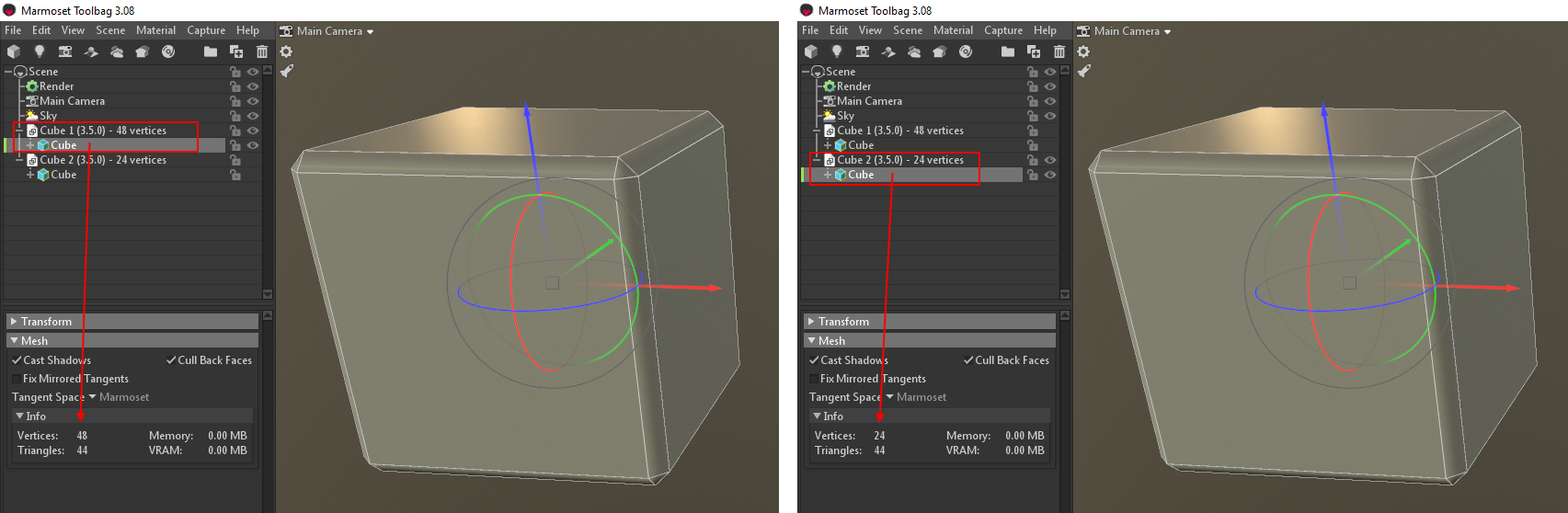

Unreal Engine:

Marmoset Toolbag:

Can you provide the `Cube 1 (3.5.0) - 48 vertices.fbx` file?

Here are all the FBX files: Test Files - Export Cube - Harden Normals.zip

I converted both files to json for parsing and I could see they both report 27 vertices.

They are even the same size.

I haven't found anything that might indicate a problem with Blender.

Attached are the files as JSON in case you want to analyze them yourself.

Perhaps your converter is not working correctly, or doing some kind of optimization.

I used Filestar converter: https://filestar.com/skills/fbx/convert-fbx-to-json

Cube 1 (3.5.0) - 48 vertices - filestar.json

length `meshes.vertices` = 144

length `meshes.normals` = 144

144 / 3 = 48

Cube 2 (3.5.0) - 24 vertices - filestar.json

length `meshes.vertices` = 72

length `meshes.normals` = 72

72 / 3 = 24
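The arithmetic above can be sketched in a few lines of Python. This is only an illustration: `count_vertices` is a hypothetical helper, and it assumes the array is a flat list of floats holding an `x`, `y` and `z` value per vertex, as the division by 3 implies.

```python
def count_vertices(flat_coords):
    """Return the number of xyz triples in a flat coordinate list."""
    if len(flat_coords) % 3 != 0:
        raise ValueError("expected a multiple of 3 values (x, y, z per vertex)")
    return len(flat_coords) // 3


# Placeholder arrays with the lengths reported for the two converted files.
cube1_count = count_vertices([0.0] * 144)
cube2_count = count_vertices([0.0] * 72)
print(cube1_count, cube2_count)
```

Note that this counts whatever the converter wrote out, which is not necessarily the number of vertices stored in the .fbx itself.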

The `fbx2json.py` converter in the `blender\scripts\addons\io_scene_fbx` folder for the FBX addon is pretty much a 1:1 conversion, but that filestar converter seems to be converting to some different 3D model format that happens to be represented as .json.

I get 24 vertices for all four of the .fbx files. I ran them through `fbx2json.py`, counted the number of elements in the "Vertices" arrays and divided by 3, since there is an `x`, `y` and `z` component for each.

The behaviour you are seeing of the number of vertices increasing is consistent with software that can't store normals per-loop (per-polygon-corner) and so must store them per-vertex instead.

What appears to be happening is that Unreal/Marmoset is able to see that in some cases, multiple loops that use the same vertex also have an identical (or close enough to identical) normal, so it doesn't need to duplicate the vertex for these loops.
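As a rough sketch of that kind of splitting (not the actual Unreal or Marmoset code, which is not shown in this thread), an importer that can hold only one normal per vertex needs one output vertex for every distinct (vertex index, normal) pair among the loops. The function name and the tiny two-triangle mesh below are made up for illustration, and normals are compared exactly here, whereas a real importer would presumably use some tolerance.

```python
def count_split_vertices(loop_vertex_indices, loop_normals):
    """Count the vertices needed if each vertex may carry only one normal."""
    unique_pairs = set()
    for vertex_index, normal in zip(loop_vertex_indices, loop_normals):
        unique_pairs.add((vertex_index, tuple(normal)))
    return len(unique_pairs)


# Two triangles sharing the edge between vertices 0 and 2 (4 vertices, 6 loops).
loops = [0, 1, 2, 0, 2, 3]

# Same normal on every loop: the shared vertices can be reused.
smooth = [(0.0, 0.0, 1.0)] * 6
smooth_count = count_split_vertices(loops, smooth)

# A different normal per triangle: vertices 0 and 2 must be duplicated.
split = [(0.0, 0.0, 1.0)] * 3 + [(0.0, 1.0, 0.0)] * 3
split_count = count_split_vertices(loops, split)

print(smooth_count, split_count)
```

The mesh has 4 vertices in both cases; only the count after splitting differs.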

In your Cube 1 export, the per-loop normals for the large square faces on each side appear to have no floating-point inaccuracy; they're all made up of nice and clean looking `0.0`, `1.0` and `-1.0` values. The rest of the faces appear to have small inaccuracies, so end up with values like `2.142786979675293e-05` when they should be `0.0`, or `-0.9999999403953552` when they should be `-1.0`, etc.

When you've re-imported Cube 1 and exported it as Cube 2, you've introduced additional floating-point inaccuracies that have affected most of the clean looking normals.

My suspicion would be that, when Unreal/Marmoset compares the normals to determine if they're the same, it sees `0.0` and `2.142786979675293e-05` and considers them different, but when it sees `2.79843807220459e-05` and `1.4007091522216797e-05` it considers them close enough to be the same. `0.0` can cause problems with comparing similarity of floating point values, particularly in cases where a relative tolerance is used.

The 1st loop normal of Cube 1 is `0.0, -1.0, 0.0`

The 22nd loop normal of Cube 1 is `2.142786979675293e-05, -0.9999999403953552, -4.500150680541992e-06`

I suspect that Unreal/Marmoset considers these to be different and thus creates an extra vertex.

The 1st loop normal of Cube 2 is `2.79843807220459e-05, -0.9999999403953552, 1.3947486877441406e-05`

The 22nd loop normal of Cube 2 is `1.4007091522216797e-05, -1.0, -2.8073787689208984e-05`

I suspect that Unreal/Marmoset considers these to be the same so discards one of the normals instead of creating a new vertex.
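The general pitfall with `0.0` and relative tolerances can be shown with Python's `math.isclose`, using the two Cube 1 normals quoted above. This is only a sketch of the floating-point behaviour: how Unreal or Marmoset actually compare normals is not known from this thread, and the tolerance values and the `normals_match` helper are assumptions chosen for illustration.

```python
import math


def normals_match(a, b, rel_tol=1e-4, abs_tol=0.0):
    """Compare two normals component by component."""
    return all(
        math.isclose(x, y, rel_tol=rel_tol, abs_tol=abs_tol)
        for x, y in zip(a, b)
    )


cube1_loop1 = (0.0, -1.0, 0.0)
cube1_loop22 = (2.142786979675293e-05, -0.9999999403953552, -4.500150680541992e-06)

# Relative tolerance only: the allowed difference scales with the larger
# magnitude, so nothing non-zero is ever "close" to exactly 0.0.
relative_only = normals_match(cube1_loop1, cube1_loop22)

# Adding an absolute tolerance lets tiny values compare equal to 0.0.
with_absolute = normals_match(cube1_loop1, cube1_loop22, abs_tol=1e-4)

print(relative_only, with_absolute)
```

With a purely relative test the two normals are treated as different because of the `0.0` components, even though the vectors point in almost exactly the same direction.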

There are a total of 24 loop normals for all of the large square faces (one for each corner of each face), all of which contain at least one component that is `0.0` in Cube 1. Unreal/Marmoset produces 24 extra vertices for Cube 1, which would match up with what I'm suspecting.

Here is a simpler example:

Files: Test Plane - Harden Normals.zip

Something is wrong with the "Bevel - Harden Normals":

Marmoset/Unreal are creating additional vertices upon importing the .fbx that do not exist in the .fbx itself. This is the expected behaviour for a lot of software when they see split normals.

You can import the .fbx straight back into Blender to see that the number of vertices is not increased from when the mesh was exported. Unity also imports all of the original `Cube 1` and `Cube 2` .fbx with 24 vertices as expected.

I've attached the original `Test Export Cube - Harden Normals.blend` but where the cube has been rotated slightly, along with the exported .fbx. Try importing that rotated cube into Unreal/Marmoset; I suspect you will only get 24 vertices because none of the xyz components of the normals will be exactly `0.0`. If so, I suspect this is a bug with Unreal and Marmoset Toolbag being unable to properly account for `0.0` when comparing similarity of normals (since Unity has no problems).

Possibly, there could also be an issue in the Bevel Modifier that is incurring additional floating-point inaccuracy for some faces, or possibly in the `Mesh.calc_normals_split()` function that is used to calculate the split (per-loop) normals that are then exported. But I wouldn't say there is anything that the FBX exporter can do about this.

Since the original problem is actually the way other software handles the normals in corners, I am closing the report.

Problems with float precision in modifiers are not considered bugs; they are more of an improvement request.

It is understandable that `Mark Sharp` is disregarded by FBX when normals are defined explicitly. The Sharp mark is used to split normals or by the modifiers.

If you think you found a bug, please submit a new report and carefully follow the instructions. Be sure to provide a .blend file with exact steps to reproduce the problem.