How to handle difference in sinf/cosf for M1 #107829

Labels

No Label

Interest

Alembic

Interest

Animation & Rigging

Interest

Asset Browser

Interest

Asset Browser Project Overview

Interest

Audio

Interest

Automated Testing

Interest

Blender Asset Bundle

Interest

BlendFile

Interest

Collada

Interest

Compatibility

Interest

Compositing

Interest

Core

Interest

Cycles

Interest

Dependency Graph

Interest

Development Management

Interest

EEVEE

Interest

EEVEE & Viewport

Interest

Freestyle

Interest

Geometry Nodes

Interest

Grease Pencil

Interest

ID Management

Interest

Images & Movies

Interest

Import Export

Interest

Line Art

Interest

Masking

Interest

Metal

Interest

Modeling

Interest

Modifiers

Interest

Motion Tracking

Interest

Nodes & Physics

Interest

OpenGL

Interest

Overlay

Interest

Overrides

Interest

Performance

Interest

Physics

Interest

Pipeline, Assets & IO

Interest

Platforms, Builds & Tests

Interest

Python API

Interest

Render & Cycles

Interest

Render Pipeline

Interest

Sculpt, Paint & Texture

Interest

Text Editor

Interest

Translations

Interest

Triaging

Interest

Undo

Interest

USD

Interest

User Interface

Interest

UV Editing

Interest

VFX & Video

Interest

Video Sequencer

Interest

Virtual Reality

Interest

Vulkan

Interest

Wayland

Interest

Workbench

Interest: X11

Legacy

Blender 2.8 Project

Legacy

Milestone 1: Basic, Local Asset Browser

Legacy

OpenGL Error

Meta

Good First Issue

Meta

Papercut

Meta

Retrospective

Meta

Security

Module

Animation & Rigging

Module

Core

Module

Development Management

Module

EEVEE & Viewport

Module

Grease Pencil

Module

Modeling

Module

Nodes & Physics

Module

Pipeline, Assets & IO

Module

Platforms, Builds & Tests

Module

Python API

Module

Render & Cycles

Module

Sculpt, Paint & Texture

Module

Triaging

Module

User Interface

Module

VFX & Video

Platform

FreeBSD

Platform

Linux

Platform

macOS

Platform

Windows

Priority

High

Priority

Low

Priority

Normal

Priority

Unbreak Now!

Status

Archived

Status

Confirmed

Status

Duplicate

Status

Needs Info from Developers

Status

Needs Information from User

Status

Needs Triage

Status

Resolved

Type

Bug

Type

Design

Type

Known Issue

Type

Patch

Type

Report

Type

To Do

No Milestone

No project

No Assignees

2 Participants

Notifications

Due Date

No due date set.

Dependencies

No dependencies set.

Reference: blender/blender#107829

Loading…

Reference in New Issue

No description provided.

Delete Branch "%!s(<nil>)"

Deleting a branch is permanent. Although the deleted branch may continue to exist for a short time before it actually gets removed, it CANNOT be undone in most cases. Continue?

As discovered in #104513 , it appears that the single-precision floating point trig functions are producing slightly different results on apple silicon CPUs (M1, M2) compared with x86_64 CPUs, for certain inputs.

e.g.

This is a design task to help understand this problem, track solutions and mitigations.

See for example,

0eba9e41bfOne thing we should probably do is see if the problem exists on

double precisionas well.For example, take all 4 billion single precision floating point numbers, convert them to double, call cos(x) and sin(x) on them, write to a file.

Compare across CPU architectures.

(We can use python for this, the problem is the CPU, not in the c++ math library)

... assuming they are identical, we can then undefine sinf / cosf, and replace with

... obviously there's a performance hit here, but it may well be small, as long as we store floats as single precision in memory, only promoting the type to call sin/cos

Another thing we should do is ... google search... and see if other projects are seeing this problem

Also, check the other trig function.

atan2f(), asinf(), coshf()etc are probably vulnerable.sqrtf, fmodf, log10fetc are probably safe. (These functions are not transcendental, so the output is completely determined by the rounding mode.)I do not really see why this should be an issue... A float number can only be trusted up to 6 (or 7 in best case) digits, and both results are equals up to their 7th digits.

If an algorithm is sensitive to that level (giving such huge different results caused by such small differences of values), then either this should be documented and accepted as a known limitation of that algorithm, or the algorithm should be revised and made more stable.

Fairly certain that using doubles would not help here, you'd end up facing the same kind of issues, just more rarely probably...

Yeah, it's an odd situation, where the algorithm is choosing the "longest" side of a square.

In theory, both sides should be equal, no problem.

In floating point math we're only comparing the round-off error, and the two different CPUs disagree about what the round-off error is for these particular inputs.

So on some CPUs, the rectangle is "tall" and stays where it is. On others, the rectangle is "wide" and gets rotated 90degrees to stand up.

Again, normally, this wouldn't be a problem as no-one would ever notice, you run the algorithm, compute the rectangle, save the file to disk and move on.

Except that for geometry nodes, the computation is run a second time when the file is loaded. That is, the same .blend file has dramatically different appearance depending on the CPU it's currently being loaded, and is independent of the CPU of the file when it was saved.

As just one example, if you author a .blend file on a Mac, and try and use an intel render farm, some of your textures might be rotated 90 degrees!

Well.. surprisingly, we can actually write a computer program to figure this out and know for sure:

That

yhas 52 bits in the significand, and thexhas 22 bits. So we're2^30less likely to hit the bug. Given there's only2^32single precision floats and not all of them have the problem, it may well be the case that reducing the problem incidence by a factor of2^30does indeed eliminate all of the problems rather than just some of them.We could (i.e "should") write a program to just go and check all 4 billion of them and see what happens...

Can't you simply use a relative epsilon comparison instead of exact equality here?

I think the underlying problem is closer to argmax, so it's something like :

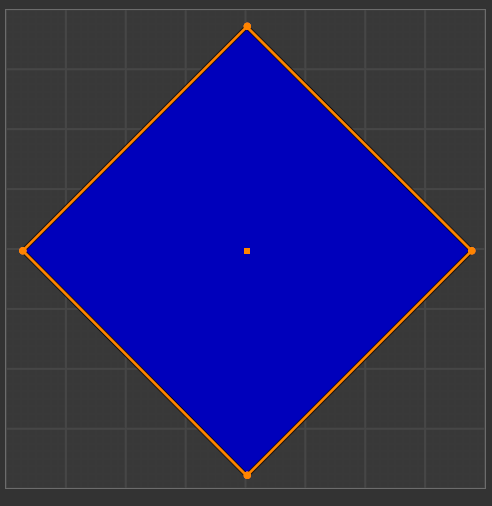

For a more visual example:

To make a "Tall" rectangle, should the diamond turn +45 degrees or -45 degrees?

Just biasing the outputs one way or the other doesn't really solve the underlying problem, which is that the decision is based purely on the round-off error, and the round-off error is different on different CPUs.

I don't understand. I'd rather implement own version of argmax for this, which does relative epsilon comparison, and if several items are 'equal enough', just always e.g. return the first one.

That way you get stable result regardless of 'implementation noise'. In that case, it does not matter to get the 'absolute exact' answer to your question, you just need a way to always get the same answer on all platforms.

(To be specific, use regular argmax, and compare found max value too all previous ones, and return first item within epsilon range of found max value)