Compositor: canvas and transforms #108944

Reference: blender/blender#108944

Motivation

The tiled, full-frame and realtime compositors currently behave differently regarding transforms. See A Comparative Look At Compositor Systems In Blender created by @OmarEmaraDev.

`COM_domain.hh` in the realtime compositor also has good information on the current workings of that system.

We need consistent behavior across CPU and GPU compositing, and we need to decide on the right behavior. Ideally we would like to support all of the following:

Proposal

Data and Display Windows

Every image used in compositing is placed on an infinite canvas, and has the following properties:

The data window may be bigger (for overscan) or smaller (for border render) than the display window. See the OpenEXR docs for illustrations.

By making this distinction, transform nodes can translate, rotate and scale images without clipping data that falls outside the display window. Such transforms would change the data window but not the display window.
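A minimal sketch of that distinction, assuming integer pixel rectangles on the canvas. `Window`, `CanvasImage` and `translate` are hypothetical names for illustration, not Blender API:

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Window:
    """Axis-aligned rectangle on the canvas: [x_min, x_max) x [y_min, y_max)."""
    x_min: int
    y_min: int
    x_max: int
    y_max: int


@dataclass(frozen=True)
class CanvasImage:
    """Hypothetical image record holding only the two windows, no pixels."""
    display: Window
    data: Window


def translate(image: CanvasImage, dx: int, dy: int) -> CanvasImage:
    """Move the data window; the display window stays where it is."""
    d = image.data
    moved = Window(d.x_min + dx, d.y_min + dy, d.x_max + dx, d.y_max + dy)
    return replace(image, data=moved)


frame = Window(0, 0, 1920, 1080)
shifted = translate(CanvasImage(display=frame, data=frame), 200, 0)
print(shifted.display)  # unchanged camera frame
print(shifted.data)     # now extends 200 pixels past the right edge of the frame
```

The pixels that move outside the display window are still described by the data window, so a later translate back would not have lost them.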

Combining Images

All images that go into the compositor are placed on the same infinite canvas. Nodes like mix and alpha over by default output a data window that is the union of the two bounding boxes. However, there could also be an option to use the intersection, or to use the data window from either image.

The display window would be taken from the primary image input.
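The combination rule described above can be sketched as follows. The rectangle layout, the `combine` function and its `mode` names are assumptions made for this illustration only:

```python
from typing import Optional, Tuple

# (x_min, y_min, x_max, y_max) in canvas pixels; max edges are exclusive.
Rect = Tuple[int, int, int, int]


def union(a: Rect, b: Rect) -> Rect:
    """Smallest rectangle containing both inputs."""
    return (min(a[0], b[0]), min(a[1], b[1]), max(a[2], b[2]), max(a[3], b[3]))


def intersection(a: Rect, b: Rect) -> Optional[Rect]:
    """Overlap of both inputs, or None when they do not overlap."""
    r = (max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3]))
    return r if r[0] < r[2] and r[1] < r[3] else None


def combine(primary_display: Rect, primary_data: Rect,
            secondary_data: Rect, mode: str = "union"):
    """Return (display, data) windows for a two-input node such as mix."""
    if mode == "union":
        data = union(primary_data, secondary_data)
    elif mode == "intersection":
        data = intersection(primary_data, secondary_data)
    elif mode == "primary":
        data = primary_data
    elif mode == "secondary":
        data = secondary_data
    else:
        raise ValueError(f"unknown mode: {mode}")
    # The display window always comes from the primary input.
    return primary_display, data


frame = (0, 0, 1920, 1080)
element = (1500, -100, 2500, 600)
print(combine(frame, frame, element))                  # union of the data windows
print(combine(frame, frame, element, "intersection"))  # overlap only
```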

Realizing Transforms

The realtime compositor has a mechanism where affine transforms and wrapping are not immediately applied after every node. Rather they are stored as part of the image, and realized when an operation is performed that needs it.

This mechanism can remain, but clipping can be avoided by writing to a data window that may be partially or fully outside the display window.

One thing that we found confusing in its current behavior is that the transform is not realized before filter nodes like blur. That means that after rotating an image 90° and then applying a horizontal blur, the image is blurred vertically in global space. We suggest realizing such transforms before filter nodes. If users want the existing behavior, they can place the blur node before the rotation.
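The 90° case can be checked with a small calculation. This is only an illustration of the geometry, assuming a counter-clockwise rotation; `global_direction` is a hypothetical helper, not part of any compositor:

```python
import math


def global_direction(local_dir, angle_degrees):
    """Rotate a direction given in the image's local space into global space."""
    a = math.radians(angle_degrees)
    x, y = local_dir
    return (x * math.cos(a) - y * math.sin(a),
            x * math.sin(a) + y * math.cos(a))


# A horizontal blur runs along the local x axis of the image.
blur_axis = (1.0, 0.0)

# With the rotation deferred, the blur is applied in local space, so in
# global space it ends up running along the y axis.
gx, gy = global_direction(blur_axis, 90.0)
print(round(gx, 6), round(gy, 6))
```

If the rotation is realized before the blur, the blur axis is simply the global x axis and no such conversion applies.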

Performance and User Control

While the data window avoids losing data, it can also lead to high memory usage as users may (accidentally) make node setups that occupy many pixels outside the display window.

There are no ideal solutions for this, but we can think of ways to mitigate this:

Resolution Independence

Nodes should generally not use pixel coordinates, but rather coordinates relative to the image size or output size. The reason being that while changing the render resolution percentage could scale the size in pixel coordinates, this would not work when the user changes the render resolution.

Compositing nodes should be evaluated at a known resolution factor, either taken from the render resolution percentage, or computed automatically from the 3D viewport size. Images coming into the compositor should then be scaled and translated appropriately to match.
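As a rough sketch of what resolution independence means for a node parameter, the following converts a size stored relative to the image width into pixels at evaluation time. The function name and the choice of the width as reference are assumptions for illustration:

```python
import math


def to_pixels(relative_size: float, reference_pixels: int,
              resolution_factor: float) -> float:
    """Convert a size stored relative to the image into evaluation pixels."""
    return relative_size * reference_pixels * resolution_factor


# A blur radius stored as 1% of the image width.
relative_radius = 0.01

full = to_pixels(relative_radius, 1920, 1.0)     # 1920 wide at 100%
preview = to_pixels(relative_radius, 1920, 0.5)  # same scene at 50%
other = to_pixels(relative_radius, 3840, 1.0)    # render resolution doubled

# In every case the radius covers the same fraction of the evaluated image.
for radius, width in ((full, 1920), (preview, 960), (other, 3840)):
    print(radius, radius / width)
```

A radius stored in pixels would only stay visually consistent in the second case, where the percentage can be used to rescale it, and not in the third.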

Viewing and Editing Windows

When using the viewer nodes, there should be a visual indication of what the data and display windows are in the resulting image.

There should also be nodes to modify the display window, and to clip the data window to the display window or non-empty pixels.

Open Questions

CC @Sergey @OmarEmaraDev @Jeroen-Bakker @zazizizou.

Sergey and I reviewed Omar's document and discussed the design. This is my view on things but I'm not so confident on the right design here yet.

I think the way the realtime compositor delays realizing transforms for some types of nodes is unwanted, and we could add user control over this. But then if you have to do that you can be back to the situation where things get clipped when you don't want to. Data windows can provide a solution to that.

A few thoughts after the meeting we had about this today.

Realizing Transforms before Filters

An alternative to always realizing the transform before filter operations like blur would be to have an explicit realize transform node. The effective difference with this proposal would be in the defaults. Either filter operations work in local space by default and making them work in global space requires adding a realize transform node, or filter operations always work in global space and users may have to reorder nodes to get the behavior they want.

Besides a realize transform node, the transform nodes could also have an option to be realized immediately or not.

Rotations could be always realized, but translations and scale could be deferred. Operations like blur could scale the filter size for example, and avoid resolution loss in some cases where immediately realizing would be a problem.

Practical Use Cases

We need some artist feedback and need to evaluate things in the context of practical use cases. We can try to be clever with regions of interest like the tile-based compositor or with deferring the realization of transforms, but the complexity these things add is not necessarily helpful. Avoiding unexpectedly high memory usage and resolution loss is a concern, but not the only one.

A few different example scenarios:

Different Types of Transforms

In some cases you want a transform to affect both the display and data window, and in others you want only the data window to be affected.

If the display window of an image is set to the output camera frame, you most likely want to only change the data window and keep the camera frame intact. For example, if you recorded camera footage with overscan, you can add some camera shake.

On the other hand, if you load in an image of e.g. smoke to composite over camera footage, the display window could just be the dimensions of the image itself, in which case you do want to change both the display and data window. However, if the element already includes a display window matching the camera output, this would not be so helpful.

Transform nodes could have an option for this.

An alternative could be that images can either have their own display window, or follow the output camera frame. In the former case the display window would be affected by transforms, in the latter case not. Image input nodes would then need some setting to control whether they should get the display window from the scene or keep their own. This may be a difficult concept to explain to users.

The data/display windows are a common concept many canvas compositors are based on. They are usually called region-of-definition (ROD) and region-of-interest (ROI), e.g. in Nuke's documentation or in OpenFX. The latter also covers an algorithm to determine minimum ROD/ROI sizes so that clipping by default can be avoided (which would be preferable). In Nuke the ROD size can also be set by the user, but that is optional.

This paper has some more details.

Yes, in the Nuke user interface it is called Format and Bounding Box, which is what I was alluding to earlier.

The current tiled compositor is actually based on that paper you linked. But the architecture is inefficient on today's CPUs and GPUs, which is the main reason we are switching. I haven't checked to what extent this inefficiency is due to the paper or the Blender implementation. But out-of-core processing and realtime are not compatible with each other, and nowadays there is enough memory to have multiple full resolution images in memory.

We created two test builds in order to have some practical testing grounds for some of the proposals here.

Realized Rotation Build

This build is built from #111213 and can be downloaded from here.

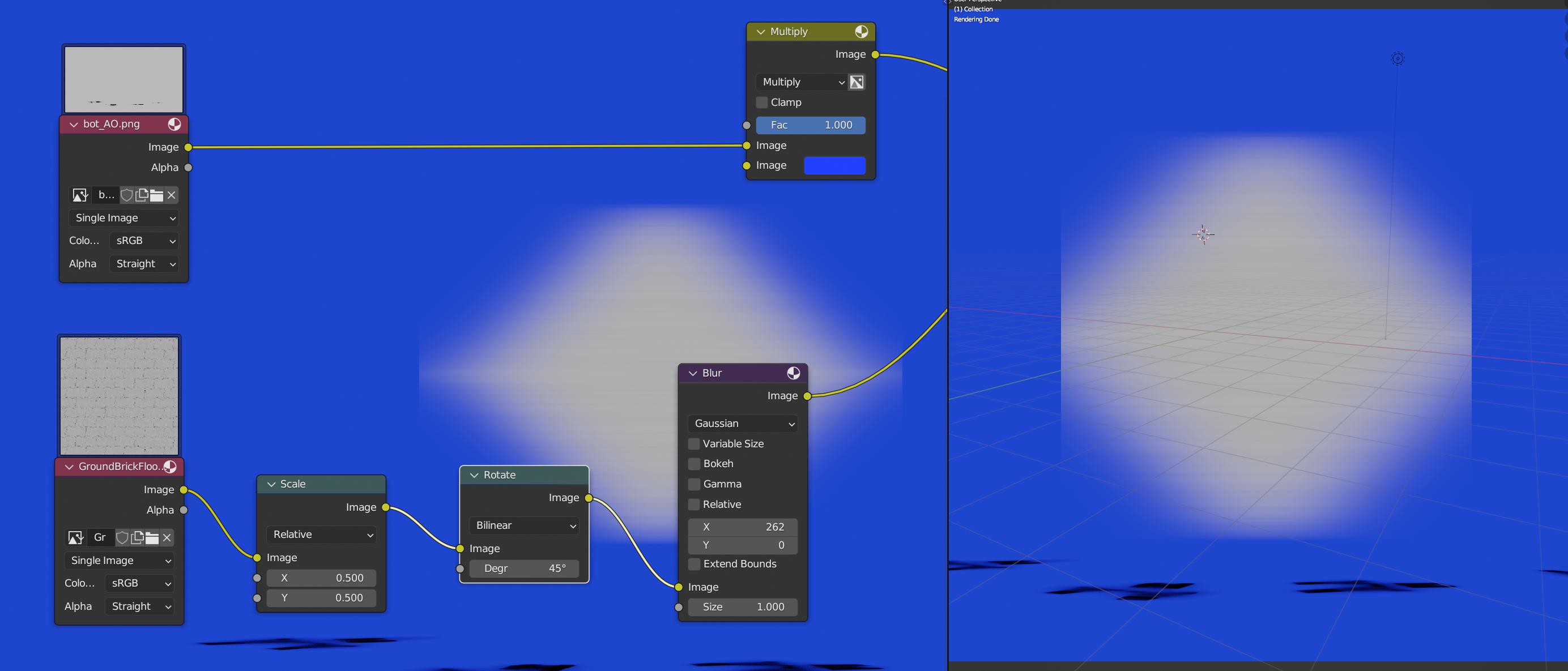

In this first build, we now always realize the rotation of the primary input before executing operations that are not rotation invariant, like most filter operations. This realization takes the form of creating a new image that completely contains the rotated image with transparency everywhere else. This is illustrated in the following example:

Those operations will now operate on their inputs in the rotational global space. For instance, a 45 degree rotated image that is blurred along its x axis will now be blurred along its diagonal, instead of its local x axis. This is illustrated in the following example:

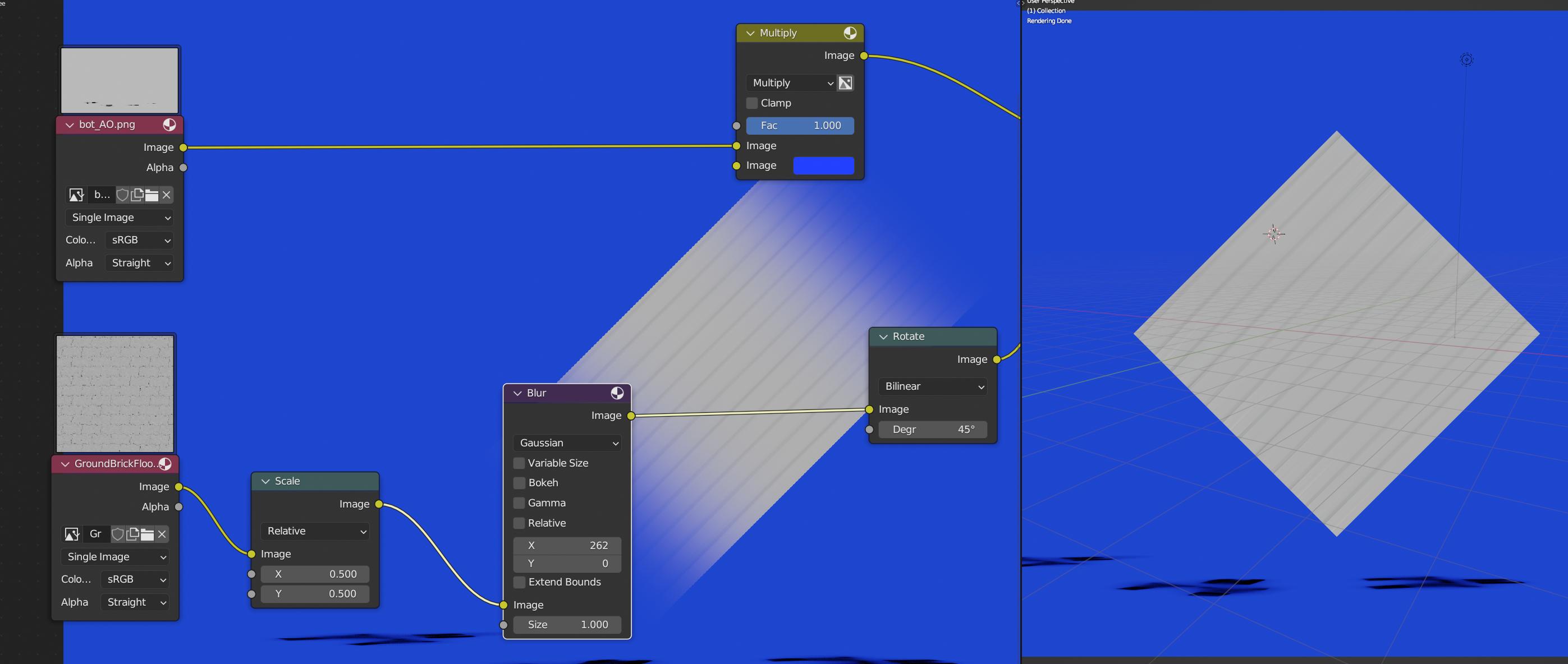

Realized Rotation And Scale Build

This build is built from #111216 and can be downloaded from here.

In this second build, in addition to rotation, we now also always realize the scale of the primary input before executing operations that are not scale invariant, like most filter operations. This realization takes the form of actually increasing or decreasing the resolution of the images based on their scale factor. So for instance, a 512x512 image that is scaled down by a factor of 0.2 will be of resolution 103x103. This is illustrated in the following example:
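The resolution in that example can be reproduced with a one-line calculation. Rounding up is an assumption here, chosen because it matches the 103x103 figure; the build may round differently in other cases:

```python
import math


def realized_size(width: int, height: int, scale: float):
    """Pixel size after realizing a uniform scale, rounding up (assumed)."""
    return (math.ceil(width * scale), math.ceil(height * scale))


print(realized_size(512, 512, 0.2))  # (103, 103)
```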

Automatic realization of translation is probably not something that should be done, so we haven't considered it in our test builds. But if there are concerns regarding translations, please bring them up as well.

In our previous discussions, there was a consensus that automatic realization of rotation was necessary, so this change will likely make it to the main build soon enough. If there are objections or concerns, please also bring them up.

Automatic realization of scale is the main point of discussion here due to the concerns and alternatives outlined above, so we would like to hear feedback on this with practical examples in its favor or against it.

Here are some notes and concerns on the aforementioned builds.

Deferred Automatic Realization Of Rotation Is Not Side Effect Free

Deferring realization of rotation but at the same time automatically realizing it on non rotation invariant operations would result in the following behavior. Pixel-wise operations that care about transparency and follow the non rotation invariant operations would produce different results than if the realization had not happened. This is because, as mentioned, realizing rotation produces an image with a bigger bounding box that has transparency at the boundary. This example should clarify this point:

Muting the filter nodes removes the white background, which is probably unexpected to the user. This is because the realization no longer happens, so the transparent areas no longer exist for the Alpha Over node to act on.

As far as I can see, the only solution would be to immediately realize the rotation at the transform nodes and not defer them. Though I have not studied the consequences of this yet.

The Size Of Rotated Images Changes As Rotation Changes

The size of images oscillates between `1x` and `sqrt(2)x` as the user rotates the image. To see why this might be a problem, consider the example where a user uses a rotated image as Bokeh weights in the Bokeh Blur node. As the user changes the rotation amount, the size of the blur will apparently oscillate, which might not be clear to the user. This is illustrated in the following example:

Notice how the size of the Bokeh blur oscillates as the image rotates. Please ignore the fact that the Bokeh Image node has a rotation option, it is just a demonstration. :)
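The size change follows from the bounding box of a rotated rectangle. A small sketch of that calculation, with `rotated_bounds` as a hypothetical helper name:

```python
import math


def rotated_bounds(width: float, height: float, angle_degrees: float):
    """Axis-aligned bounding box size of a rectangle rotated about its center."""
    a = math.radians(angle_degrees)
    c, s = abs(math.cos(a)), abs(math.sin(a))
    return (width * c + height * s, width * s + height * c)


# For a square image the realized size grows from 1x at 0 degrees to
# sqrt(2)x at 45 degrees and shrinks back to 1x at 90 degrees.
for angle in (0, 15, 30, 45, 60, 75, 90):
    w, h = rotated_bounds(100.0, 100.0, angle)
    print(angle, round(w / 100.0, 3))
```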

To me the behavior of #111216 seems to be the most intuitive on a user level.

As for the issue of "disappearing" white background, intuitively I'd always expect the white background. So, perhaps any socket which is not from a transform node should do realization?

I assume you mean to realize the rotation automatically for all nodes except Transform nodes?

Wouldn't this practically be the same as realizing immediately at the transform node that introduced the rotation? Since it wouldn't really make sense for the user to have two consecutive rotate nodes.

If we can combine all consecutive transform nodes and realize the transform only once, this sounds like a good idea from the performance perspective. So if we have a chain like `Image -> Scale -> Rotate -> Blur -> Output`, ideally we'll only perform the actual image transform once, which will include scale and rotation in its transform.

It makes sense to me to realize before any non-transform node, to avoid those side effects. I still think that from the user point of view evaluation should be from left to right without side effects, and if users want something else they can reorder the nodes.
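Folding the Scale and Rotate nodes of such a chain into one transform amounts to multiplying their matrices, so that pixels are resampled only once. A minimal sketch with plain 3x3 homogeneous matrices; all function names are illustrative and not taken from the compositor code:

```python
import math


def matmul(a, b):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def scale(s):
    return [[s, 0.0, 0.0], [0.0, s, 0.0], [0.0, 0.0, 1.0]]


def rotation(angle_degrees):
    a = math.radians(angle_degrees)
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def apply(m, point):
    """Transform a 2D point by a 3x3 homogeneous matrix."""
    x, y = point
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])


# Image -> Scale -> Rotate: the scale is applied first, so it sits on the right.
combined = matmul(rotation(90.0), scale(2.0))

# One resampling pass with `combined` replaces one pass per transform node.
x, y = apply(combined, (1.0, 0.0))
print(round(x, 6), round(y, 6))
```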

For the size changes with bokeh, I think we need an option on transform nodes to control if the size should change or not. To me both behaviors seem useful.

If we add data/display windows there can be an additional option to keep data outside the display window, so that the bokeh node is unaffected by the data window size change and only looks at the display window.

From the perspective of a user who is not smart enough to fully grasp the concept of deferred realization, it should just be how Brecht described: evaluation from left to right without side effects. Probably easier said than done :D

It's great that the direction of blur after a rotation node is now correct.

Though rotating an image still behaves weirdly:

But you probably have that on your agenda anyway, so nevermind. :)

@sebastian_k Are you sure you are using the Realtime Compositor for those tests? Since it shouldn't behave in the way you showed in the second and third images.

@brecht @Sergey Sounds good then. Design-wise, it should be clear. Though technically, realizing before any non-transform node would be problematic because if a rotate node has multiple outgoing links, the exact realization might happen multiple times, so I need to figure out a way to optimize this out.
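One possible way to avoid the repeated work, shown purely as an illustration and not as what the compositor does or will do, is to cache the realized result per node output so that several outgoing links share it. `RealizationCache` and its string result are hypothetical stand-ins:

```python
class RealizationCache:
    """Hypothetical cache keyed by the node output being realized."""

    def __init__(self):
        self.results = {}
        self.realize_count = 0

    def realize(self, output_id: str, angle_degrees: float) -> str:
        key = (output_id, angle_degrees)
        if key not in self.results:
            # Stand-in for the expensive resampling of the image.
            self.realize_count += 1
            self.results[key] = f"realized {output_id} at {angle_degrees} degrees"
        return self.results[key]


cache = RealizationCache()

# Two filter nodes are linked to the same Rotate output.
for_blur = cache.realize("Rotate.Image", 45.0)
for_sharpen = cache.realize("Rotate.Image", 45.0)

print(cache.realize_count)  # 1
```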

I just did the same test as @sebastian_k but in the realtime compositor, and yes, the bounding box on the foreground layer doesn't seem to be an issue. But the deferred realization definitely is (which is expected after reading all the above).

Oops, sorry, indeed I was testing the normal compositor. Sorry for that.

Now I tried again in the realtime compositor in the viewport, but still getting strange results.

The blur direction seems to be fine with both of the builds you posted, but a large blur still results in artifacts at the edges.

Strangely blurring first and then rotating works okay in the normal compositor, but not in realtime.

These results are expected, knowing that the realtime compositor just applies one operation after the other to the image left-to-right, while the old compositor reaches backwards in some cases.

For both issues, I think what you need is an option to either enlarge the image based on the blur radius and blur the edges, or keep the image size fixed and the edges sharp.

@brecht @sebastian_k I think this is already implemented by the Extend Bounds option of the Blur node, at least for the first issue.

@OmarEmaraDev ah indeed. It doesn't seem to work correctly with the GPU compositor, the edges get blurred but image size is unchanged.

It seems to work for me, maybe it is something else?

You're right. What I found is different behavior of the Viewer node when using the render compositor, nothing related to blur specifically. For GPU it seems to clip to the render resolution, for Tiled it uses the image as is.

Omar Emara referenced this issue 2023-09-13 16:29:55 +02:00

I started looking into making the split viewer node a regular node, see #114245

I do not think the current GPU implementation needs as many changes as this proposal claims.

The `Domain` has a transform and a size that define a rectangle (actually a quad) which I believe is the "data window". The only change to `Domain` is to add a width/height pair to define the `display window`. This is a rectangle that is centered on the origin, and the values are floating point.

The larger of width or height of `display window` would define an `image unit`, which is square in viewing space (not distorted by image shape as it is now). Most operators will pass `display window` through unchanged (Scale is an exception).

Nodes would figure out an `output domain` given all their inputs' `domains`, plus a `desired domain` (the viewport in most cases). Usually this will be the transform of `desired domain` but with the "data windows" projected and unioned and `display window` copied from the first input. Some operators can deal with arbitrary, or only affine, or only scale+translate transforms between `output domain` and `desired domain`. They may also want to crop it.

After that the node can transform all its inputs to `output domain`, before doing a calculation. Some nodes can deal with arbitrary transforms, or scale + translate, most can deal with different data windows, so full transform is not always required.

This system will fully support overscan and crop, lossless read+write of EXR files, pixel aspect ratio, concatenating transforms, operators that can work on transformed images, and running the composite at a reduced resolution.