Compositor: Procedural texturing, Step 1 #112732


6 Participants


Reference: blender/blender#112732


This is a design task about improving procedural texturing in the Compositor.

Currently, procedural texturing in the compositor is only available via texture nodes, which use a very old and limited set of nodes, do not integrate well with the compositor, and are rather cumbersome to set up.

One of the important design principles to keep in mind is to consider this project part of a more global "everything nodes" picture. This means that the changes done in the compositor should be aligned with the idea of having the same subset of nodes in all node systems (geometry, shading, compositor, and texture node systems). That being said, it is not a goal of this specific project to allow sharing node groups across node trees, but rather to extend the user functionality of the compositor in a way that does not make better unification harder to achieve. The unification, and allowing node copy-paste and node group reusability between different systems, is important, but it will be done as a separate project.

Fortunately, it does not take much to greatly improve the procedural texturing workflow in the Compositor. The immediate focus can be on adding support for the following nodes:

- Texture Coordinate
- Noise Texture

Adding support for these two nodes already unlocks a huge amount of potential for artists, and it allows finding a good integration between the compositor and function nodes.

Texture Coordinate Node

The Texture Coordinate node outputs a "non-squished" normalized coordinate. "Non-squished" means that a given normalized distance converts to the same number of pixels along both the X and Y axes. Doing so avoids the need for an explicit aspect ratio correction in the graph.

The "non-squished" property is achieved by normalizing the pixel-space coordinates by the largest image dimension. For example, for Full HD (1920x1080) the texture coordinates lie within (0, 0) to (1.0, 0.5625).
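A minimal sketch of that normalization, assuming positions are given as continuous pixel-space values (the function name is hypothetical and not part of Blender's API):

```python
def non_squished_coordinate(pixel_x, pixel_y, width, height):
    """Normalize a pixel-space position by the largest canvas dimension.

    Both axes share one divisor, so a unit step covers the same number of
    pixels along X and Y and no aspect-ratio correction is needed.
    """
    largest = max(width, height)
    return pixel_x / largest, pixel_y / largest


# Full HD canvas: the far corner maps to (1.0, 0.5625).
print(non_squished_coordinate(1920, 1080, 1920, 1080))  # (1.0, 0.5625)
```

Whether pixel centers or pixel corners are used is not specified here; the sketch simply normalizes whatever position it is given.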

The most useful approach seems to be to make the node operate in local space. In code terms, this means basing it on the node's `determine_canvas()` and its `get_width()`/`get_height()`.

Noise Texture

The immediate requirement is to reuse the `SH_NODE_TEX_NOISE` node declaration. The integration into the compositor system would need some boilerplate code to make it fit into `Node` and `NodeOperation` (and the GPU compositor-specific functions as well). Reusing existing node type identifiers gives the flexibility to do whatever is needed for the short-term system expansion without locking ourselves into a situation where it is much harder to unify the system further.

The easiest option is to simply follow the current compositor design and call `noise::perlin_float3_fractal_distorted` from the node operation's `execute_pixel_sampled()`. However, the belief is that it is possible to create a more generic implementation of `NodeOperation` that uses the node type's multi-function implementation, which could then easily be used for any multi-function-capable node (noise, brick texture, math, etc.).

An open topic is the GPU compositor integration. So far it seems easier and more practical to allow some code duplication until #107073 is handled.
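To illustrate the generic approach, here is a Python sketch of the idea only; Blender's actual multi-function system is C++ and has its own API, which this does not reproduce. The hypothetical `evaluate_on_canvas` operation builds coordinates for every pixel, hands them to a node's batch function in a single call, and reshapes the result, so one operation can serve any node that can evaluate whole arrays:

```python
from typing import Callable, List, Sequence

# Hypothetical stand-in for a node's multi-function: it receives whole input
# arrays (one entry per pixel) and returns one output array of the same length.
BatchFunction = Callable[[Sequence[float], Sequence[float]], List[float]]


def evaluate_on_canvas(width: int, height: int, fn: BatchFunction) -> List[List[float]]:
    """Generic operation: build coordinates for every pixel, call fn once, reshape."""
    largest = max(width, height)
    xs: List[float] = []
    ys: List[float] = []
    for y in range(height):
        for x in range(width):
            # Non-squished normalized coordinates, as in the Texture Coordinate design.
            xs.append(x / largest)
            ys.append(y / largest)
    flat = fn(xs, ys)
    # Split the flat result back into image rows.
    return [flat[row * width:(row + 1) * width] for row in range(height)]


def gradient_x(xs: Sequence[float], ys: Sequence[float]) -> List[float]:
    """Toy batch function standing in for noise, math, brick texture, etc."""
    return list(xs)


print(evaluate_on_canvas(4, 2, gradient_x))
# [[0.0, 0.25, 0.5, 0.75], [0.0, 0.25, 0.5, 0.75]]
```

The operation knows nothing about noise or math; only the batch function does, which is what would let the same operation back many node types.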

The rest of the function nodes

Once the Noise Texture node is integrated, it becomes possible to add support for the rest of the nodes: the Magic, Voronoi, ... textures.

Some shader nodes are actually a superset of their compositor counterparts, for example Math and RGB Mix. These can also be used via multi-functions. So the idea is to deprecate the compositor-specific node types and replace them with the shader node types, making these nodes available in all node systems.

Bigger picture thoughts

The points above are mainly inspired by the question of what we can bring to the artists team in the short term for the Gold project R&D.

On an architectural level, the bigger question is whether fields need to become an integral part of the compositor. The desired answer is that we will not go into bigger redesigns until the compositor systems are unified on a user level (Full Frame + GPU).

There is a discussion in an adjacent task #98940 about similar topics but in a different system.

The proposal seems straightforward; my only objection is to the design of the Texture Coordinates node. I do not think the Texture Coordinates data should exist as part of such a node, nor do I think it should be determined by the inferred canvas.

For the shading system, it is very easy to identify what the output of the Texture Coordinates node is, it is consistent no matter what the upstream node tree is, and the evaluation domain is fixed and solely determined by the surface/volume in question.

On the other hand, if the compositor had a similar node whose evaluation domain is determined by the inferred canvas, it would not be clear what the output is, because it is determined by the upstream node tree, and the evaluation domain could constantly change as the user adjusts the node tree.

The aforementioned design will suffer from the same bad side effects that I demonstrated in "Section 6: Size Inference" of my document linked in #108944. Furthermore, it breaks the "Compositor is evaluated from Left to Right" design, forcing the user to look ahead instead of just working from inputs to outputs.

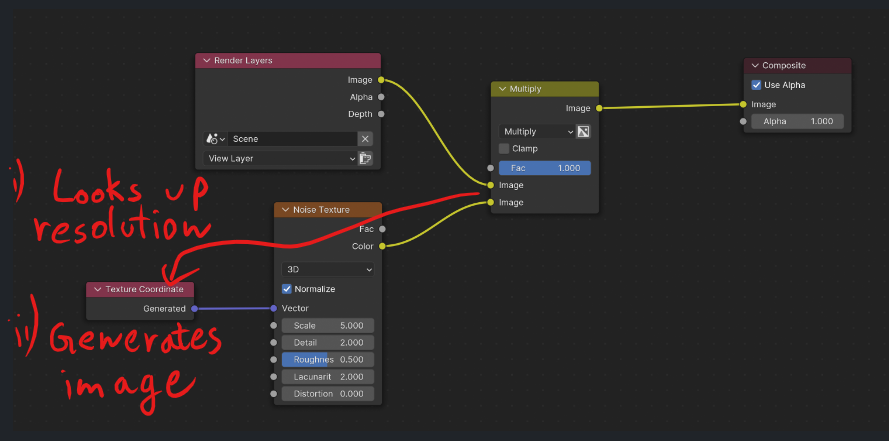

My proposal, instead, is to introduce two nodes, called the Image Info and Render Info nodes. The Image Info node takes an image and returns:

The Render Info node will have no inputs and will return:

Both nodes will have deterministic outputs that depend solely on their inputs or the scene. Furthermore, the extra information returned will be useful for procedural artists and allow things like evaluating the texture in global space instead of local space.

Indeed, the strict left-to-right notation is violated by this design, something I should have mentioned more explicitly in the original write-up. Overall, it seemed the lesser evil for achieving the desired artistic control.

There might be some tricks to make it clearer what resolution the nodes operate on, or maybe there is a trick to make it strictly left-to-right.

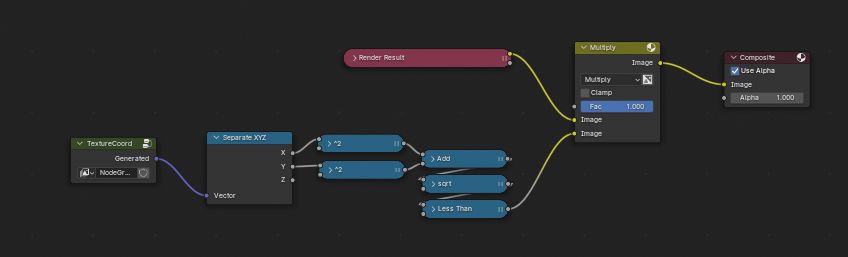

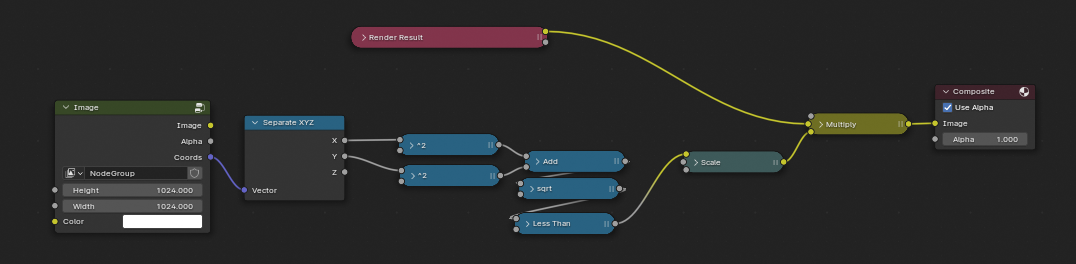

With your proposal, I am not really sure how the Image Info node would be used. For example, if you want to generate some noise and multiply it into a render pass, how would you do it? With the current PoC patch it is as simple as:

On a user level, the Texture Coordinate would ideally be implicit, without the need to hook it up manually.

With your proposal, would the "Texture Coordinate" node be replaced with an "Image Info" node, and the render pass be plugged into both the "Mix" and "Image Info" nodes?

That's correct.

To give a practical example of why the Texture Coordinates node can be confusing, consider a setup where we create a procedural vignette and multiply it with some lens dirt texture, then apply the result to the render result. What will the Texture Coordinates node return: the texture space of the render or the texture space of the lens dirt texture? What if the user's intention was the reverse of what the compositor did?

Further, consider the case when only the Viewer output is connected, which is not constrained by the render size. Whatever behavior we choose in that case, it will likely change when the user connects the setup to the Composite output or to other node tree components. Reports like #97385 could give you an idea of that confusing behavior.

So I really think we should go with a more explicit approach even if it means an extra link or node.

I can also prepare a test build for my proposal in case we want to do some practical testing.

I'd just note that the combination of left-to-right and right-to-left notation is handled in Geometry Nodes by drawing field links differently. One benefit of that design is shareability, since reusable node groups can be built for procedural effects that don't need an image input.

I made a case for fields in shader nodes in #112547. I think that's worth exploring for the compositor too, since some of the same reasons, like reusability and high-level control, should apply here as well. Depending on how things work right now, that could be an extra visualization of existing behavior, or it might require changing existing behavior significantly. Anyway, worth considering IMO.

We could indeed use some of the visualization techniques employed by Geometry Nodes, since the compositor already has similar mechanisms internally.

However, my main issue with fields is their implicit evaluation context. Our data flow nodes are not as clear-cut as those in Geometry Nodes, since they double as both function nodes and data flow nodes depending on their inputs. And even if data flow nodes were clear-cut, evaluating the field at each of the data flow nodes is probably not what the user intends. This was demonstrated in my test above.

More generally, I think introducing fields in the compositor would complicate an intrinsically simple system that operates on images as objects, noting that one should not assume the average compositor user is familiar with Geometry Nodes.

Shaders are much simpler to adapt to fields because the field context is the shader context, so the user can even read the field from left to right with no loss of correctness. That said, my knowledge is lacking in that area, so maybe the picture is a bit different than I imagine.

Slightly related but also slightly off topic, I think it would be beneficial to have a concept of Core nodes that are available in Shader, GN and Compositor to unify some aspects of node use for users. Code-wise, some function/shader nodes are already implemented in C++ and GLSL and can easily be ported to the compositor. A Core node concept could also be extended to core features such as loops and groups/assets. I guess some of the challenges here are the data types and socket names that are used.

@CharlieJolly I think that is already the plan. And Sergey already prototyped something like this in #112733.

@LukasTonne @erik85 @HooglyBoogly I'm in the process of solving this problem right now, in the volume context (see #103248, #110044, ... #103248 (comment)).

But unlike volumes, it seems to me that it is still much easier to show an example with classical fields.

Set Position node from geometry nodes). Ignore the fact that statistics require explicit geometry input; this will be lost with the advent of field groups, and a lot of users don't like it.

I think it is still possible to follow the explicit flow by plugging inputs explicitly. I think something similar happens in Geometry Nodes as well. I also believe that with good visualization in the graph, the confusion will be resolved in a nice way.

To me it is more of a decision between sticking to something that is very familiar to VFX artists (strict left-to-right) and unifying behavior and principles with other node systems in Blender. The appeal of the former is that it is very easy for VFX artists to jump from their software of choice to Blender and be up to speed. The appeal of the latter is the flexibility, and that artists do not need to learn the details of multiple node systems in Blender.

I have been busy with lots of other things since I initially wrote this summary and have not had much time to move it forward. Hopefully very soon I'll be able to sit down with Jacques and Hans and come to an aligned design! In the meantime, thanks everyone for the input, it really does help :)

I'll add a use case where the user wants to create an Iris Out effect, along with some solutions to help with the decision, which I think would also make the compositor useful for procedural texture generation. Maybe these are better suited for the texture node editor, depending on how you guys are planning to rework it. One of the reasons the compositor isn't currently suited for procedural textures is that it lacks a 3D preview of height maps.

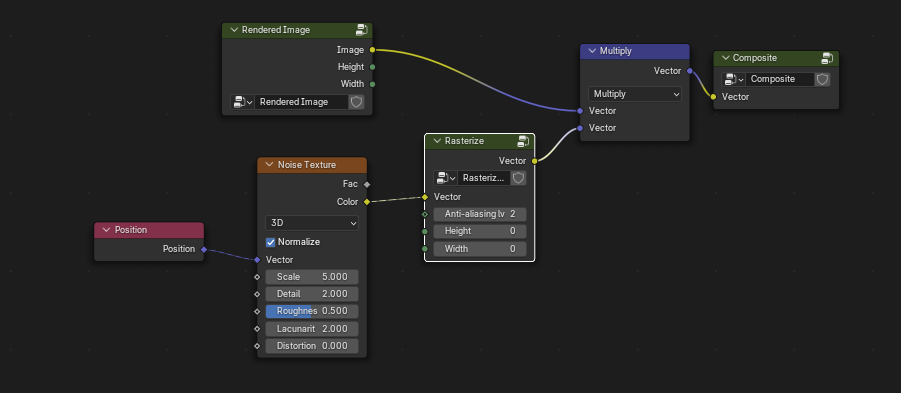

If we go with the geo nodes fields setup:

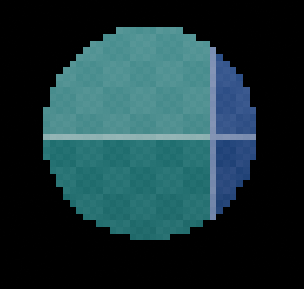

If we evaluate on the input image pixel domain, we'll get something like this:

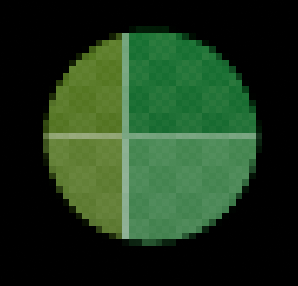

While the user might want something like this:

To give the user the choice, we could add an anti-aliasing setting somewhere (see the Rasterize node below), or they could use the setup below:

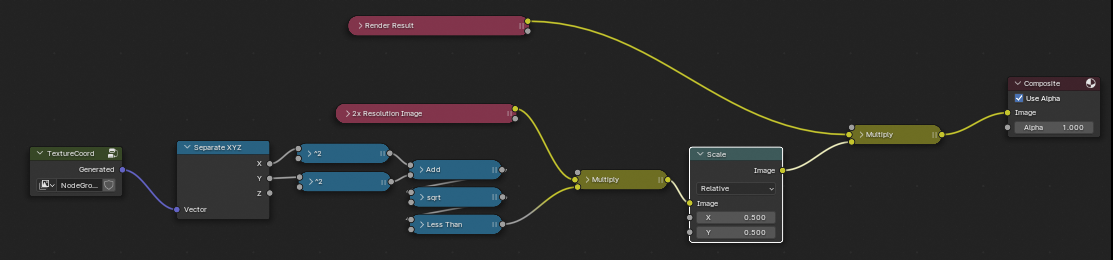

which evaluates on a higher-resolution domain and then scales it down to imitate anti-aliasing.
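The "evaluate at a higher resolution, then scale down" setup amounts to supersampling with a box filter. A minimal sketch, assuming a hard-edged circular iris mask and a plain block average for the downscale (the function names are hypothetical, and the filtering of the actual Scale node may differ):

```python
def iris_mask(width, height, radius, scale=1):
    """Hard-edged circular mask, evaluated at `scale` times the target resolution."""
    w, h = width * scale, height * scale
    cx, cy, r = w / 2, h / 2, radius * scale
    mask = []
    for y in range(h):
        row = []
        for x in range(w):
            # Test the pixel center: inside the circle -> 1.0, outside -> 0.0.
            dx, dy = x + 0.5 - cx, y + 0.5 - cy
            row.append(1.0 if dx * dx + dy * dy <= r * r else 0.0)
        mask.append(row)
    return mask


def box_downscale(image, factor):
    """Average each factor x factor block into one pixel (a simple box filter)."""
    h, w = len(image) // factor, len(image[0]) // factor
    return [
        [
            sum(
                image[y * factor + j][x * factor + i]
                for j in range(factor)
                for i in range(factor)
            )
            / (factor * factor)
            for x in range(w)
        ]
        for y in range(h)
    ]


hard = iris_mask(8, 8, radius=3)  # only 0.0 or 1.0: aliased edge
smooth = box_downscale(iris_mask(8, 8, radius=3, scale=4), 4)  # fractional edge pixels
```

The cost grows with the square of the scale factor, which is part of why an explicit anti-aliasing setting is attractive.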

In my opinion, the forward-only evaluation system shown below can be more intuitive.


Rasterize Node

Another solution is to differentiate between pixel image data flow and continuous functions, and then use a Rasterize node to explicitly convert the function to pixel data. This would eliminate the need to look up the resolution from the nodes to the right, as required in @Sergey's PoC:

We can use diamond and circle sockets to differentiate between the two types:

Notice the anti-aliasing level input, which eliminates the need to scale up and down.

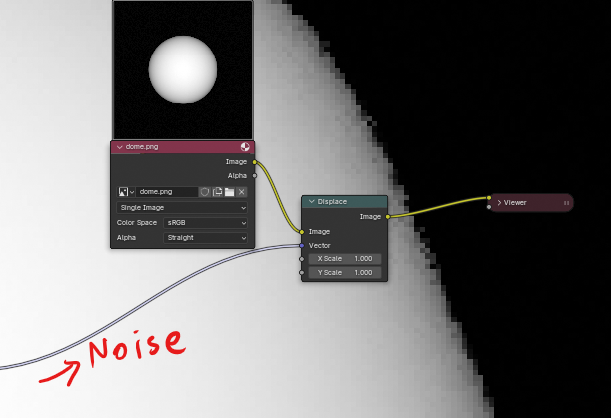
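One way a Rasterize node with an anti-aliasing level could behave is sketched below, assuming the continuous function takes non-squished normalized coordinates and that the anti-aliasing level means an N x N grid of subsamples per pixel. Both assumptions, and the names `rasterize` and `iris`, are illustrative rather than part of the proposal:

```python
def rasterize(fn, width, height, aa_level=1):
    """Sample a continuous function fn(u, v) onto a width x height pixel grid.

    Each pixel averages an aa_level x aa_level grid of subsamples, so no
    separate upscale/downscale step is needed for anti-aliasing.
    """
    largest = max(width, height)
    n = aa_level
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            total = 0.0
            for j in range(n):
                for i in range(n):
                    # Subsample at the centers of an n x n sub-grid of the pixel.
                    u = (x + (i + 0.5) / n) / largest
                    v = (y + (j + 0.5) / n) / largest
                    total += fn(u, v)
            row.append(total / (n * n))
        image.append(row)
    return image


def iris(u, v, center=(0.5, 0.5), radius=0.375):
    """Continuous hard-edged disc: 1 inside, 0 outside."""
    du, dv = u - center[0], v - center[1]
    return 1.0 if du * du + dv * dv <= radius * radius else 0.0


preview = rasterize(iris, 8, 8, aa_level=1)  # aliased, only 0.0 or 1.0
final = rasterize(iris, 8, 8, aa_level=4)  # edge pixels get fractional coverage
```

Because the resolution is an explicit parameter, the same function can be rasterized for a quick preview and again for the final output without looking at downstream nodes.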

Warping and Execution domain

It is important to visually indicate which pixel domain a node works on. For example, the Color Mix node works on the domain of the first image plugged in.

Say a user wants to do a warp operation.

Consider the Displace node, which works on the input image pixel domain:

The result looks quite grainy. If the user wants to smooth it out, the only option I can think of is using a higher-resolution input image and then scaling it down.

Since the noise texture is procedural, we could produce a noise image at any required resolution if we had the Rasterize node.

There should be a mechanism that lets you execute the node on the domain of the displacement vector input. Maybe a switch on the Displace node, or another Offset Sample/Reverse Displace node.
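A sketch of what executing the warp on the displacement vector's domain could mean: the output takes the resolution of the offset field, and each output pixel gathers from the source image with bilinear interpolation. Offsets in normalized units and the names used here are assumptions for illustration, not the Displace node's actual behavior:

```python
def sample_bilinear(image, u, v):
    """Bilinearly sample `image` (rows of floats) at normalized coords in [0, 1]."""
    h, w = len(image), len(image[0])
    # Convert to continuous pixel coordinates (pixel centers at +0.5), clamped.
    px = min(max(u * w - 0.5, 0.0), w - 1.0)
    py = min(max(v * h - 0.5, 0.0), h - 1.0)
    x0, y0 = int(px), int(py)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = px - x0, py - y0
    top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
    bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def displace_on_vector_domain(image, offsets):
    """Warp `image` on the domain of `offsets`, a grid of (du, dv) pairs.

    The size of `offsets` sets the output resolution, so a fine offset field
    yields a fine result even when the source image is small.
    """
    h, w = len(offsets), len(offsets[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            du, dv = offsets[y][x]
            u = (x + 0.5) / w + du
            v = (y + 0.5) / h + dv
            row.append(sample_bilinear(image, u, v))
        out.append(row)
    return out
```

With this arrangement, a procedural offset field rasterized at a high resolution would drive a smooth warp without first upscaling the source image.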

@alan_void I feel like the proper solution in your use case would be to either use a smooth thresholding function or use the Anti-Alias node. The advantage of the former approach is that we won't have to rasterize the data beforehand.
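A minimal sketch of the smooth-thresholding option, using a smoothstep falloff on the distance from the iris center (the same Hermite formula as GLSL's `smoothstep`). The `softness` parameter and the function names are hypothetical. Because the mask is evaluated directly from continuous coordinates, the edge stays smooth at any sampling resolution without rasterizing first:

```python
def smoothstep(edge0, edge1, x):
    """Hermite smoothstep: 0 below edge0, 1 above edge1, smooth in between."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def soft_iris(u, v, center=(0.5, 0.5), radius=0.375, softness=0.02):
    """Iris mask that is 1 inside, 0 outside, blended across +/- softness."""
    dist = ((u - center[0]) ** 2 + (v - center[1]) ** 2) ** 0.5
    # 1 - smoothstep turns the distance past the edge into a falloff.
    return 1.0 - smoothstep(radius - softness, radius + softness, dist)


edge_profile = [soft_iris(0.5 + d, 0.5) for d in (0.30, 0.36, 0.375, 0.39, 0.45)]
```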

Either way, inferring the coordinates or deriving them from an input image should work as you might expect, because the Scale node would be properly taken into account when inferring the coordinates.