WIP: Fix: Respect Blender Cycles setting for GPU denoising #118841

No reviewers

Labels

No Label

Interest

Alembic

Interest

Animation & Rigging

Interest

Asset Browser

Interest

Asset Browser Project Overview

Interest

Audio

Interest

Automated Testing

Interest

Blender Asset Bundle

Interest

BlendFile

Interest

Collada

Interest

Compatibility

Interest

Compositing

Interest

Core

Interest

Cycles

Interest

Dependency Graph

Interest

Development Management

Interest

EEVEE

Interest

EEVEE & Viewport

Interest

Freestyle

Interest

Geometry Nodes

Interest

Grease Pencil

Interest

ID Management

Interest

Images & Movies

Interest

Import Export

Interest

Line Art

Interest

Masking

Interest

Metal

Interest

Modeling

Interest

Modifiers

Interest

Motion Tracking

Interest

Nodes & Physics

Interest

OpenGL

Interest

Overlay

Interest

Overrides

Interest

Performance

Interest

Physics

Interest

Pipeline, Assets & IO

Interest

Platforms, Builds & Tests

Interest

Python API

Interest

Render & Cycles

Interest

Render Pipeline

Interest

Sculpt, Paint & Texture

Interest

Text Editor

Interest

Translations

Interest

Triaging

Interest

Undo

Interest

USD

Interest

User Interface

Interest

UV Editing

Interest

VFX & Video

Interest

Video Sequencer

Interest

Virtual Reality

Interest

Vulkan

Interest

Wayland

Interest

Workbench

Interest: X11

Legacy

Blender 2.8 Project

Legacy

Milestone 1: Basic, Local Asset Browser

Legacy

OpenGL Error

Meta

Good First Issue

Meta

Papercut

Meta

Retrospective

Meta

Security

Module

Animation & Rigging

Module

Core

Module

Development Management

Module

EEVEE & Viewport

Module

Grease Pencil

Module

Modeling

Module

Nodes & Physics

Module

Pipeline, Assets & IO

Module

Platforms, Builds & Tests

Module

Python API

Module

Render & Cycles

Module

Sculpt, Paint & Texture

Module

Triaging

Module

User Interface

Module

VFX & Video

Platform

FreeBSD

Platform

Linux

Platform

macOS

Platform

Windows

Priority

High

Priority

Low

Priority

Normal

Priority

Unbreak Now!

Status

Archived

Status

Confirmed

Status

Duplicate

Status

Needs Info from Developers

Status

Needs Information from User

Status

Needs Triage

Status

Resolved

Type

Bug

Type

Design

Type

Known Issue

Type

Patch

Type

Report

Type

To Do

No Milestone

No project

No Assignees

2 Participants

Notifications

Due Date

No due date set.

Dependencies

No dependencies set.

Reference: blender/blender#118841

Loading…

Reference in New Issue

No description provided.

Delete Branch "Sirgienko/blender:address_gpu_denoising_settings_respect"

Deleting a branch is permanent. Although the deleted branch may continue to exist for a short time before it actually gets removed, it CANNOT be undone in most cases. Continue?

Previously, GPU denoisers were ignoring settings about render

configuration and were using any available GPU. With these changes,

GPU denoisers will use the device selected in Blender Cycles

settings.

This allows any GPU denoiser to be used with CPU rendering.

@brecht Can you please take a look on this solution? It is about respecting Blender setting for GPU denoising device selection (both OptiX and OIDN). I think it make sense to get this fix to clarify UX for the users, but the patch is not small, so I would understand if you would want this to be postponed for 4.2.

Also, I could actually address this behavior with more easy to implement approach that "Device" UI option, which is defining device used for render, should affect denoising device as well - but then it would be not possible to use GPU denoising during CPU render, which may be not very popular configuration, but I would expected that some people may use it so it wouldn't be nice to remove this functionality even if wasn't intended initially.

A clarification about how it is working right now and how it suppose to work with this change.

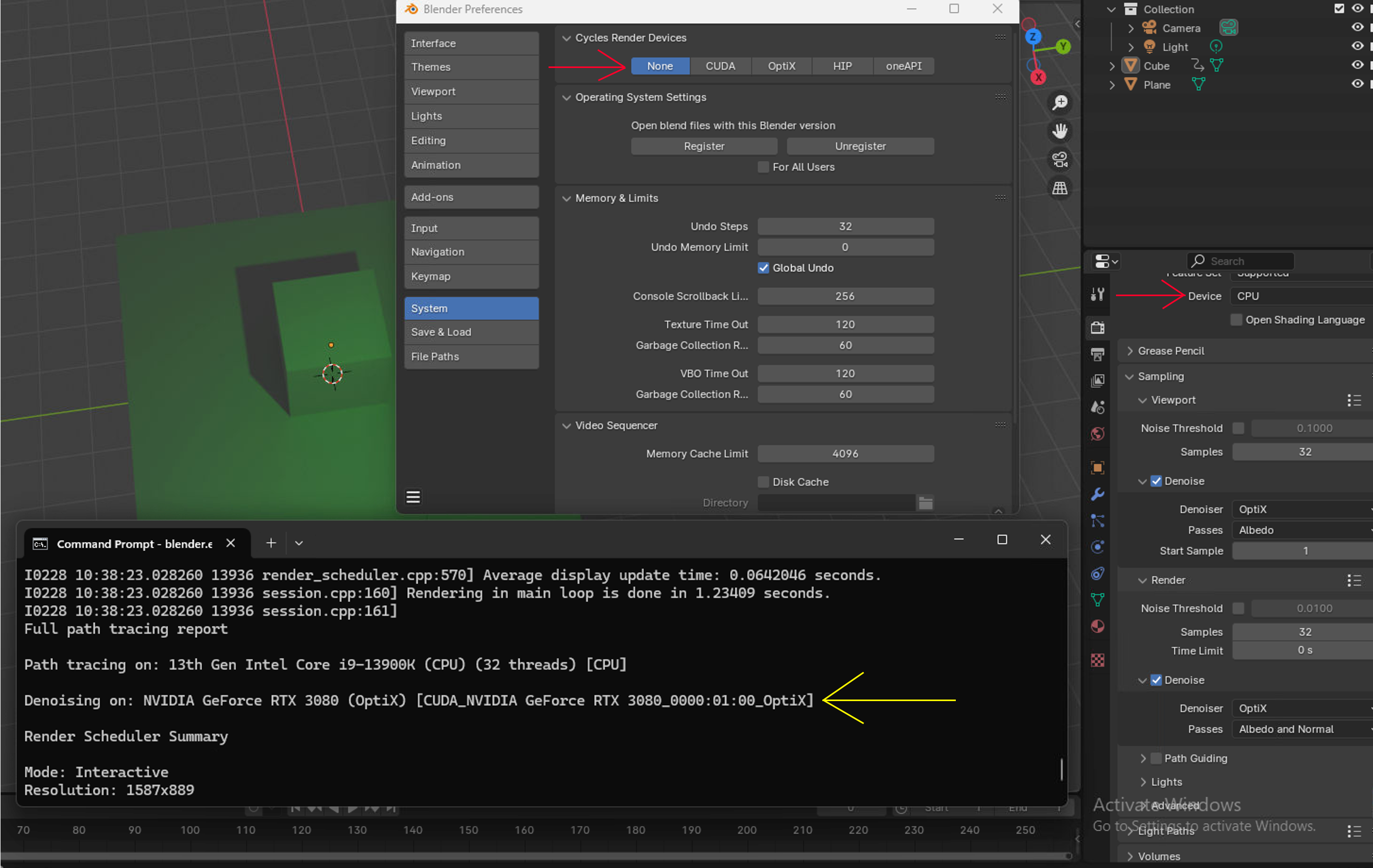

Current behavior - despite Cycles preference (red arrows), denoising is happening (yellow arrow) on first found GPU device:

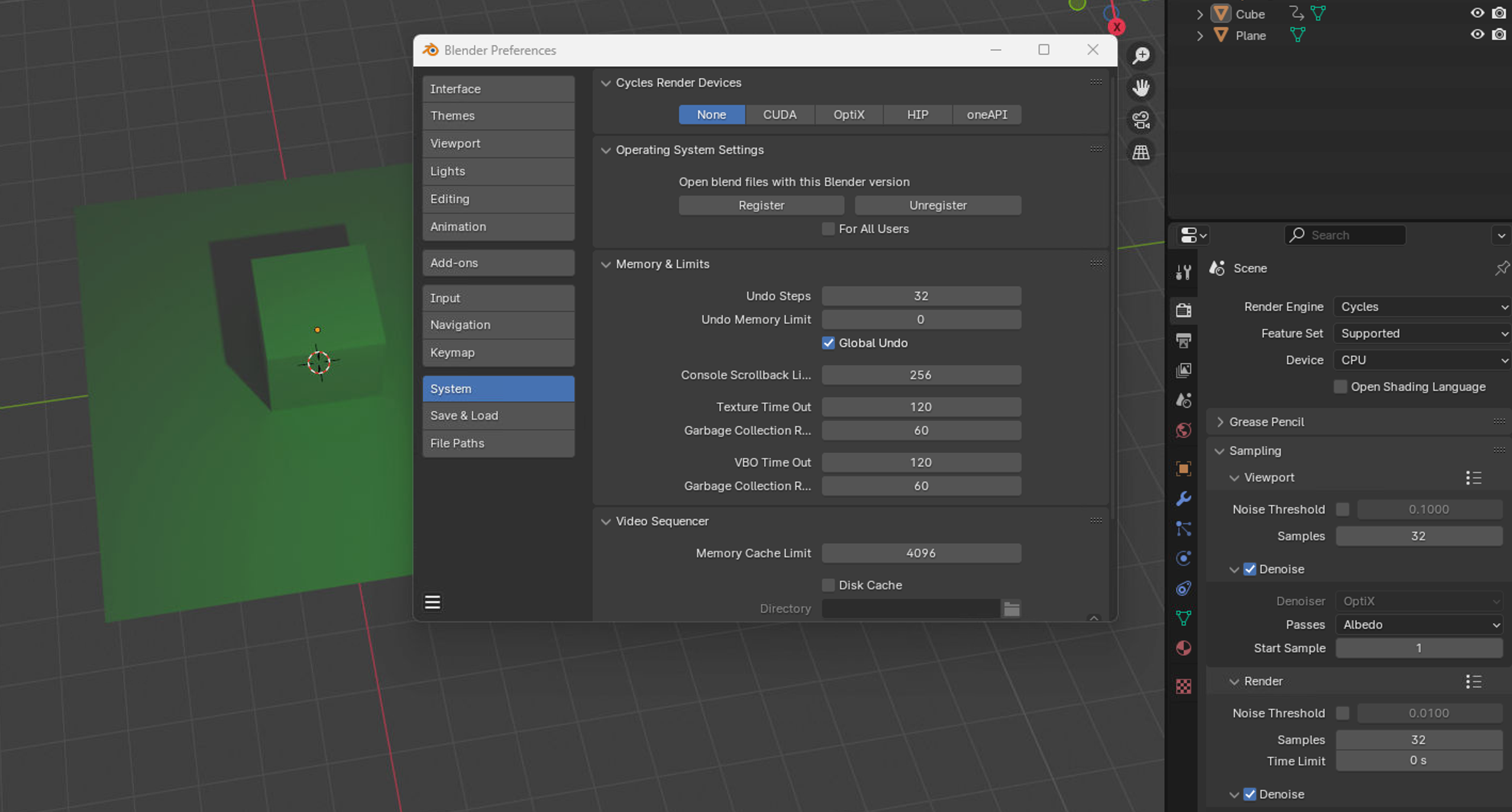

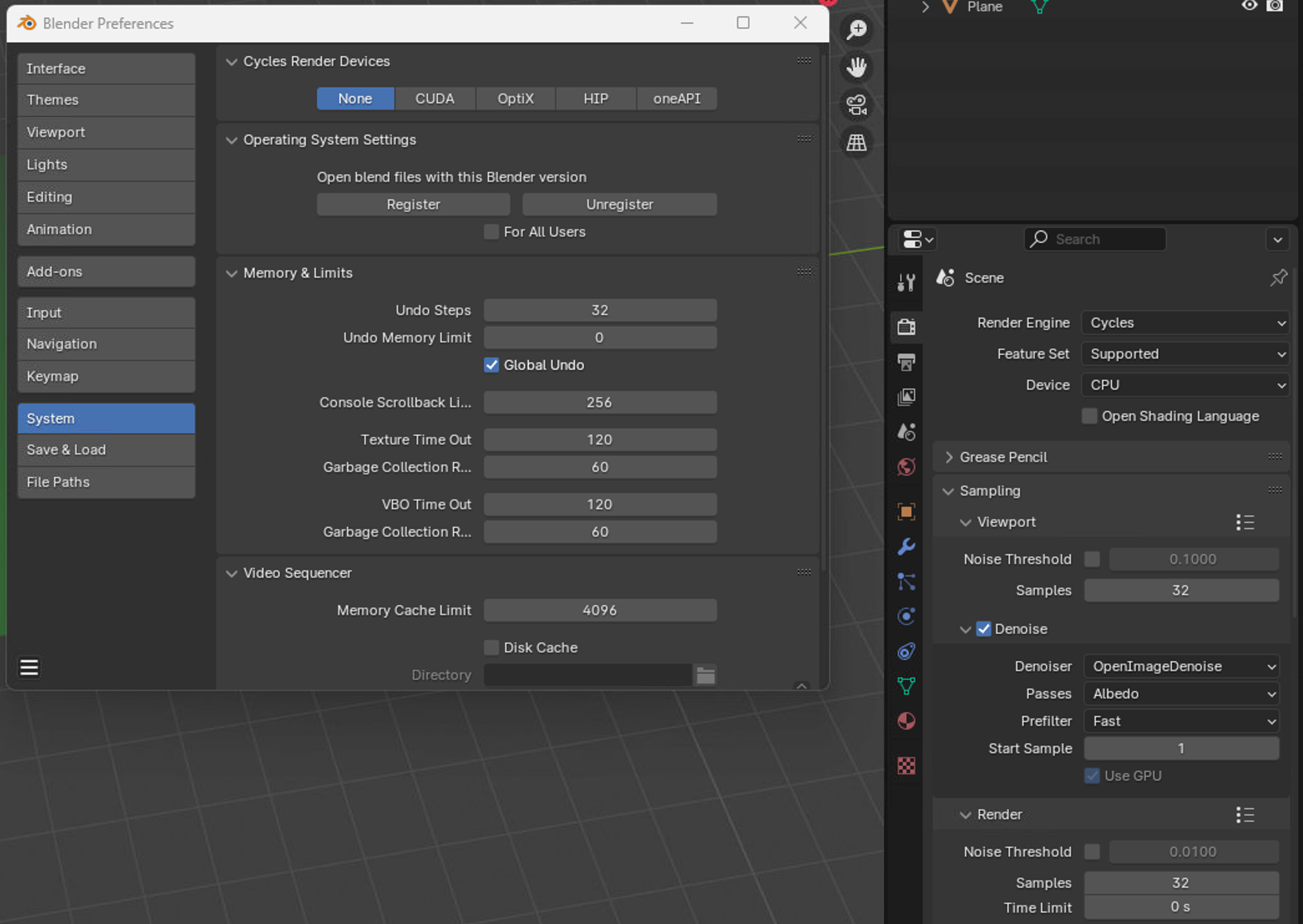

Behaviour with this changes - if None or CPU devices or GPU devices, which are not supporting GPU denoising are selected in the Cycles preferences, then GPU denoising options (Optix denoiser and "Use GPU" OIDN option) will be grayout out and won't be applied, as denoising will happen on CPU:

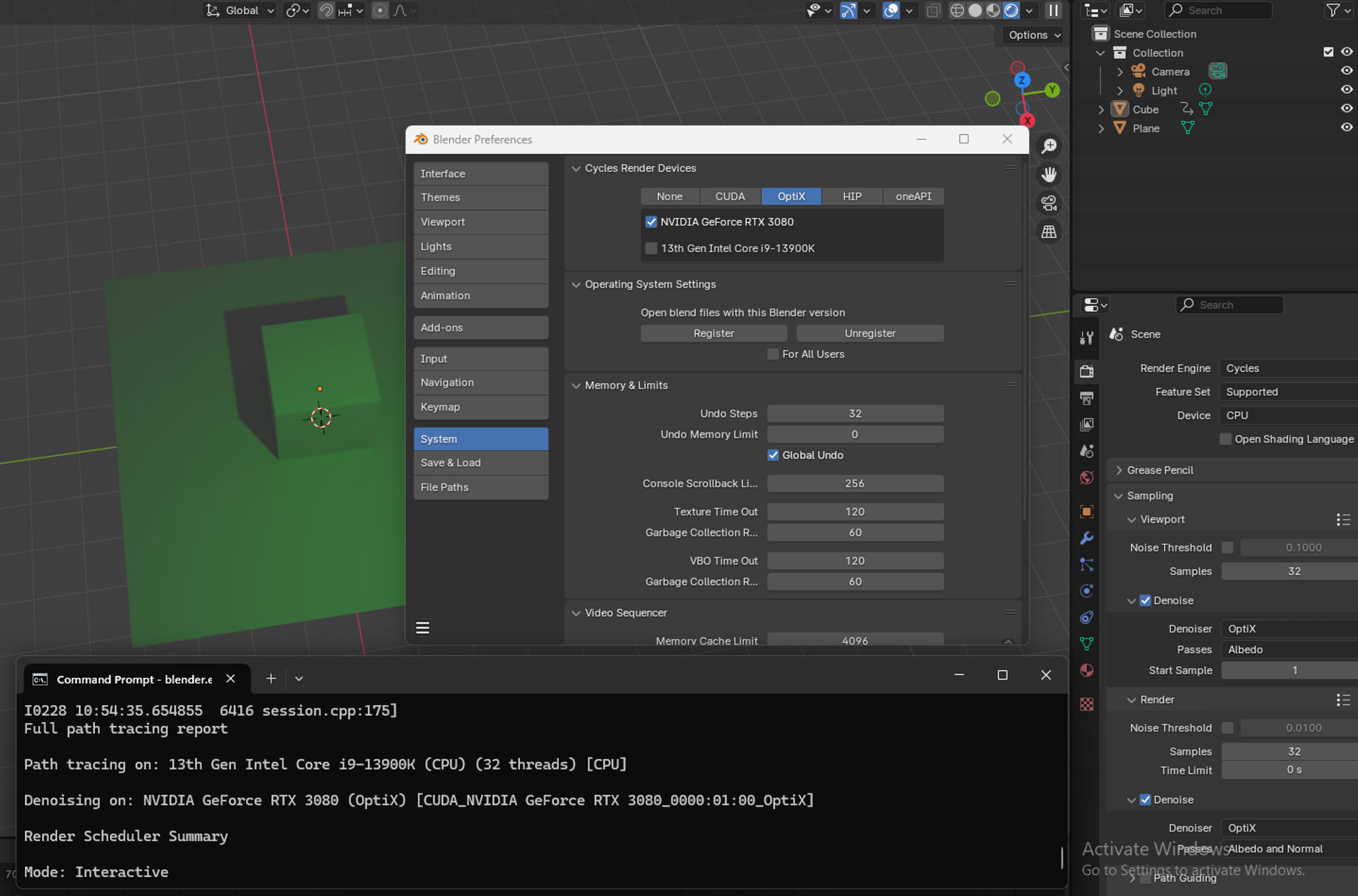

And when GPU devices, which is supporting GPU denoising, is selected in the Cycles preferences, then this GPU denoising options won't be grayed out and will apply according to the selection:

So in a nutshell: Right now there are a per-scene denoisers configuration options, which are not aligned with user's Cycles preferences and not respecting them. With this change, it will be addressed and GPU denoising device selection will work in the same approach as "Device: GPU compute" is working right now - preferences will determine which device will be used and if compatible device is not selected, then the UI option will be grayed out and CPU fallback will be used.

ffb4f5bdf6to07968d7e0e07968d7e0eto83b084ab3dThis seems too risky this close to the release, it's hard to get this properly user tested in such a short time. Particularly the support for CPU rendering I would rather postpone. If there is a bugfix we can consider it, but hopefully the change is simpler then.

This adds a denoise device in

Session, but there already exists alocal_denoiser_device_inDenoiser. Isn't it possible to use that instead?The way I understand the code in

Denoiser::ensure_denoiser_devicenow, is that it will already try to use one of the path tracing devices for denoising. So in that sense it respects the choice of GPUs, except if you happen to be path tracing with a GPU that doesn't support OIDN and have another GPU that does. We could change the logic so it doesn't do that, but it seems a pretty rare corner case.Understandable, and unfortunately this is the easiest solution which I have found so far, so I suppose it will be indeed change for the 4.2 LST, I will update milestone information accordingly.

No, I don't think that is possible to use only local_denoiser_device_, because

Denoiserclass is not taking into account Blender preferences and Scene settings when determining the local_denoiser_device ("Denoiser device created to perform denoising when none of the rendering devices are capable of denoising."). This is because the Denoiser class does not have access to these settings, as only few Blender Cycles classes and functions are having it - intern/cycles/blender/session.cpp and intern/cycles/blender/python.cpp, in particular.However, the

Sessionclass does have indirect access to preference information, as this data is passed in the form of aSessionParamsstruct to theSessionconstructor. And the class responsible for creating theSessionobject is expected to populate the SessionParams structure with the appropriate information based on the Preferences and Scene settings. This for example how it is done in theBlenderSession::create_session()method and in theBlenderSync::get_session_paramsmethod, which constructs the SessionParams structure.Essentially, once denoising is allowed on devices that differ from the path tracing devices, we should employ the same method used for path trace devices to ensure reasonable UI/UX behavior. The denoising devices should be determined based on the Cycles preferences and Scene settings by a class that has access to both. Then this information in a form of

DeviceInfoshould be passed through various Cycles classes, until it will reach the relevant class (in this case a class aboveDenoiserdue to memory management approach). The relevant class then will create a Device object from this information and thenDenoiserwill utilize thisDeviceobject for computations.Approach

it will already try to use one of the path tracing devices for denoisingis leading to the situation, that we could easily use GPU denoising with GPU path tracing and CPU denoising with CPU path tracing. But as soon as we want to have CPU render and GPU denoising -ensure_denoiser_devicewill work in quite strange way, in my opinion.For example, for OIDN,

ensure_denoiser_devicewill never able to find GPU device for denoising when path tracing is happening on CPU - because path tracing CPU device will be checked first inensure_denoiser_device, and CPU device (almost always) is supporting OIDN denoiser, so no futher search will be conducted.For the OptiX denoiser, this is not an issue because OptiX is not supported on CPU. However, there is still an uncontrollable aspect regarding which NVIDIA device will be used for GPU denoising when path tracing is happening on CPU. As I see, the code in "find_best_device" prefers an NVIDIA GPU which is connected to a display. This may be acceptable for the Blender's user, but if they prefer otherwise, then there is currently no way to control this behavior. Because the selection of an NVIDIA GPU is hardcoded in the source code.

And, as I have mentioned above,

Denoiserclass do not have access to the Cycles preference and Scene settings, so this information can't be used inensure_denoiser_device- as I see, this is a reason why user have so little control overensure_denoiser_devicebehaviour.Another issue, in my view, is that we never actually use the

Denoiser::ensure_denoiser_devicemethod directly; instead, it is called from its subclasses, such asOptiXDenoiser,OIDNDenoiser, andOIDNDenoiserGPU. In these instances, we have already partially determined the device we intend to use for denoising—if we create anOptiXDenoiserobject, for example, it implies we wantOptiXdenoising on an NVIDIA GPU. Therefore, I believe that ideally, we should not have theensure_denoiser_devicemethod at all. The decision on which device to use for denoising should be made at the same level where we decide which denoiser subclass to instantiate.I think we should not have both

local_denoiser_device_inDenoiser, anddenoise_deviceinSession, it should be one or the other. So if this denoiser device is added at the Session level, that should then fully replace the denoiser device detection and creation at the Denoiser level.It's hard for me to guess which way leads to the simplest implementation. But I'm guessing it's best to remove a bit of abstraction in Denoiser, and make

Denoiser::createresponsible for creating the denoiser device before theDenoiserinstance. That way the logic does not get spread out to the Session, and the confusing flow with subclasses creating the device is avoided.Fix: Respect Blender Cycles setting for GPU denoisingto WIP: Fix: Respect Blender Cycles setting for GPU denoisingCheckout

From your project repository, check out a new branch and test the changes.