Replace Default OCIO config with AgX (Filmic v2) #106355

Reference: blender/blender#106355

Note: some of the information here is outdated. For current information, see the GitHub repo:

https://github.com/EaryChow/AgX

What?

Eary's AgX is an OCIO v2 configuration made with the intention of replacing Blender's default config, to give Blender better color management and image formation for the upcoming Spectral Cycles.

The config was built more specifically for Blender, but other software that supports OCIO v2 should also be able to use it.

The two featured image formations (view transforms) are Guard Rail and AgX. Guard Rail is targeted as a replacement for Blender's "Standard", while AgX is targeted as a replacement for "Filmic".

The name "AgX" is a pseudo-chemical notation for silver halide, which is commonly used in photographic film. AgX is therefore an alias of Filmic.

AgX is similar to Filmic in being a sigmoid-driven image formation, while Guard Rail is a minimalist image formation split out from AgX that only touches values outside the valid [0, 1] range of the target display medium.

AgX Image formation does two things

This config also comes with a different colorspace naming scheme, but backwards compatibility is set up through OCIO v2's aliases feature, so that texture colorspaces in old .blend files are automatically converted to the new names.

Three of the frequently asked space names are:

~~sRGB 2.2, this corresponds to the legacy sRGB~~

Linear BT.709 I-D65, this corresponds to the legacy Linear

Why?

Because the current Filmic has issues like the Notorious Six, meaning Filmic collapses all colors into six before attenuating to white. Filmic also cannot handle wider-gamut renders produced by a wider working space, spectral rendering, real-camera-produced colorimetry, etc.

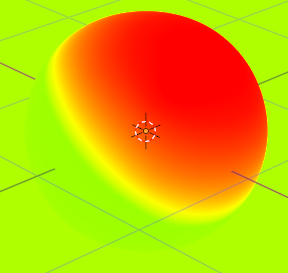

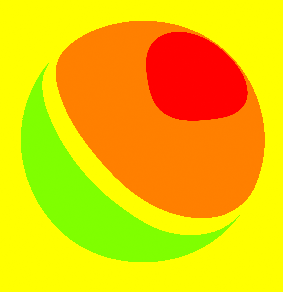

AgX (Left) vs Filmic (Right)

View Transforms

The config includes the following view transforms:

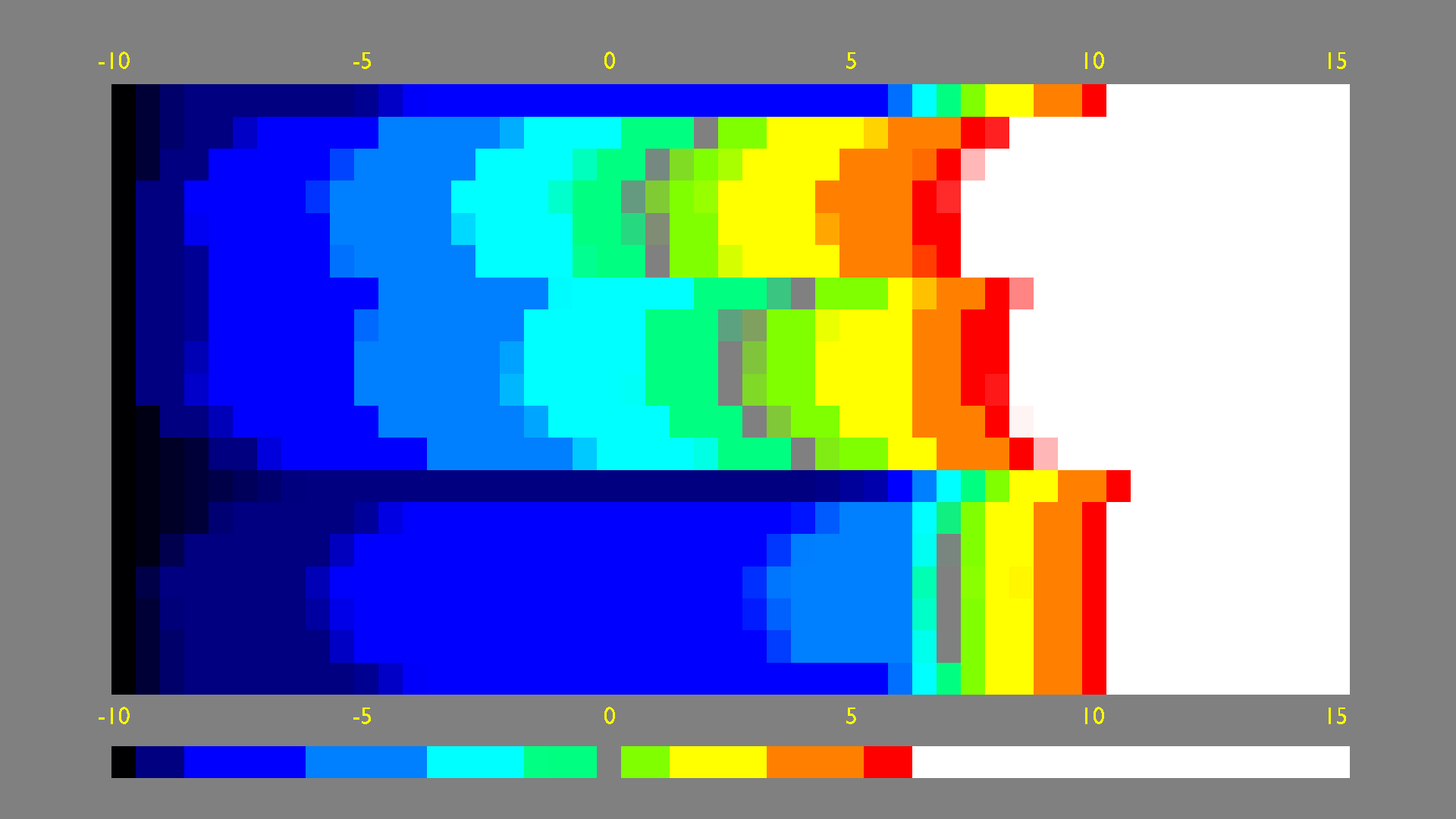

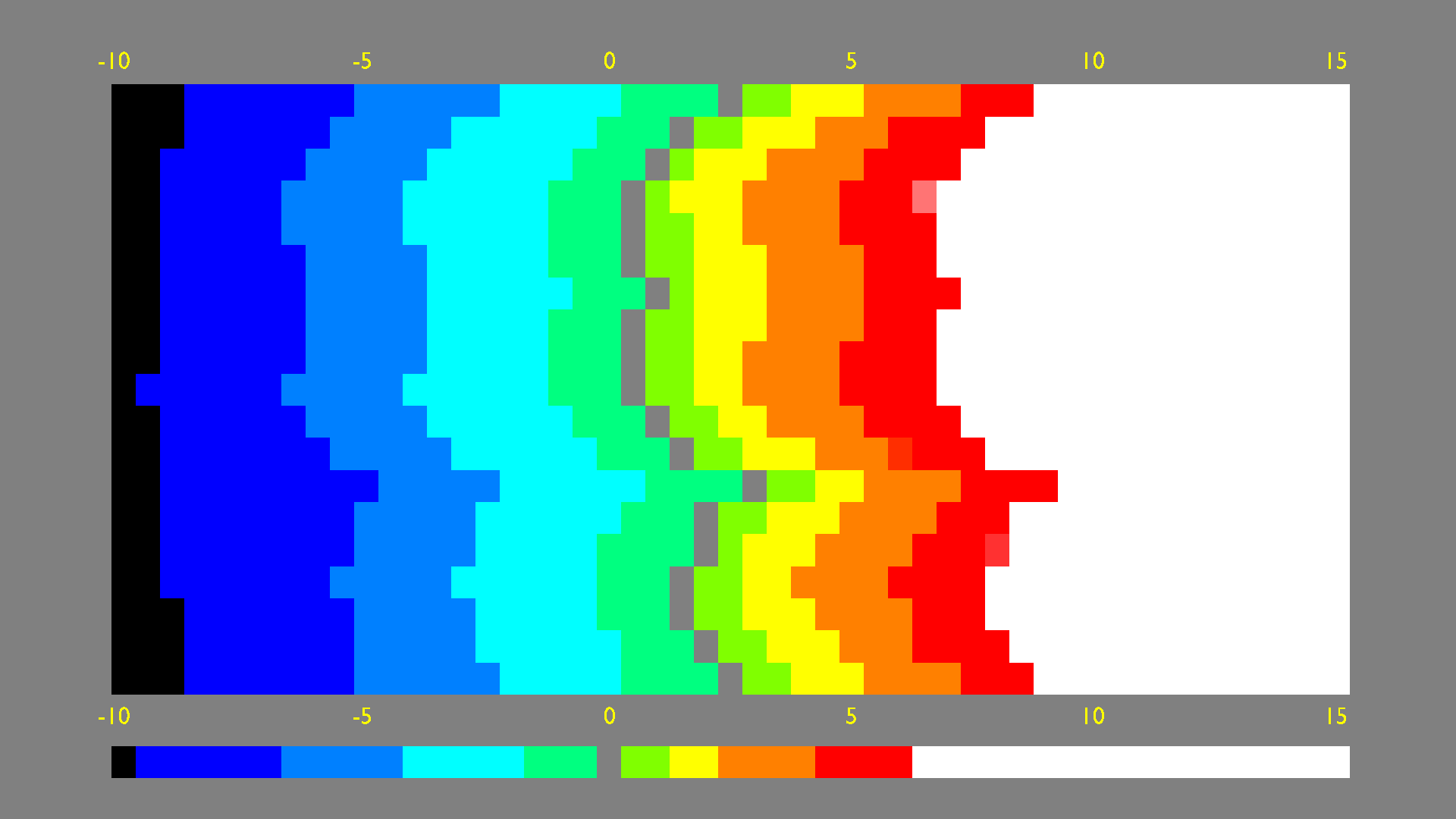

Guard Rail: The minimalist image formation that only touches "the invalid"; replaces Blender's legacy "Standard".

AgX: The Filmic-like sigmoid-based image formation with 16.5 stops of dynamic range.

AgX Log: The Log encoding with chroma-inset and rotation of primaries included. Uses BT.2020 primaries with Log 2 encoding from -12.47393 to 12.5260688117 (25 stops of dynamic range) and I-D65 white point.

AgX False Color: A heat-map-like imagery derived from AgX's formed image. Uses BT.2020's CIE 2012 luminance for luminance coefficients evaluation.

False Color ranges

Unlike the False Color in current Blender or the Filmic-Blender config, the false color here is a post-formation, closed-domain evaluation. All values below are therefore linearized 0-to-1 values written as percentages.

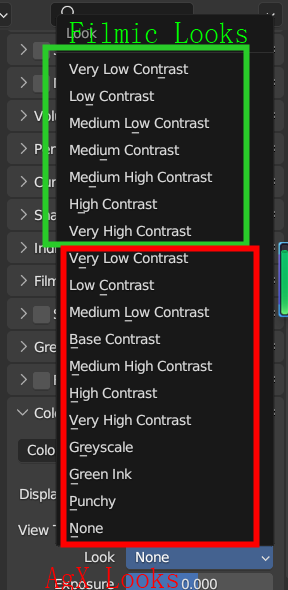
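For reference, the Log 2 range quoted for AgX Log under View Transforms can be checked numerically. The following is a minimal sketch that assumes the two bounds are log2 of the scene-linear value itself; under that assumption 0.18 middle grey sits 10 stops above the lower bound and 15 stops below the upper bound. The function names are hypothetical, and the sketch covers only the range mapping, not the chroma inset or the rotation of primaries.

```python
import math

# Bounds quoted in the PR description for AgX Log (25 stops in total).
LOG2_MIN = -12.47393
LOG2_MAX = 12.5260688117


def log2_encode(x):
    """Map a scene-linear value to [0, 1] (hypothetical helper)."""
    # Clamp to the lower bound so log2 never sees zero or negative input.
    x = max(x, 2.0 ** LOG2_MIN)
    v = (math.log2(x) - LOG2_MIN) / (LOG2_MAX - LOG2_MIN)
    return max(0.0, min(1.0, v))


def log2_decode(v):
    """Inverse of log2_encode for values inside the encoded range."""
    return 2.0 ** (v * (LOG2_MAX - LOG2_MIN) + LOG2_MIN)


middle_grey_code = log2_encode(0.18)  # lands at roughly 10/25 of the range
```

With these bounds, middle grey encodes to about 0.4, which is where the contrast looks described later would place their pivot in log space.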

Looks

"Looks" are artistic adjustments to the image formation chain.

Punchy: A contrast look that makes the image look more “punchy” by darkening the image.

Greyscale: Turns the image into greyscale. Luminance coefficients are BT.2020’s CIE 2012 values, evaluated in Linear state.

Seven Contrast Looks: Similar to Filmic’s contrast looks. All operate in AgX Log, with the pivot set at 0.18 middle grey. All use OCIO v2’s Grading Primary Transform feature, meaning you can customize your contrast just by editing the values in the config.

Colorimetric Information

Reference: Every OCIO config has its own reference space; all other spaces are defined by how they transform “from” and/or “to” the reference space. While Blender’s previous config has been using Linear BT.709 I-D65 as reference, this config uses 1931 CIE XYZ E white point chromaticity as reference. This is a sane decision, since CIE XYZ is the root of everything else related to color management. FilmLight’s TCAMv2 config also has CIE XYZ as reference.

AgX Base image formation space: The AgX in this config has one single image formed in the BT.2020 display medium; the images for other mediums are then produced from the formed image in BT.2020.

Supported Image Display Mediums:

sRGB: Generic sRGB / REC.709 displays with 2.2 native power function

BT.1886: Generic sRGB / REC.709 displays with 2.4 native power function

Display P3: P3 displays with 2.2 native power function. Examples include:

Apple MacBook Pros from 2016 on.

Apple iMac Pros.

Apple iMac from late 2015 on.

BT.2020: BT.2020 displays with 2.4 native power function.

It's very unlikely someone would use a BT.2020 2.4 display as of now, but since we have the image formed in BT.2020, supporting it is just a "why not?" thing to do.

Colorspaces

This config supports the following colorspaces:

Linear CIE-XYZ I-E: This is the standard 1931 CIE chromaticity standard used as reference.

Linear CIE-XYZ I-D65: This is the version of the XYZ chromaticity chromatically adapted to I-D65. The method used is Bradford.

Linear BT.709 I-E: Open Domain Linear BT.709 Tristimulus with I-E white point

Linear BT.709 I-D65: Open Domain Linear BT.709 Tristimulus with I-D65 white point

Linear DCI-P3 I-E: Open Domain Linear P3 Tristimulus with I-E white point

Linear DCI-P3 I-D65: Open Domain Linear P3 Tristimulus with I-D65 white point

Linear BT.2020 I-E: Open Domain Linear BT.2020 Tristimulus with I-E white point

Linear BT.2020 I-D65: Open Domain Linear BT.2020 Tristimulus with I-D65 white point

ACES2065-1: Open Domain AP0 Tristimulus with ACES white point

ACEScg: Open Domain AP1 Tristimulus with ACES white point

Linear E-Gamut I-D65: Open Domain Linear E Gamut Tristimulus with I-D65 white point

sRGB ~~2.2~~: sRGB 2.2 Exponent Reference EOTF Display

BT.1886 ~~2.4~~: BT.1886 2.4 Exponent EOTF Display

Display P3 ~~2.2~~: Display P3 2.2 Exponent EOTF Display

BT.2020 ~~2.4~~: BT.2020 2.4 Exponent EOTF Display

~~Generic Data~~ Non-Color: Generic data that is not color; will not apply any color transform

Note:

I-E is short for “Illuminant E”, I-D65 is short for “Illuminant D65”.

The use of I-E white point

The main reason for supporting the I-E version of the spaces is to be prepared for the upcoming Spectral Cycles. Spectral renderers with capability to input RGB textures require an I-E based RGB working space to ensure an error-free spectral reconstruction/upsampling process.

Note for using Eary’s AgX for Spectral Cycles: Remember to change the XYZ role to the I-E version of the XYZ chromaticity.
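The Bradford adaptation between the I-E and I-D65 versions of XYZ, named in the colorspace list, can be sketched in plain Python. This is an illustration rather than the config's actual matrix: the Bradford cone-response matrix is the commonly published one, and the D65 white point below is a commonly quoted XYZ value that may differ in precision from what the config uses. All helper names are hypothetical.

```python
# Commonly published Bradford cone-response matrix.
BRADFORD = [
    [0.8951, 0.2664, -0.1614],
    [-0.7502, 1.7135, 0.0367],
    [0.0389, -0.0685, 1.0296],
]

WHITE_E = [1.0, 1.0, 1.0]               # Illuminant E in XYZ
WHITE_D65 = [0.95047, 1.0, 1.08883]     # assumed D65 white in XYZ


def mat_vec(m, v):
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(3)]


def mat_mul(a, b):
    return [[sum(a[r][k] * b[k][c] for k in range(3)) for c in range(3)]
            for r in range(3)]


def inverse3(m):
    """Invert a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[x / det for x in row] for row in adj]


def bradford_adaptation(src_white, dst_white):
    """Return the 3x3 XYZ-to-XYZ matrix adapting src_white to dst_white."""
    src_lms = mat_vec(BRADFORD, src_white)
    dst_lms = mat_vec(BRADFORD, dst_white)
    # Scale each cone response by the ratio of destination to source white.
    scale = [[dst_lms[r] / src_lms[r] if r == c else 0.0 for c in range(3)]
             for r in range(3)]
    return mat_mul(inverse3(BRADFORD), mat_mul(scale, BRADFORD))


E_TO_D65 = bradford_adaptation(WHITE_E, WHITE_D65)
adapted_white = mat_vec(E_TO_D65, WHITE_E)
```

By construction the source white maps onto the destination white, so `adapted_white` matches `WHITE_D65` up to floating-point error; every other color shifts by a smaller, hue-dependent amount.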

610788d62b

Thanks, AgX looks great.

Filmic should still be included in the default Blender config for backwards compatibility, even if only supported for the sRGB display.

Color space names like "Non-Color" and "sRGB", and view names like "Standard" should not be changed. It can be debated what the right name is, but I don't see a reason to move to another naming convention, especially if that convention is different than other applications or the new standard OCIO configs.

The Filmic sRGB space is still there, but my concern is that people might use the Filmic view with AgX looks. They shouldn't be used together, so I only included the space and did not list it in the views.

Legacy names are in aliases so backwards compatibility should be good.
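The alias idea can be pictured as a simple name lookup. The sketch below is not OCIO's API; it only mimics the behavior in plain Python. The "Linear" pairing comes from the PR description, while the function name and the set of known spaces are hypothetical.

```python
# Legacy name -> current name. Only the "Linear" pairing is taken from the
# PR description; a real OCIO v2 config declares aliases per colorspace.
LEGACY_ALIASES = {
    "Linear": "Linear BT.709 I-D65",
}


def resolve_colorspace(name, known_spaces, aliases=LEGACY_ALIASES):
    """Return the current colorspace name for a possibly legacy name."""
    if name in known_spaces:
        return name
    target = aliases.get(name)
    if target in known_spaces:
        return target
    raise KeyError(f"Unknown colorspace: {name}")


known = {"Linear BT.709 I-D65", "sRGB", "Non-Color"}
resolved = resolve_colorspace("Linear", known)
```

A texture saved with the legacy name would resolve to the new name on load, while names that already exist pass through unchanged.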

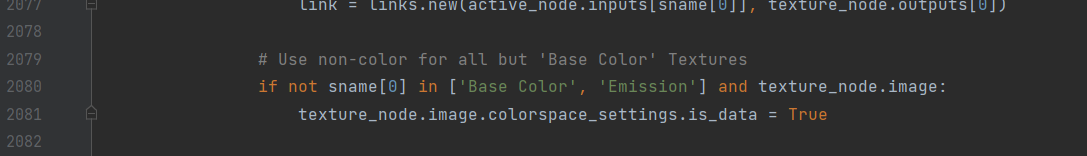

Non-Color has been one of the most misunderstood colorspace names; many people actually believe "Non-Color" means black and white textures. Generic Data actually states what it is: the space has isdata: true, and it has been referred to as data in the Python API as well. An example would be in Node Wrangler:

This is a good chance to get rid of unclear and easy-to-misunderstand names.

As for "sRGB 2.2", the reasoning is to make it clear that we are not using the piece-wise sRGB that crushes shadows for legacy CRT displays, and are instead using the power function for modern-style color management. But this one I am fine to change back to sRGB if you insist.

I have removed the power value number from names like sRGB, Display P3, etc. But for "Generic Data", I am unwilling to change it; the reasoning has been stated above.

Filmic must remain available as a view transform still for there to be backwards compatibility. Maybe something needs to be done to ensure looks are only available for appropriate view transforms.

I understand there are arguments for everything to be named one way or the other. But we have naming conventions and guidelines in Blender that should be followed, and that are not up for debate now. Color space debates tend to be very time consuming and I don't want to do that.

The convention is that we should name things by their purpose or meaning for users and not their technical implementation. We should also follow industry standard names when possible. Therefore, "sRGB 2.2" is too technical and we should not invent a new term like "Generic Data". These are just two examples, all names in the configuration should be evaluated based on this.

aces_interchange should be added to this configuration, and ideally cie_xyz_d65_interchange as well.

aces_interchange currently overwrites the XYZ role, causing problems for Spectral Cycles when accessing the XYZ I-E chromaticity. We can add it back after the XYZ chromaticity in the render engines is using the XYZ role again.

Render engines should use aces_interchange for determining XYZ chromaticity. Using the XYZ role was only done because there was no standard OCIO role to determine this. But now there is and that's what should be used.

I still disagree but I can add it back. Note that Spectral Cycles requires XYZ chromaticity with I-E white point, and the current aces_interchange role doesn't deal with that. Keep in mind we might need to deal with that in the future.

I believe I have addressed all the comments.

This would be a very welcome change.

For now though, since that is not yet available, should the Looks be named such that it's obvious what they connect to? So for instance

"Very High Contrast - Filmic"

"Very High Contrast - AgX"

or perhaps the reverse

"Filmic - Very High Contrast"

"AgX - Very High Contrast"

Otherwise, if both AgX and Filmic are there, it's gonna be difficult to differentiate which Looks to pick

The current config does not have Filmic looks in there, if you want to go down that route, you need to make sure all other views can still use those looks but for Filmic, the looks are empty. But from another angle, you can still use those Looks with Filmic, just keep in mind those looks were not designed with Filmic in mind therefore the High contrast look + Filmic is not going to look the same as it is in Blender 3.5.

Looks are artistic adjustments anyways, it's not as strict nor as technical.

The Filmic looks are needed for backwards compatibility. We also can't just change their name to add "- Filmic" since that breaks compatibility too. So some solution in the code is needed.

I've tested the version of AgX you published on your Github, and found a couple issues/regressions.

Main issues I've found are with picking colors.

For example inputting an RGB of 1.0, 0.0, 0.0 results in #FF150B, and inputting #FF0000 sets the RGB to 0.887905, 0.0, 0.0 as well as immediately changes the hex value. This doesn't occur in either Standard or Filmic in the "Vanilla" configuration.

Additionally while using Guard Rail, shadeless materials (emission shader with strength set to 1.0) do not display the actual color, as evident from color picking giving a slightly different value. This also didn't happen in Standard view transform in Vanilla.

Please let me know if this is intended/by design, and if so, how one can work around it. I can see this as a major issue for people who do flat graphics and video editing.

Hex values are not robust or universal. Rather the opposite: they are the enemy of proper color management, being colorspace-unaware and not tied to the root XYZ chromaticity. It's a bad idea to assume a hex code holds some universal meaning across all software, because it doesn't.

It's designed to be almost the same. It can be a little bit different for two reasons: 1. the current working space is I-E based, in preparation for Spectral Cycles, so the output result is adapted to I-D65 for the display device. 2. It can be because of LUT precision. Though it's still there, I have tried my best to minimize the LUT precision issue. I reckon people won't really see the difference, like between 1.0 and 0.99, by eyeballing.

I can assure you the difference is small enough:

I remember we had a hack back when Filmic was first introduced: adding "Filmic -" in front of the look name would make the look only appear when choosing Filmic. Maybe we can use that trick to bring back Filmic looks first, and then specify in the code to only show looks with "Filmic -" in front when choosing Filmic, so AgX looks won't appear when choosing Filmic?

I added back the Filmic Look LUTs, but not in the config yet. Will wait until the code specifies to only show looks with "Filmic -" in front when choosing Filmic.

Hex values in Blender should be sRGB. It doesn't mean every application necessarily interprets it that way, but it has a well defined meaning in Blender and should work as before.

Video editing should not be compromised by future plans for spectral rendering. It's not enough for the difference to be small enough that a visual inspection doesn't make it clear, there should not be subtle data loss in unexpected places at all. There can be some error from floating point precision, but 0.01 is too much.
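To put the 0.01 figure in terms of hex codes, here is a small sketch of 8-bit quantization. It assumes the values are already display-referred (encoded for the display) and in [0, 1]; the helper name is hypothetical and this is not Blender's color picker code.

```python
def to_hex(rgb):
    """Quantize display-referred RGB in [0, 1] to an 8-bit hex string."""
    channels = [min(255, max(0, round(c * 255))) for c in rgb]
    return "#" + "".join(f"{c:02X}" for c in channels)


exact = to_hex((1.0, 0.0, 0.0))    # "#FF0000"
shifted = to_hex((0.99, 0.0, 0.0))  # "#FC0000"

# One 8-bit code value spans 1/255 of the range, so an error of 0.01
# corresponds to roughly two and a half code values.
error_in_code_values = 0.01 * 255
```

An error of 0.01 in the encoded value is therefore large enough to change the hex code by several steps, which is why it shows up for anyone comparing picked colors.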

To take full advantage of spectral rendering a wider gamut is needed anyway. I don't think we should switch this one to be I-E based if it causes such issues.

I am sure none of these use cases will be negatively affected by the I-E working space. It's just the nature of any different working space: you can't expect the open-domain scene-linear value to be exactly what the view transform outputs. Take ACEScg as a working space: it has a non-CIE-standard, weirdly decided white point (it's not D60) with primaries no monitor can produce, so no view transform can output the values 1:1. Users just need to understand that outputting open-domain scene-linear values 1:1 through a view transform is not what we are looking for.

As far as replacing "Standard", the current Guard Rail will do the job. Guard Rail does not replace the technical sRGB conversion, it only replaces the view transform, the image formation.

Realistic rendering is not the only use case for Blender. If for example #FF0000 sets the RGB to 0.887905, 0.0, 0.0 that is a negative for important use cases.

We can add additional view transform, displays and color spaces now. However changing the working space is not something we can do without user control.

OK I can change the working space back to I-D65 for now. But note for Spectral Cycles, I-E working space is an absolute must, we are just delaying the decision to when Spectral Cycles's turn comes.

It feels like just letting the user switch between spaces (or maybe doing it automatically when switching to spectral mode, when that gets implemented?) is the obvious solution. Is there something that makes that hard to implement?

It's been said that:

@xZaki raised some concerns about this, but there's no data from a real-world NPR workflow on this PR yet. I've done some quick testing on this image, made in Blender using emissive shaders on grease pencil and mesh:

(Note that it's been heavily compressed for upload). I sampled the values of the skin tone, the dress, the earring metal, and the earring highlight both with Standard and with the proposed Guard Rail, using an external (non-Blender) color picker.

In every case except pure gray, Guard Rail causes significant changes to the color data.

Again, the goal of a view transform is not technical sRGB conversion; it is image formation. Therefore, as long as the result is visually extremely similar, it's acceptable.

Guard Rail is not meant to replace the technical sRGB conversion. I'll say it again. That's why I hide Guard Rail from the colorspace list.

Technically it's impossible to have the 3D LUT change nothing at all. I just tried increasing the 3D LUT resolution to 129 (the max resolution Blender supports, file size 63.4 MB) and it still wouldn't work like that. Even though I already set a "bypass if within [0, 1]" in the python script, the generated 3D LUT would not leave the colors completely unchanged; it's just impossible due to 3D LUT precision. It would work if I could set the "bypass the LUT if within [0, 1]" within the config instead of the python LUT generator, but sadly OCIO doesn't have this feature.

The inclusion of the "Standard" view transform has actually never been a standard practice. In fact, none of the ACES configs has it, and the TCAMv2 config doesn't have it either. Its existence should not be taken as a technical sRGB conversion.
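The precision limit described here can be illustrated with a much simpler 1D analogue. The sketch below is not the PR's LUT generator: it bakes an identity transform into a LUT that is indexed through a square-root shaper (a stand-in for whatever shaper the real LUT uses) and measures how far linear interpolation drifts from the input. All names are hypothetical.

```python
def build_lut(size):
    """Store the linear value at each grid point of a square-root shaper."""
    return [(i / (size - 1)) ** 2 for i in range(size)]


def lookup(lut, x):
    """Shape x, then linearly interpolate between the two nearest entries."""
    pos = (x ** 0.5) * (len(lut) - 1)
    i = min(int(pos), len(lut) - 2)
    t = pos - i
    return lut[i] * (1 - t) + lut[i + 1] * t


def max_error(size, samples=1000):
    """Largest deviation from identity over evenly spaced inputs in [0, 1]."""
    lut = build_lut(size)
    return max(abs(lookup(lut, k / samples) - k / samples)
               for k in range(samples + 1))


error_33 = max_error(33)
error_129 = max_error(129)
```

Raising the resolution from 33 to 129 shrinks the error considerably but does not remove it; only inputs that land exactly on grid points come back unchanged. A 3D LUT behind a nonlinear shaper behaves analogously, just in three dimensions.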

Again, think of a BT.2020 or ACEScg working space: in the former case you can only see the straight output on an HDR projector, and in the latter case you will never be able to see a straight output. The function of a view transform has never been "keep the color data unchanged".

At this rate, if you just want a simple sRGB conversion, doing it in the compositor or using the Gamma of 2.2 setting with the view set to none might be a better choice, though expect the latter solution to break when we eventually change the working space to something else. It's fine for now as the working space has been set to Linear BT.709 I-D65.

And be aware my GitHub version is still using the I-E working space. Use the version in this PR if you want the I-D65 working space.

Not for all use cases. The United Way, for example, has a zero tolerance policy for hex color deviations, and they color check every graphic. This PR makes Blender unusable for any case involving this organization, and that's just the one I am aware of.

Ok I added the sRGB display's native back as Legacy Standard. Design-wise I am not comfortable with this decision. I hope the community in the long run can get rid of the outdated practice of using the sRGB inverse EOTF as a view transform. Guard Rail is a step forward in this sense.

I just added a hack to compensate for Guard Rail sRGB's chroma loss from LUT precision. Plus I added back the Legacy Standard, so the issue regarding that should be over now.

It's not clear to me what Guard Rail does and what its purpose is, the name or description does not make it clear to me.

The main use cases we need to cover are:

In which use cases would you recommend using it, and to achieve which goal?

Renaming Standard to Legacy Standard breaks compatibility. And I don't think calling it "legacy" is good because I think it's legitimate to want to pass colors through Blender unchanged. The reason it was called "standard" is because it does exactly the standard EOTF and nothing else.

According to wikipedia, the study of image formation encompasses the radiometric and geometric processes by which 2D images of 3D objects are formed.

That is not the only purpose of view transforms and color management in Blender. You also want to be able to take as input an image that has already been formed or that never was based on 3D objects to begin with, edit it, and then output it to the same or another display device without changing the look in any way.

Guard Rail's design principle is to keep the valid range of [0.0, 1.0] completely the same as "Standard", and deal with out-of-display-range values gracefully. Therefore in theory, in our original design, it would have been a perfect replacement for "Standard"; it's supposed to be the "Standard" that does not produce the Notorious Six.

Guard Rail is part of AgX, in that AgX's BT.2020 version needs to go through the "negative handling" part of Guard Rail before going through the sigmoid, and then the sRGB version of AgX is produced from the BT.2020 version going through sRGB's Guard Rail. Guard Rail is our tool to deal with out-of-display-range values.

The problem though, as I explained, is that a 3D LUT doesn't take in a value and output it as if nothing happened. Although our color processing in the python script did keep the [0.0, 1.0] range completely the same as "Standard", when we produced a 3D LUT from it, the LUT precision shifted the color just a little bit. Visually the difference is small enough to be ignored in most cases, but for people who use color pickers and compare hex codes, it's surely different.

I compromised now, I renamed Legacy Standard back to Standard, and Guard Rail back to Guard Rail.

I would still say, for people that stopped using Filmic because it's "too grey", try Guard Rail out, you may like it.

I don't understand how it can be both exactly the same as standard in the [0.0, 1.0] range, and still give significantly different Hex colors which are always within the [0.0, 1.0] range.

Unless that was all explained by the I-E and I-D65 difference and the Guard Rail in this config gives exactly the same Hex color (or maybe off by one bit due to some precision issue).

If so we may only need one, or consider making Guard Rail the default in the grease pencil template.

Again, it's because of 3D LUT precision. If there is a way we can implement it algorithmically instead of using a baked 3D LUT, it would be exactly the same. But for now we can only use the LUT.

But it would still be a valid option as a view transform, especially for those people on DevTalk saying their renders are ruined by Filmic "greying out". AgX uses the same kind of sigmoid so it would still "grey it out"; Guard Rail would be a good option for them.

This is a good idea actually, especially as GP can be influenced by lighting and get values above 1.0. Or the future potential of wider gamut painting? Guard Rail also deals with wider gamut gracefully.

810a49c241

Made a mistake

If the only remaining difference is the 3D LUT precision, I imagine it's possible to improve the precision by factoring the EOTF out of the LUT. Or maybe doing a remapping before and after the LUT to allocate a bigger portion to the 0..1 range.

The 2.2 EOTF is already out of the LUT. Remapping before and after the LUT is already there as well. I decided not to use the log 2 curve because people reported that it caused a shift of the 0.0 lower bound value due to precision. I am now using the St.2084 10nits curve + 1.5 power curve, which seems to work the best so far.

We also need the LUT input encoding to be rather wide gamut, especially to include those negative-luminance nonsense virtual values that real cameras tend to produce, therefore I used E-Gamut. But the larger input gamut also takes a toll on precision.

Will continue to investigate better options.

It may be possible to align 0 and 1 exactly to grid vertices in the 3D LUT in such a way that values in that range get exactly linearly interpolated, making precision error very small.

I tried a method to minimize the precision error; the error should now be extremely small. Testing is welcome.

A tester has reported posterization in wider gamut after the matrix commit. Will revert it.

83e5bd2a8f

I updated the LUTs to improve the LUT precision.

Trying out the new version (should've really uploaded it to your github, was a bit of a pain to download each file individually). Omitted the lowercase "luts" folder since Windows physically didn't let me make it in the same folder as LUTs.

Precision on Guard Rail has seemingly improved.

Standard while using the AgX config is very slightly less precise than in Vanilla Blender, though that might be due to me being unable to replicate the config exactly, as stated above.

Overall I do hope algorithmic LUTs can be attempted at some point as previously mentioned, but time for 3.6 is running short, and I do really hope AgX can still make it into this release.

It's not "less precise", I have already mentioned before but again, it's a design decision to:

If you use sRGB textures, the import and export should be the inverse of the same function, so there should be no change regarding that. If you are directly inputting the linear sRGB values, the end result you see on the monitor is either:

sRGB 2.2 values being displayed on a piece-wise calibrated monitor: shadows get a tiny bit brighter.

sRGB 2.2 values being displayed on a 2.2 calibrated monitor: displayed as intended.

VS if we use piece-wise function:

I remember Filmlight did a survey about whether people calibrate to 2.2 or piece-wise more, the result, IIRC, was that most people nowadays calibrated their monitors to pure power function, instead of piece-wise function.

Also, shadows looking a bit brighter seem to be a better risk to take than crushed shadows, it's also a risk that is less likely to happen since most people calibrate their monitors to pure power function.
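The two mismatch cases can be checked with the published sRGB piece-wise formulas and a pure 2.2 power function. This is a minimal sketch with hypothetical function names; it models a single shadow value and ignores everything else in the display chain.

```python
def srgb_piecewise_encode(x):
    """Piece-wise sRGB encoding of a linear value in [0, 1]."""
    return 12.92 * x if x <= 0.0031308 else 1.055 * x ** (1 / 2.4) - 0.055


def srgb_piecewise_decode(v):
    """Piece-wise sRGB decoding, as a piece-wise calibrated display would do."""
    return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4


def power_encode(x, gamma=2.2):
    """Pure power-function encoding."""
    return x ** (1 / gamma)


def power_decode(v, gamma=2.2):
    """Pure power-function decoding, as a 2.2 calibrated display would do."""
    return v ** gamma


shadow = 0.001  # a dark scene-linear value

# 2.2-encoded image shown on a piece-wise calibrated monitor.
brighter = srgb_piecewise_decode(power_encode(shadow))

# Piece-wise encoded image shown on a 2.2 calibrated monitor.
crushed = power_decode(srgb_piecewise_encode(shadow))
```

For this shadow value the first case comes out brighter than intended and the second comes out darker, which matches the trade-off described in the comment above: mildly lifted shadows on one side, crushed shadows on the other.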

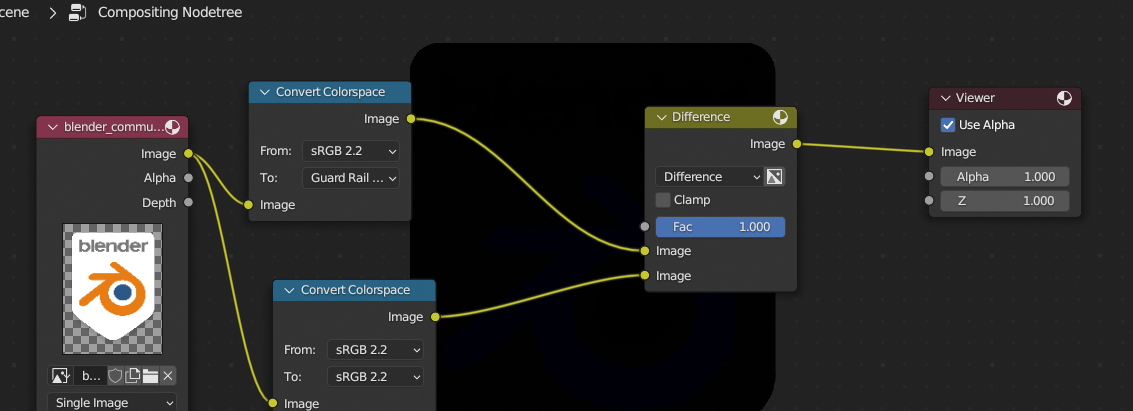

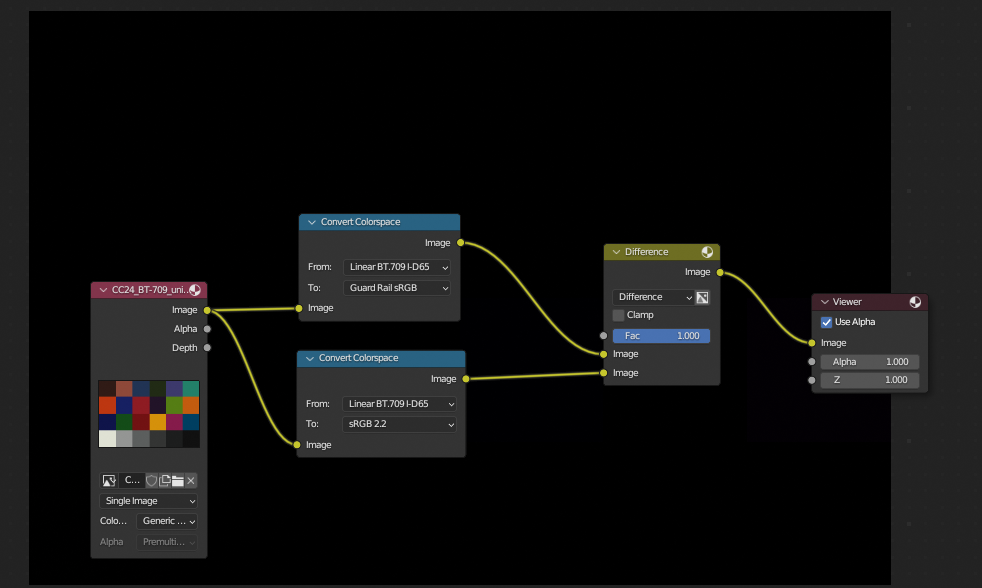

I believe I have done all I can do right now. If I am not mistaken, the only thing left is Filmic Looks. I have been waiting for the code change Brecht mentioned, since if I just do anything in config without code change, this would happen:

Both Filmic Looks and AgX Looks are present at the same time when choosing Filmic, which is confusing.

I think they need to just implement a "hide non-Filmic looks when using Filmic" or something. I remember the same thing being done in 2.79, though I'm not really sure because nothing has been confirmed to me yet regarding how they are trying to do this.

@brecht Am I correct on the plan regarding Filmic Looks?

Just for the record, AgX is perfectly ready from my point of view. The only thing I am waiting for is the code change that Brecht mentioned, so that we can bring back Filmic Looks. After that, at least from my current understanding, we should be able to merge immediately. I have been modifying things according to requests all along; if Filmic Looks is not the only thing left, I don't know what we are waiting for.

Or if we can delay it to 4.0 or some version where we can drop some backwards compatibility, in that case we can just drop the support for Filmic Looks. It's not that the new contrast looks are going to be very very very different from Filmic's contrast looks anyways.

But I need confirmation on the plan we are going towards.

AgX False Color is completely posterized, unlike in vanilla Blender where it has a smooth transition. I assume this isn't intentional.

It is actually intentional, the entire point of the false color in the first place is to posterize for better visualization of different zones.

The previous False Color Blender used was a 3D LUT. The 3D LUT might create some smooth transitions because of the 3D LUT's precision issue. After changing to a 1D LUT, the intentional posterization is kept more cleanly, though you can still see some smoothness in the extremely low ranges.

@Eary AgX looks absolutely awesome!

Apart from that, given that False color in Blender still appears to be different from the Filmic master on GitHub #98405 shouldn't we also invite @troy_s to clear things up?

Otherwise I have the feeling that adding yet another View Transform is going to result in Color Management chaos for the average user: in addition to the 2 already existing versions of Filmic, there will be AgX, which for most users will also look like some version of Filmic.

I made AgX False Color completely from scratch, with our previous conversation about False Color in mind. Specifically, the previous False Color has a problem with the luminance coefficients being evaluated in Log state instead of Linear state. We had also talked about how it could have been just a 1D LUT.

And this is exactly what I did in AgX False Color, for reference, here is Filmic False Colour with Luminance Coefficients evaluated in Filmic Log:

Here is AgX False Color done in post-formation Linear state:

It is different from the Github's Filmic False Colour, but it makes more sense.

Filmic is there in the menu for backwards compatibility reasons. Users are encouraged to use AgX once it's merged, unless their personal aesthetic taste somehow dislikes AgX; I guess it's fine then.

If chaos is a concern then maybe just hide Filmic by default under experimental as a toggle, like the Legacy Undo?

Adding back Filmic to the view transform menu was requested by Brecht. I believe the idea is to keep old .blend files completely the same as before, so that Filmic can be auto-selected upon opening old files.

@Eary Personally I don't see a reason why Filmic should be removed. It works and is liked by many people, and sure, AgX may have its advantages over Filmic, but some people may just prefer the look of AgX or Filmic.

So I think that simply adding AgX to the View Transforms is better than outright replacing Filmic. It's not like we've got that many options to choose from right now anyway.

A while ago I stumbled upon Feedback / Development: Filmic, Baby Step to a V2? I take that this is the Pull Request relating to it?

Not sure what we are arguing here. As I said, "adding back Filmic to the view transform menu was requested by Brecht", and that request has been fulfilled for a couple of months now.

Yes.

I'm not trying to argue with you, I just wanted to say that I also think that it was the right choice. :-)

So, are we going to have AgX (Filmic v2) in the Blender 3.6 release?

Blender 4.0 at the earliest.

If you want it you can download the the files of this PR and replace the existing config with them, problems will just arise if you give away the files you make because it will reset to standard for those who don't have a matching config.

@brecht @pablovazquez I want to get some confirmation: is there another dev in charge of the UI who can help with the Filmic Looks' view-based filtering? I honestly have no idea how to change the Blender UI side of things.

For the record:

Looking at 3cd27374ee (diff-12aec181a13f7921c34e80fae1a1340814469586), 9f4b090eec, and https://projects.blender.org/blender/blender/src/branch/main/source/blender/imbuf/intern/colormanagement.cc#L3210, I was able to figure out that you can limit looks to a specific View by adding the View and a hyphen separator as a prefix to the Look Name. So `Filmic - Very High Contrast` and `AgX - Very High Contrast`.

Thanks but again the current situation is:

Try it.

Note that by adding the `AgX -` prefix, the looks won't show up under AgX Log, Guard Rail, etc. EDIT: which is a problem. It would be better if we could add looks to multiple views, like how OCIO added the ability to add shared views to several displays in v2.0.

Ok I see what you are doing.

But this doesn't sound ideal. I would like `AgX Log` and `Guard Rail` etc. to be able to use the looks. I guess this approach can be our last resort if the further UI modification doesn't work.

After thinking a bit more, I decided to take the method for now, until we have something better. It's committed. At least Filmic looks are back in the menu and they are not mixed together with AgX ones now.
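To make the naming rule discussed above concrete, here is a minimal Python sketch of prefix-based look filtering. It is not Blender's actual implementation (that lives in `colormanagement.cc`); the function name `look_is_visible` and the list of views are hypothetical, and the behavior for un-prefixed looks (shown under every view) is an assumption based on how `Greyscale` is described in this thread.

```python
def look_is_visible(look_name, view_name, known_views):
    """Return True if a look should be listed for the given view.

    Rule sketched here: a look named "<View> - <Look>" is shown only
    for that view. A look without a recognised view prefix is assumed
    to be shown for every view.
    """
    prefix, separator, _ = look_name.partition(" - ")
    if separator and prefix in known_views:
        return prefix == view_name
    return True


# Hypothetical view list, for illustration only.
VIEWS = ["Standard", "Filmic", "AgX", "AgX Log", "Guard Rail"]

if __name__ == "__main__":
    for view in VIEWS:
        shown = [
            look
            for look in ("AgX - Very High Contrast",
                         "Filmic - Very High Contrast",
                         "Greyscale")
            if look_is_visible(look, view, VIEWS)
        ]
        print(view, "->", shown)
```

Under this rule `AgX - Very High Contrast` is hidden from `AgX Log` and `Guard Rail`, which is exactly the limitation raised above.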

I kept `Greyscale` in there, since it also works for Filmic.

I was testing and digging into this patch quite a bit today. The AgX view makes things look so much more interesting! Would really be nice to finish this project for Blender 4.0.

Before going to some technical points, I'd like to mention that backwards compatibility is important. We value it a lot. So even though it is a new major Blender version in the works, we can not that easily break things. It is forward compatibility which is allowed to be broken, but even that has some limits. You can read more about exact details in the Compatibility Handling Wiki page.

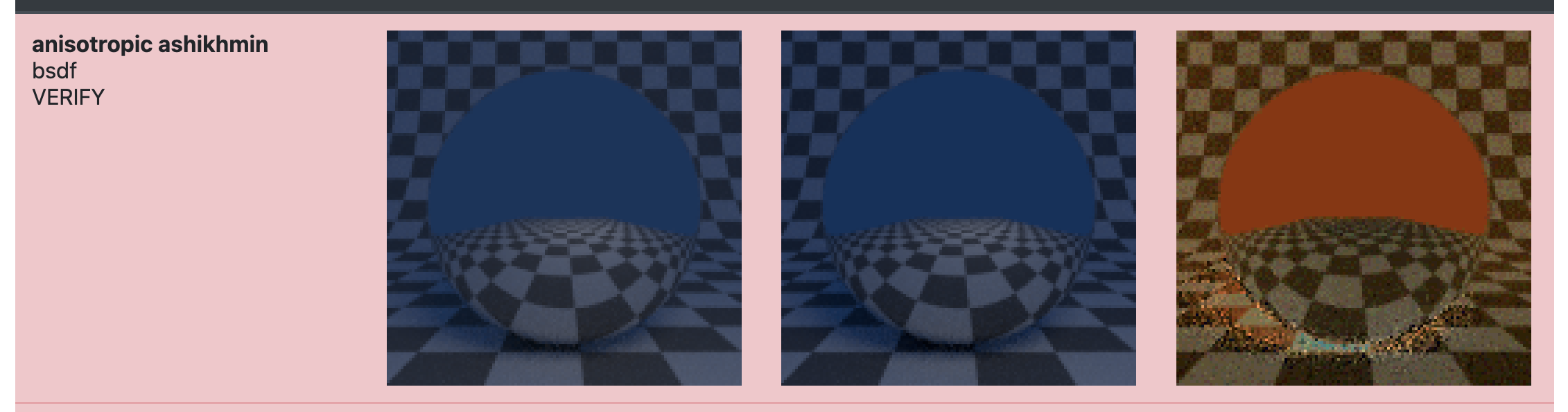

One of the first things I did was to run the regression tests, and a lot of them failed. A lot of images now render noticeably brighter, even though they do not use any image textures and use the Standard view transform. For example, the anisotropic ashikhmin test from the BSDF render collection:

Not entirely sure yet which exact change of this patch leads to this brightening.

Another test I did was with some of the Tears of Steel frames (beware: it is quite a big EXR, just some random HDR I had around). Simply opening the file shows quite a big change:

This is interesting because neither view nor look transform are supposed to be applied. Additionally, enabling `View as Render` and `View Transform` set to `Standard` does not change the look in the main branch, but changes the look by quite a lot with this patch. My speculation is that it is due to the sRGB space having a higher clamping value (in the main branch it only goes up to 4.875, not sure yet what it goes up to in the PR). Having a wider sRGB range would be beneficial for some other work (perhaps the EDL story in #105662, for example), but it is worth ensuring that the difference is indeed caused by it.

Another thing I've noticed on the interface is the additional options for the linear space. I think it is useful to extend the available options, but to me it is not immediately clear why they are coupled to the AgX view.

On a review side I really think we need to break things down into smaller verifiable steps. It will speed up troubleshooting of regressions, and will allow things to go to main sooner, as well as will help bisecting possible regressions.

The way I see the breakdown is:

Thing I am not sure about is the reference space change. I am not sure whether it is somehow required for the AgX transform to work, or whether it is more of a future-proofing for possible spectral story. If it is the former I'd really appreciate having details about it. If it is the latter, it would need to be a separate point in the break-down list.

I can see that this might sound like a lot of extra work, and that the final state will probably end up being somewhat close to the current state of the patch. But I believe it is worth it: it will actually save time while nailing down known regressive changes, and will help nail down possible regressions discovered later. I did experiment with porting a minimal set of the patch to the current configuration and it wasn't bad at all!

What do you think about it? Anything I am missing? Do you think it is something you can help us with?

I think the exrs in the PR demonstrate it quite well. I'm guessing you mean it should be fewer than that. How many, do you think, would be a good number?

Right now it's three exrs from renders and three "real" ones (i.e. photos/video stills)

Thanks for the testing!

I believe this is due to the fact that we switched to the pure power function sRGB in this patch, rather than the piece-wise sRGB we have been using. If we decide to change it back to piece-wise, I can do that quite easily, but I also want to type out my reasoning here for the record.

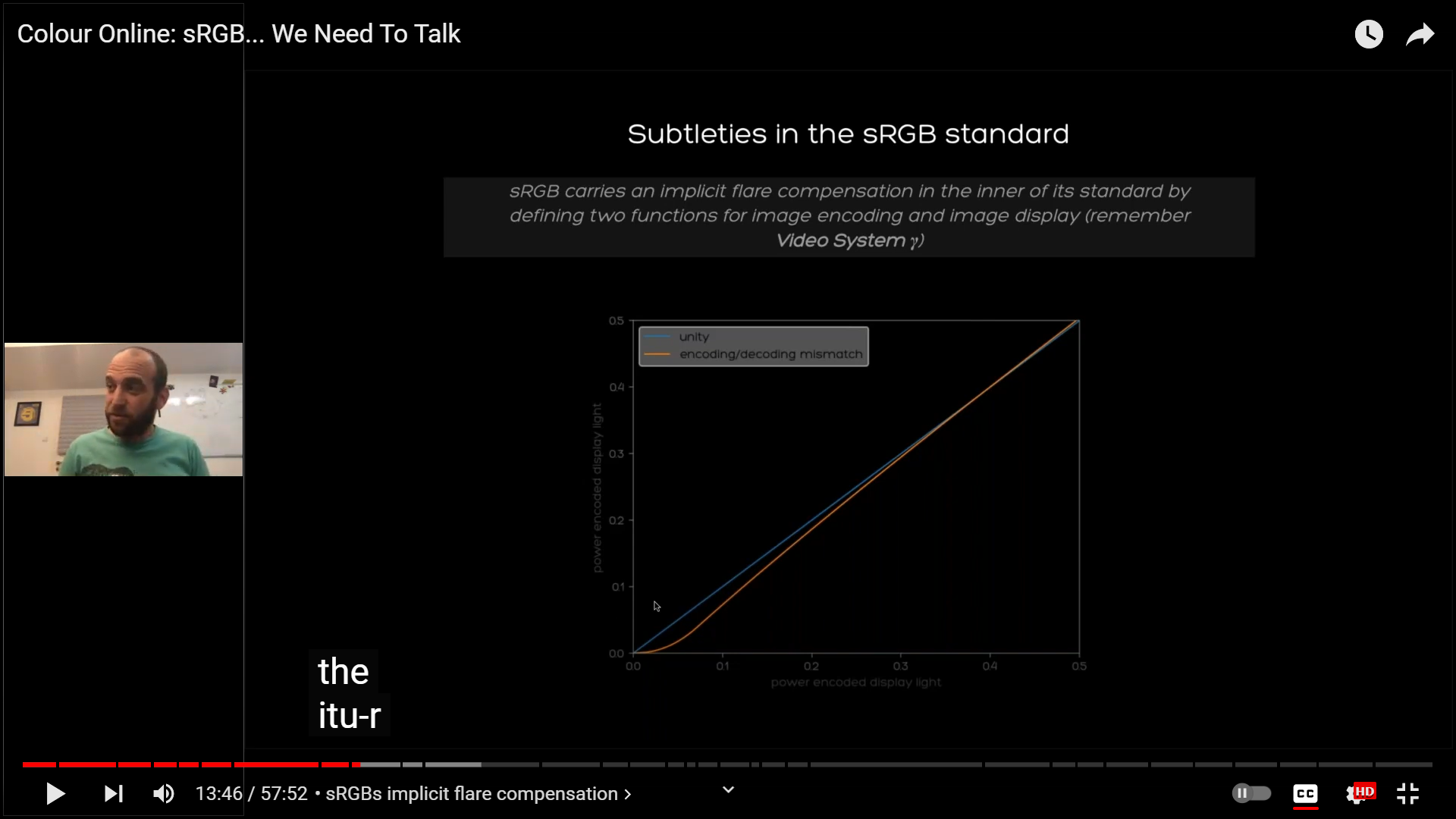

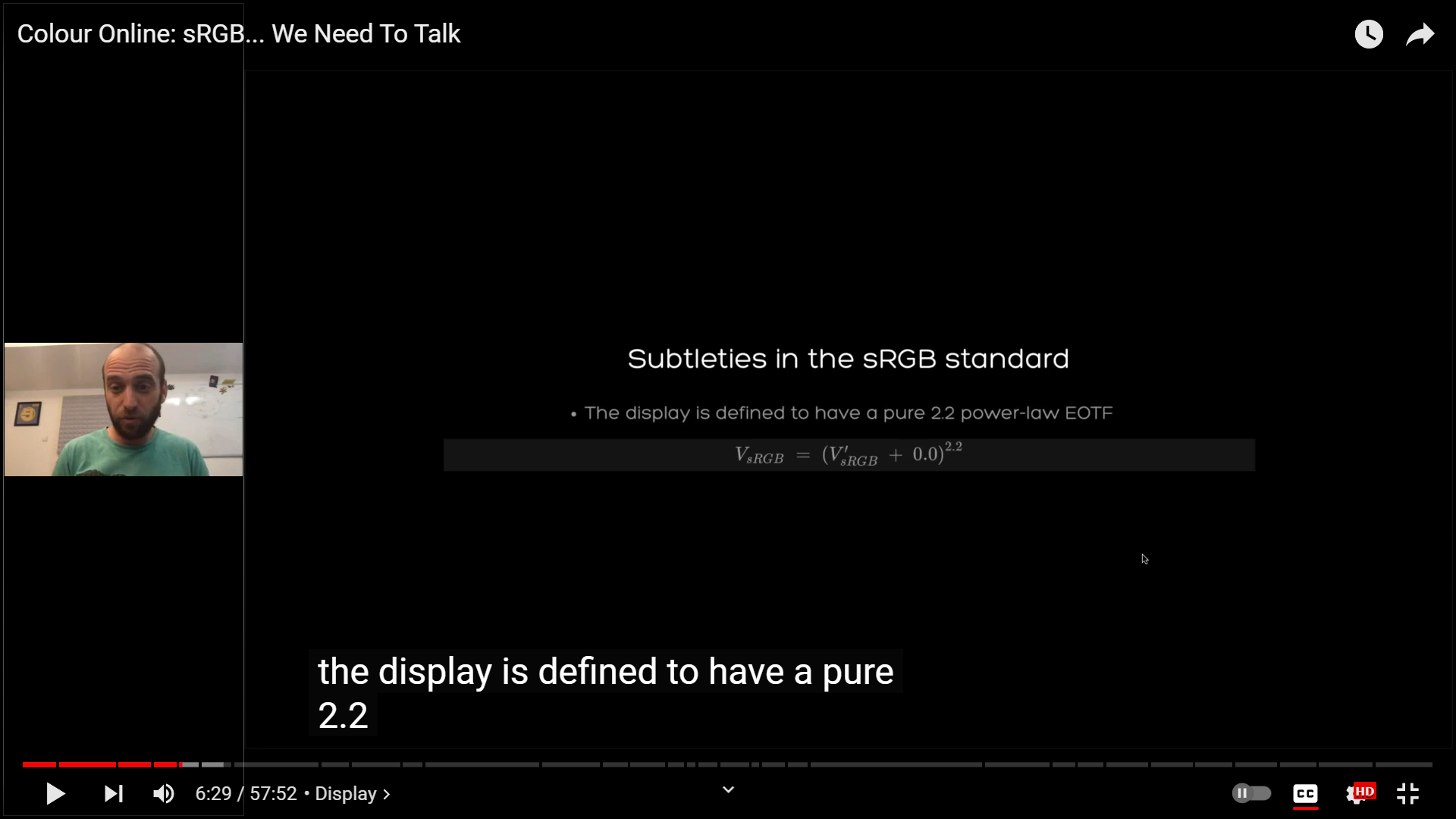

FilmLight has a video on the topic: https://www.youtube.com/watch?v=NzhUzeNUBuM&ab_channel=FilmLight

The sRGB standard specifies a pure power 2.2 display device, but a piece-wise (linear segment plus 2.4 exponent) function for the file encoding. The reason is to implicitly include flare compensation for CRT displays. It's explained in the video I posted above:

The result, as I have written here before, would be:

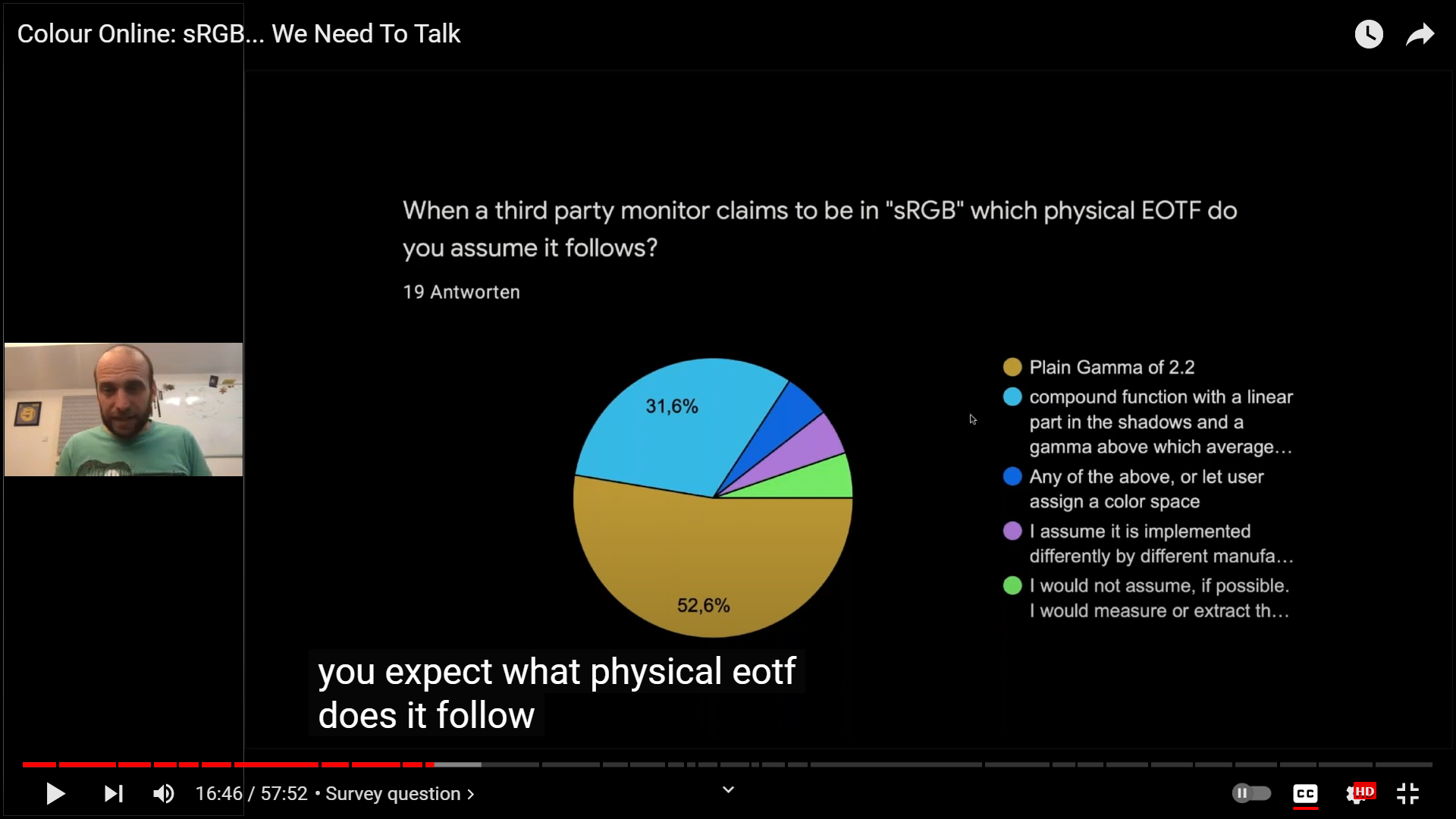

FilmLight also included a survey they did:

From the survey, most people assume sRGB to be pure power 2.2. Therefore most people actually saw the shadows get crushed by default when using piece-wise sRGB.
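A small Python sketch of the mismatch being described: values encoded with the piece-wise sRGB function and then shown on a display that decodes with a pure 2.2 power function. The piece-wise constants are the ones from the sRGB specification; the function names are made up for this illustration, and the sample inputs are arbitrary.

```python
def srgb_piecewise_encode(x):
    """Piece-wise sRGB encoding (linear segment plus 2.4 exponent)."""
    if x <= 0.0031308:
        return 12.92 * x
    return 1.055 * x ** (1.0 / 2.4) - 0.055


def power22_encode(x):
    """Pure power 2.2 encoding."""
    return x ** (1.0 / 2.2)


def power22_decode(v):
    """Pure power 2.2 display response."""
    return v ** 2.2


def displayed_light(x):
    """Linear light from a pure 2.2 display fed piece-wise sRGB code values."""
    return power22_decode(srgb_piecewise_encode(x))


if __name__ == "__main__":
    for linear in (0.001, 0.01, 0.05, 0.18, 1.0):
        print(
            f"scene {linear:.4f}  "
            f"piece-wise code {srgb_piecewise_encode(linear):.4f}  "
            f"2.2 code {power22_encode(linear):.4f}  "
            f"shown on 2.2 display {displayed_light(linear):.5f}"
        )
```

For dark inputs the displayed value comes out below the scene value, which is the shadow crushing mentioned above. Conversely, the pure 2.2 encoding produces higher code values in the shadows than the piece-wise one.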

That's why I believe moving to pure 2.2 is a good choice. I also made it explicit that I was using a 2.2 curve by naming the space `sRGB 2.2`, but Brecht requested to rename it back to `sRGB`, therefore I did.

I would like to confirm the decision: are we reverting back to piece-wise sRGB? If the answer is yes, will commit.

I believe this is because the `Guard Rail` view transform has been set to the first one on the list, and that was an intentional choice as well. Again, if it's requested to put `Standard` back to the first on the list, it's an easy change. But I again would like to type out my reasoning for the record.

This one is simpler, because I would like users to see this by default when dragging their HDRI to the image editor:

Instead of this skewing to cyan sky:

Since `Guard Rail` means the fence on the edge of the cliff to prevent colors from falling into accidental skewings, it was designed to be the default. It also doesn't impact the texture preview functionality so I thought it should be fine.

But again, if you confirm we still want `Standard` to be the default, will commit.

Partially for the spectral branch. But it's also more straightforward for the definition of the color spaces.

If we want to define the BT.2020 space in the config shipped with 3.6, we need to convert Linear BT.709 to Linear CIE-XYZ I-D65, and then apply the standard XYZ to RGB matrix for BT.2020. Practically you can of course combine the two matrices into one mathematically, but in principle, CIE XYZ is where all RGB spaces are defined, down at the root. So it makes more sense to work from the ground up, instead of climbing down from the BT.709 tree branch to the XYZ root and then climbing up to another space's branch every time. The use of the I-E white point is also meant to provide the option needed for the spectral branch. It's also more reasonable because the actual CIE standard XYZ has always been I-E (Resolve's CIE XYZ space is also I-E. If a user exports an EXR with CIE XYZ encoding and wants to import it into Resolve, they need to use the I-E one).
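The "down to XYZ, then up to another space" route can be sketched in plain Python. This is only an illustration, not the config's actual matrices: it derives RGB to XYZ matrices from the published BT.709 and BT.2020 primaries with a D65 white point, and all helper names are hypothetical. The I-E reference and the chromatic adaptation it would need are deliberately left out.

```python
def xy_to_xyz(x, y):
    """Chromaticity (x, y) to XYZ with Y normalised to 1."""
    return [x / y, 1.0, (1.0 - x - y) / y]


def inverse(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    return [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def apply(m, v):
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def rgb_to_xyz_matrix(primaries, white):
    """Build the RGB -> XYZ matrix so that RGB (1, 1, 1) maps to the white point."""
    cols = [xy_to_xyz(*p) for p in primaries]
    p = [[cols[j][i] for j in range(3)] for i in range(3)]  # primaries as columns
    scale = apply(inverse(p), xy_to_xyz(*white))
    return [[p[i][j] * scale[j] for j in range(3)] for i in range(3)]


D65 = (0.3127, 0.3290)
BT709_PRIMARIES = [(0.64, 0.33), (0.30, 0.60), (0.15, 0.06)]
BT2020_PRIMARIES = [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)]

BT709_TO_XYZ = rgb_to_xyz_matrix(BT709_PRIMARIES, D65)
XYZ_TO_BT2020 = inverse(rgb_to_xyz_matrix(BT2020_PRIMARIES, D65))

# The two steps collapse into a single matrix, as noted above.
BT709_TO_BT2020 = matmul(XYZ_TO_BT2020, BT709_TO_XYZ)

if __name__ == "__main__":
    for row in BT709_TO_BT2020:
        print(["%.4f" % value for value in row])
```

With XYZ as the reference, each additional space only needs its own pair of to/from XYZ matrices instead of a matrix routed through BT.709.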

If we are requested to revert the reference space back to Linear BT.709 I-D65, it can be done, but a bit more troublesome than the first two items.

So to summarize the decisions we need to make here:

`Guard Rail` or `Standard`?

I would modify the breakdown to be:

(If I understand correctly you mean I need to upload the EXR files)

With that said, I am still a bit confused as to what we are making this list for.

Do you mean we will discuss the points on the list in the review process? I am confused.

After reading a bit more times, I think I understand now.

Do you mean we need to start from the config currently in the main, and port this config to the main with small little steps? And each step will be their own separate PR, this PR will be just a big reference page for those smaller PRs?

If that's the case, the order of the steps will need to be re-arranged:

This includes: nuke_rec709, lg10, XYZ display device and its standard view colorspace (there simply isn't a monitor you can buy on Amazon or Best Buy etc. that claims to be XYZ colorspace)

The `None` display device has duplicated functionality as the `Raw` view in `sRGB` display, will remove `None` device and rename `Raw` view to be `None` view instead.

(`None` view transform is also very straight forward, it's simply "No view transform applied")

As an extension of that, the `Raw` and `Non-Color` are duplicates of each other, we need to remove one of them. Since users are more familiar with `Non-Color`, and it's also the one assigned as the `data` role, will keep `Non-Color` and remove `Raw`.

Will change the `None` view (formerly `Raw` view) to use the `Non-Color` space. Will also add `Raw` as an alias of `Non-Color`.

step 2. Use OCIO's built-in functionality for sRGB, includes changing sRGB to be un-clipped ̶,̶ ̶a̶n̶d̶ ̶a̶l̶s̶o̶ ̶u̶s̶i̶n̶g̶ ̶p̶u̶r̶e̶ ̶p̶o̶w̶e̶r̶ ̶2̶.̶2̶

step 3. refactor the config's transform tree to be XYZ I-E based.

The design decision has been discussed in this PR, XYZ I-E is the actual CIE standard, it's to prepare for Spectral Cycles, but this will also make the next step more straight forward.

This includes changing the `xyz_D65_to_E.spimtx` to use the more accurate `Bradford` method instead of `XYZ Scaling`, and also adding `xyz_E_to_D65.spimtx` since the opposite direction uses a different matrix.

step 4. add more color spaces. Renaming of existing spaces is required, for example, the name `Linear` makes no sense when there are a bunch of Linear spaces.

Will include aliases for backwards compatibility

step 5. Add AgX view and its components.

Some components are added as reusable utility parts for view transforms to use, will use OCIOv2's `inactive_colorspaces` to hide them from UI.

This also includes adding the extra display device support, and replacing False Color with new one, as well as AgX looks.

A̶l̶s̶o̶ ̶s̶h̶r̶i̶n̶k̶ ̶F̶i̶l̶m̶i̶c̶ ̶d̶e̶s̶a̶t̶ ̶L̶U̶T̶ ̶s̶i̶z̶e̶ ̶d̶o̶w̶n̶ ̶w̶i̶t̶h̶ ̶n̶o̶ ̶q̶u̶a̶l̶i̶t̶y̶ ̶l̶o̶s̶s̶.̶ ̶(̶N̶e̶e̶d̶ ̶t̶o̶ ̶m̶a̶k̶e̶ ̶r̶o̶o̶m̶ ̶f̶o̶r̶ ̶A̶g̶X̶ ̶L̶U̶T̶s̶,̶ ̶t̶h̶e̶y̶ ̶a̶r̶e̶ ̶q̶u̶i̶t̶e̶ ̶l̶a̶r̶g̶e̶)̶ ̶

step 6. Shrink Filmic desat LUT size down with no quality loss. (Need to make room for AgX LUTs, they are quite large)

Will wait for your answers to all these questions asked, and to the steps here, to make sure we are on the same page before I start to work on this. Especially step 1 and 2, where I remove old stuff.

@kram1032 For the EXR files story. The picture in the PR indeed demonstrates the difference well. What I meant is that it would be handy to have the original EXRs which were used to show the difference. The reason is that I believe we should move changes in incremental steps. It will help speed up the overall process. Having a common set of images would help with this, as then we can more easily verify the result, and if some issue is uncovered we have the same repro case.

@Eary Thanks for the explanation.

Ah, good thought! I did not realize it causes such big visual difference.

I can totally see your reasoning for such a change, but, unfortunately, there are still lots of areas of Blender which do not use the OCIO configuration for linear<->sRGB conversion. I am not defending those areas by any means, but what I mean is: with the current state of the code, a change from piece-wise to pure power goes beyond the OCIO configuration.

From my understanding the AgX view transform can be done with piece-wise as well, so I would really suggest moving the piece-wise->pure power to a separate project, with its own presentation, motivation, and ensuring that it is done consistently everywhere in Blender.

Brecht's comment on this topic was mainly about naming convention, and wasn't an intent to replace sRGB with pure power in the OCIO configuration just yet (at least not, as I've mentioned above, without looking into and solving all implications).

Visions of the past! I forgot there is this implicit rule, even though is something i was directly involved into... Duuuh :)

My main concern on this topic was caused by the fact that it wasn't really clear why the change in behavior was introduced. Your reply clarified it very well.

It does seem to be a recurring topic of improving the default "clipping" behavior, and it does seem that the `Guard Rail` provides a much better solution for it. So I think it is fine to have it first in the list.

For the spectral story we'll need to change scene linear to something much wider. But that has implications outside of the spectral rendering (current Cycles, NPR renderers, color pickers, assets, etc...). While it is something we'll need to tackle sooner rather than later, I'd really prefer to have a dedicated design for it and tackle it separately. There are quite some questions to be figured out, and I wouldn't want the AgX view transform to be stalled because of them.

The reference space I can't say I am emotionally attached to. On the one hand, changing it risks introducing a regressive change; on the other hand, at some point we'd need to change it anyway.

I think it is fine to change the reference, but as a dedicated and isolated PR, for the ease of troubleshooting.

Exactly that! It is not very practical and frustrating for both sides to work on such big changes. Breaking them down into smaller incremental steps helps a lot.

I like your plan. It does seem like a plan that we'll be able to tackle much, much more easily!

I did some preliminary comments on things I think are important to keep in mind when working on these steps. Surely with some points you might agree, with others disagree. For keeping it practical I'd suggest not having a long discussion right now, and having a more focused look and re-iteration (if needed) in the individual PRs.

`nuke_rec709` and `lg10` I don't think are even exposed, so it seems that they indeed can just go. As well as `vd16.spi1d`.

The `XYZ display device` is indeed in some limbo state. We initially used it to master DCP, but even for that the current configuration is not complete, and we only used the display device because we did not have the ability to override the color space for saving. So indeed it can go.

The `Standard` view transform we can not remove due to compatibility reasons.

The `None` display was a compatibility option for the `No color management` option. I think by now it is too obsolete. Some versioning code would need to be adjusted, but other than that I think it will be good to remove this legacy thing.

Are you talking about the input color space here?

If so, is my understanding correct that we can have alias to Raw, to avoid possible compatibility breakage for .blend files which use "Raw" instead of "Non-Color"?

Un-clipping is surely important! I am just a bit concerned about it becoming a bigger project to tackle all implications in all related Blender areas (viewport, texturing, sequencer...). It would be nice to have it all consistent, but it would also be less than ideal if doing so delays AgX.

Lets have a deeper dive when the PR is ready!

It is about the reference space being `XYZ I-E`? Sounds good to me.

Generally fine. The renaming I'd be careful about and would only do if it solves ambiguity for artists.

Depending on the exact route we take we might need to commit some aliases to 3.6 branch as per the compatibility policy.

I am a bit split w.r.t. the `Linear` name. The conservative part of me says "leave it alone" but the looking-into-the-future part of me says "if we plan to introduce wider-gamut configuration in Blender we'd better give a clear name to the space, to avoid people wrongly assuming it is Scene Linear. And now is the best time to do so". The more I think about it, the more I think renaming to "Linear rec709" is the proper way forward. Although, it could be good to have a color space which always means "Scene Linear" to be used in, e.g., the color space compositor node.

But let's not go ahead too much in discussion just yet, and talk in detail in the specific PR.

The most exciting part!

The only comment i have here is that maybe shrinking the Filmic LUT can be done separately from it? I am not aware of inter-dependencies, but sounds like it will be possible to do as "simple, no expected changes on user-level, just merge it now" PR.

On a higher level there are couple of points which I am curious about when it comes to adding view transforms and display devices.

One of them is the virtual display. It would be nice to have a default option which will detect the actual monitor without user specifying an exact color space (which could even change when moving Blender window across screens). It is something we're investigating in the context of the EDR/HDR support. The way mac does it is kind of convenient when we output a color space (AFAIR it is rec2020), tell it to the OS which color space it is, and leave the final transform to the OS. Do you have experience with the virtual display option? Is it something that really solves what we believe it solves?

Another point is about shared views and `display_colorspaces`. Is it somehow possible to utilize them to make it easier to expand the AgX view transform to other color spaces (and possibly to also support the virtual display)?

P.S. Apologies for the lengthy reply :) Hopefully it all makes sense =)

@Sergey there's a new feature in Windows 11 called Auto Color Management (ACM) that may help.

Advancing the State of Color Management in Windows

It brings the color management features of Windows HDR over to SDR, as of 2022.

Thanks for the reply, that settles a lot of questions for me.

I have experimented with these but had some concerns. Mainly two points (I also mentioned them in the other topic)

The `<USE_DISPLAY_NAME>` and `display_colorspaces` features seem to work hand in hand, and seem to require the view transforms to be listed in their own `view_transforms` section, which means the view transform spaces will be hidden from the color space list UI, causing a regression where the user will no longer be allowed to use the `Convert Colorspace` node in the compositor to manually apply the view transform.

And where they include an auto conversion to the display space, there is also a concern that we should instead use the Guard Rails we crafted. AgX uses a "BT.2020 master" image and uses the `Guard Rail` view transforms dedicated for each display, to handle the `BT.2020 to other display` step. I don't think the auto conversion covers the nuance, for example, BT.2020 green actually looks like more of a mint green than the more yellowish green we are used to seeing. We included a chromaticity-linear "gamut compression" (might not be the best term to describe it) in the Guard Rails that I don't think the auto conversions cover at all.

Just to clarify, when I said XYZ display device and its `Standard` view transform space, I am referring to the dci-xyz space (which is defined in the config to be CIE XYZ I-D65 with a non-linear transfer function), not the idea of `Standard` view transforms in general. Do you mean we should keep the dci-xyz space or do you simply mean we keep the general idea of `Standard` view transforms? Because I don't see the need of keeping the DCI-XYZ space around when we are removing the XYZ display in the first place.

@baoyu Thanks for the link.

From the integration into blender point of view, is it something like using the API you've linked to to query ICC, and provide this to OCIO?

@Eary Interesting.

For the guard rail story. Imagine for a moment we have some smart logic in the OCIO GPU shader (and its CPU side friend for the file output) which does a smarter thing than simply clipping individual channels. Will doing so simplify some of the `Guard Rail` and `display_colorspace`?

Ah, I think I've missed the "its" part, sorry. If you are talking about XYZ display, its Standard, DCI, and RAW views (and all the "loose" ends after such removal) then we are in agreement.

What i meant is that sRGB Standard we need to keep for the compatibility.

It would indeed, it equals to OCIO implementing a standardized Guard Rail that we can just use. But I don't think there currently is such a thing? Otherwise why would the ACES people look at the complex CAM models (that Troy said they don't work, I am not sure on that front) to do their own "Gamut Compression" for their ACES 2.0 candidate?

If there comes such a thing in OCIO, and if it behaves just as good or even better than our implementation (probably will be better considering theirs would be shader vs ours is LUTs), it doesn't hurt to use it. But I don't think there currently is such a thing.

There is indeed no such thing in OCIO (at least not as far as I know), but it doesn't mean that we can't implement better clipping on our side. It is not something we have given a huge amount of thought; it is just a topic which popped up recently when we talked about adjacent topics with Nathan.

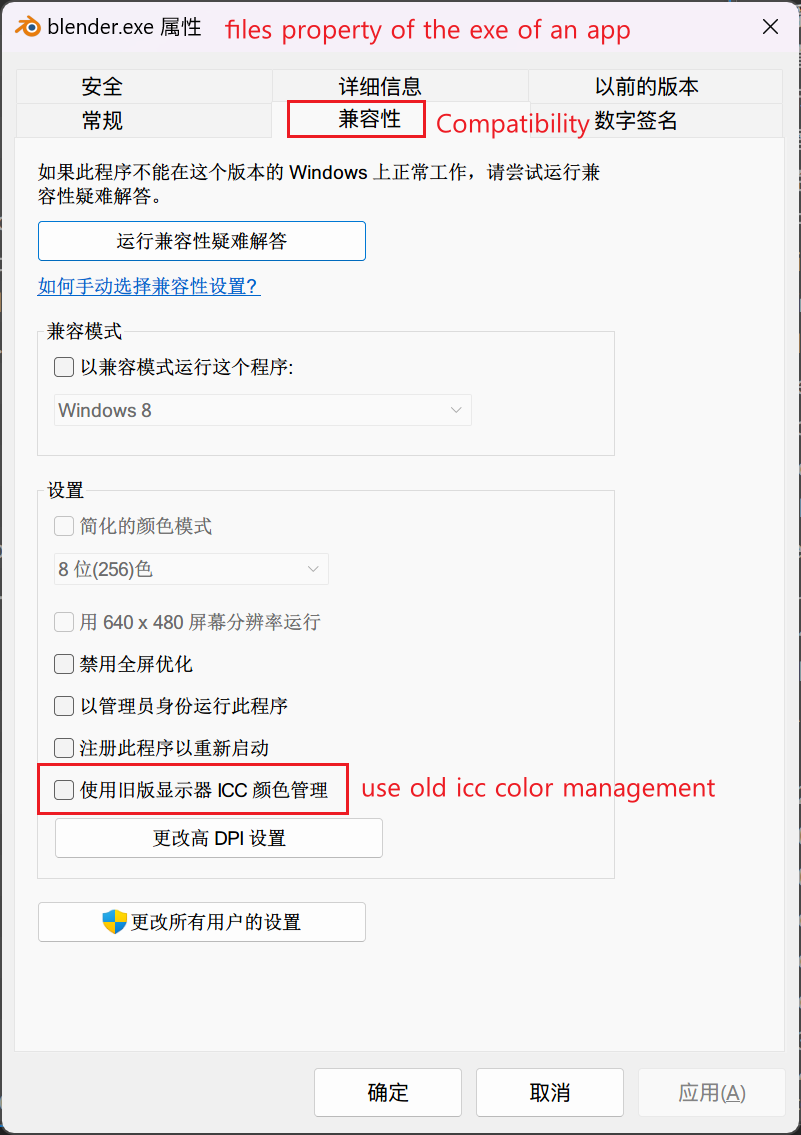

For old-fashioned apps that want to do their own color management, Windows allows the user to bypass ACM like this.

In ACM, apps that want to display an HDR signal or a wide-gamut SDR signal just use the APIs that MS provides to compose content within the app, then send the tagged result to the Desktop Window Manager (DWM). The OS will do the rest.

Here's a brief guide to integrating Windows auto color management.

AFAIK, this guide only covers APIs for UWP and DirectX apps. I'm afraid that Blender isn't a UWP app or a DX app.

However, if an app doesn't adapt to ACM, whatever content the app outputs is treated as sRGB, which is somewhat OK for now.

Interesting, but that would probably be a project of its own. What we did in our LUTs is kind of brute force: we simply calculate the data's original luminance, offset the data until there is no negative, calculate the change in luminance the offset caused, and restore the original luminance by multiplying. We also needed to do some color opponency hack to compensate for some camera-produced colorimetry having negative luminance.
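A rough Python sketch of the per-pixel offset-and-rescale idea described above. This is not the code used to bake the AgX LUTs; the function names are hypothetical, the BT.709 luminance weights are an assumption chosen only for illustration, and the color opponency handling for negative-luminance input is not reproduced (such input simply falls back to black here).

```python
# Assumption: BT.709 luminance weights, used only for illustration.
LUMA_WEIGHTS = (0.2126, 0.7152, 0.0722)


def luminance(rgb, weights=LUMA_WEIGHTS):
    return sum(w * c for w, c in zip(weights, rgb))


def lift_negatives(rgb, weights=LUMA_WEIGHTS):
    """Remove negative channels while keeping the original luminance."""
    y_before = luminance(rgb, weights)

    # Offset all channels equally until the lowest one reaches zero.
    offset = max(0.0, -min(rgb))
    lifted = [c + offset for c in rgb]

    # The offset raised the luminance; measure by how much.
    y_after = luminance(lifted, weights)

    # Placeholder for the negative-luminance case, which the original
    # approach handles with a separate hack not shown here.
    if y_before <= 0.0 or y_after <= 0.0:
        return [0.0, 0.0, 0.0]

    # Restore the original luminance by multiplying.
    scale = y_before / y_after
    return [c * scale for c in lifted]


if __name__ == "__main__":
    pixel = [1.0, -0.2, 0.1]  # out-of-gamut value with a negative channel
    fixed = lift_negatives(pixel)
    print(fixed, luminance(pixel), luminance(fixed))
```

Because the offset is added equally to all channels and the result is then scaled uniformly, the lifted color moves toward the achromatic axis while its luminance stays unchanged.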

If we get to implement this (or potentially some better method) in the future, it can work. But it will probably be its own project.

Closing this PR since we decided to do it incrementally

Step 1 PR has been posted #110559

Most of what has been discussed here so far makes sense to me, aside from some subtleties that we may want to consider at some point. I'll post about those later when I have time to collect my thoughts better.

But the one thing I straightforwardly disagree with is using a pure 2.2 power function for the sRGB display. @Eary wrote:

While that may be the case, if people are knowingly not calibrating to the standard, then they are probably doing so for a reason, knowing that some/all of their colors will still be encoded with the actual sRGB transfer function despite their display being calibrated to decode with a 2.2 power function. And if they are unknowingly failing to calibrate to the standard, then that's an issue of education.

Further, if some of the people that are knowingly not calibrating to the standard are doing so because some software they use also doesn't adhere to the standard, then that's an issue with the software. And I would prefer Blender to not become yet another software in that list.

Having said that, I am very sympathetic to people working with color-broken software. So if that is indeed one of the issues, then perhaps we should have two sRGB displays in the OCIO config:

`sRGB` and `sRGB non-standard: power 2.2`. (Better naming suggestions for the latter very welcome.) Then people that are forced to mis-calibrate their displays due to other misbehaving software can still work in Blender appropriately, but the default would still be to properly adhere to the sRGB standard.

The downside of that, of course, would be potential user confusion for users not familiar with the situation. But if that's enough of a concern to leave one of the two options out, then IMO it should be the non-standard one that's omitted.

The issue is, 2.2 is the actual standard for the display device:

The file encoding decided to specify something else to create a discrepancy between display and file encoding, for flare compensation. I think the video has already explained it clearly, so it's not an issue of education.

Except that 2.2 is the actual standard for the display device.

But we will go with piece-wise for this project, as confirmed by Sergey. Whether sRGB should be changed to 2.2 will be a discussion separated from this current one.

Yeah, that's a good approach. I still want to provide counterpoints for future reference (including for my own reference, since I've taken the time to dive into this now) for when that discussion happens. But feel free to ignore for now.

I've split this into two parts: "What does the standard specify?" and "Regardless, what should current practice be?"

What does the standard specify?

So first off, it turns out you are absolutely right that the reference display from the standard has a plain 2.2 gamma, and I was mistaken in saying that a properly calibrated display would necessarily be calibrated to the piece-wise function. However, after diving into this I believe it is a significant misreading of the standard to also say that colors sent to the display should be encoded that way, rather than with the defined CIE XYZ -> sRGB transformation. The following is a (slightly long) summary of why I think that.

The 2.2 gamma is specified in a subsection of the standard titled "Reference image display system characteristics". But that subsection is part of a larger section, "Reference conditions", that also specifies (among other things) the reference ambient lighting of the viewing environment, which affects dark values.

So the "Reference conditions" section describes a holistic viewing situation which the standard believes will produce approximately the right perceptual experience when sRGB encoded colors (encoded with the piece-wise function) are sent to the display. The reference display subsection is not supposed to be taken in isolation.

The introductory text of the standard supports that interpretation:

In other words, the specified piece-wise encoding should be sent directly to the display for (they believe) accurate color rendering under the reference conditions.

And I think this is where the confusion starts. Immediately after that, the standards document says:

One interpretation of this is to say that as ambient lighting changes, the way the color is encoded should be changed as well. So, for example, in a darker viewing environment the encoding should converge on an actual gamma of 2.2 to match the reference display.

However, this ignores that the reference display is itself part of the reference conditions they're referring to, and is thus also subject to variability. So I think a more likely and reasonable interpretation of that bit is basically, "Hey, we know that both viewing environments and actual displays are variable, so you may need to compensate for that if you want accurate color reproduction."

So that just means that if you know the specifics of your actual display and actual viewing conditions, of course you should calibrate to that. (And notably this would include things like white point adaptation as well!) So sure, if you're viewing in a dark environment and your monitor is calibrated to 2.2 gamma, you should send your colors to it with a 2.2 gamma encoding. But that's because the conditions are deviating from the reference conditions, and in turn you are sending a non-sRGB signal to compensate for that deviation.

Having said all that, I acknowledge that the standard doesn't read super clearly on these points. And the video you linked to acknowledges that as well.

But it seems unlikely that the sRGB committee intended for there to be a separate encoding for files vs displays. The norm at the time was to just directly pipe the RGB values from an image or video file straight to the display. This was partly for efficiency, but also to avoid re-quantization artifacts. And it's hard to imagine them intending to upend that norm in an era where 8-bit-per-channel color was still competing with palettized color.

In fact, the technical secretary of the body that developed the sRGB standard spoke up on the topic:

(Quote pulled from this overview, which is worth a read on its own, along with its comment section.)

Of course, as noted by Andrew Somers in the comment section, a statement from someone involved in creating the standard is not actually itself normative.

Nevertheless, I think it's a strained reading of the standard to say that the color data sent to the display is supposed to be gamma 2.2 encoded. That interpretation requires, among other things, ignoring the "Reference conditions" section as a holistic specification of viewing conditions.

Regardless, what should current practice be?

So, what the standard says is one thing, but how to achieve the best color reproduction is another. And the situation is complicated by that distinction. As far as I've been able to figure out, the current situation is as follows:

`XYZ -> sRGB -> reference conditions` pipeline.

In short, as software developers we're now in a situation where we have to make a choice between either following the standard, or breaking from the standard but giving our users better color reproduction in many (most?) modern viewing conditions.

I'm honestly not sure what the right choice is here. At the very least I think we should be transparent if we don't follow the standard, and not use plain "sRGB" as the name of the display space if it doesn't use the piece-wise encoding. (And I recognize that you did originally use a modified name, @Eary, before being asked to change it.)

One hitch that @brecht pointed out is that Blender also uses the output display spaces for writing to files. So if we don't provide an sRGB output space with the piece-wise function, there would be no way for users to write proper sRGB image/video files from Blender.

It's also worth mentioning that Blender has a gamma setting for output already. So as long as we provide a linear sRGB/Rec.709 output, users who are in-the-know can use that and set the gamma to 2.2 if they wish.

This is only true if we will never change the working space to BT.2020 or ACEScg in the future. The `Raw` or `None` view is not supposed to change primaries, it's supposed to be a straight output of working space values.

I agree this is a very complicated topic.

Or if we explicitly provide a linear sRGB/Rec.709 output display transform, which is the possibility I was suggesting. I don't necessarily think that's a good solution, but I wanted to point it out as an option just to cover our bases.

Step 2 PR has been posted #110712

Let it be known that the history of sRGB is dark and riddled with more politics than Game of Thrones. I’d be extremely cautious reading any sort of anything into anything without discussion with the primary author. So political in fact, that one participant left all of colour because of it. No one around this era should be treated as authoritative beyond the primary author, should you manage to speak with them.

The specification is clear, and we have the paper to go by. The reference display is pure 2.2, and the encoding is the two part.

It is a tremendous leap to suggest that this individual was involved in the creation of the standard. Unless one has spoken with the primary authorship, it is fair to suggest all parties are hostile.

—

No amount of tagging the image will help here. The main issue is that there’s a sort of baked-in oddball chain on the two primary platforms that flips meaning if someone characterizes their display.

By default on macOS and Windows (although I can’t confirm more recent versions):

Canonized, by the book sRGB specification handling; a two part OETF passed through a pure 2.2 power function that yields a discrepancy between the OETF and the EOTF.

In the case of a characterized system, and assuming a managed loading of an untagged file:

1.0 / 2.2 broadly.

Phew.

Step 3 PR has been posted #110913

Step 4 PR has been posted #110941

Flagging this commit as a problem.

Great patch to add in Apple EDR processing, but author appears to be conflating picture formation and encoding for HDR signals.

This will effectively be problematic as Apple’s encoding is expecting the macOS encoding which applies unique meaning to values beyond the closed domain encoded signal encapsulation of 0-100% for a given encoding.

This would require another unique transformation to encode to this specific encoding, from such encodings as BT.2100 via standard HLG or ST.2084 encodings.

https://projects.blender.org/blender/blender/commit/2367ed2ef24

Step 5 PR has been posted #111099

@troy_s This is a step towards proper HDR. We are using an extended sRGB for it, and this is how we advertise the buffers to the OS compositor (which takes care of the actual display transform). I can see how this is not ideal from the HDR content creation point of view, but this is just a first step in the project.

So to me it is not immediately obvious how the commit is wrong. If you have some concrete suggestions it would be good to know.

@troy_s that commit is just one step towards full HDR support. It changes the output from sRGB to Extended sRGB, which works fine with the Standard view transform. Someone could design a film-like view transform for Extended sRGB, and I don't think there would be anything macOS specific about that. It would work fine on other operating systems too if we add HDR support.

But HDR displays and file formats typically come with wider gamut, so it would be better to have Blender support a Rec.2100 HLG and PQ display with a view transform designed for that. That would be the second step towards full HDR support.

For the record, I agree with both of you @brecht and @Sergey; it is a great step to getting the lower level pieces in place.

I am not entirely sure how to even encode for signals here though. I believe we can specify the transfer function of the encoding on macOS. The “system” mode is not terribly useful here, because it assigns unique meaning to negatives (via scRGB yuck) and greater than 100%, and it floats with system brightness changes, etc.

Given that the basics seem to be in place, the shortest path to offering HDR to Blender folks is probably shorter than many might assume: HLG.

I have tested AgX with HLG more or less “as is” with a slightly shifted “middle” point, and the output works rather remarkably well. From the macOS side, it would require changing the buffer type from the (nightmare fuel) scRGB to an HLG encoding via the CAEDRMetadata field I believe?

Perhaps this can be experimented with as it might be a really nice little feature to tuck in.

My concern with exposing any EDR in the current manner is that literally none of the picture formation techniques are geared toward the desktop implementation for macOS. A slight tweak could make for a pretty great addition.

Changing the buffer type is unfortunately not a simple change. It requires significant changes to draw UI elements and renders into a single wide gamut buffer correctly.

Fair point. I was under the impression that the various buffers can be composited together, which would place the onus on the picture buffer only.

Even with all of the UI complexities, HLG still feels the most direct route as it is backwards compatible with standard output encodings, allowing for everything to remain “as is” and only require a matrix and the final tweak to get to HLG.

The HLG route would seem an order of magnitude more manageable than ST.2084, as again, nothing really needs to change. With ST.2084, I could see that project taking years. HLG is likely vastly closer to a workable solution?

I don't know why PQ (ST.2084) would be much more complicated than HLG. They both raise questions about how to scale the HDR content, in absolute terms and relative to the SDR UI elements. It seems a bit complicated and underspecified, though following conventions and interpreting metadata we can do something reasonable.

Regardless, HLG output would be the most important thing for Blender, and those targeting cinema with PQ will use some other software to grade and encode that anyway.

It’s the rather elegant nature of the HLG signal being designed from the ground up to be “backwards compatible” in terms of the transfer function. That is, aside from the primaries, the transfer characteristic more or less “Just Works”.

Whereas ST.2084 requires a complete re-engineering.
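For readers unfamiliar with the HLG signal, here is a minimal sketch of the HLG OETF using the constants published in BT.2100, with scene-linear input normalised to 0..1. The function name is made up for this example. The lower segment is a plain square root, close in shape to conventional SDR camera encodings, which is the basis of the "backwards compatible" description; this sketch does not cover the OOTF, primaries, or any system-level handling.

```python
import math

# Constants as published for the HLG OETF in BT.2100.
HLG_A = 0.17883277
HLG_B = 1.0 - 4.0 * HLG_A                     # 0.28466892
HLG_C = 0.5 - HLG_A * math.log(4.0 * HLG_A)   # 0.55991073


def hlg_oetf(e: float) -> float:
    """Map normalised scene-linear light (0..1) to an HLG signal value (0..1)."""
    if e <= 1.0 / 12.0:
        # Square-root segment, similar in shape to a conventional SDR gamma.
        return math.sqrt(3.0 * e)
    # Logarithmic segment that carries the highlights.
    return HLG_A * math.log(12.0 * e - HLG_B) + HLG_C


if __name__ == "__main__":
    for e in (0.0, 0.01, 1.0 / 12.0, 0.25, 0.5, 1.0):
        print(f"{e:.4f} -> {hlg_oetf(e):.4f}")
```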

I estimate out of my ass that Blender could be migrated to HDR 100% across the board without much tweaking using a sort of HLG alignment model. It is probably a rather limited effort of a few focused minds. Happy to help here if anyone feels the desire to make this a trial. The Apple patch notwithstanding, as that requires some serious thought due to the way the system level encoding works.

90% of the problem is dissolving the idea of “scene” and “display”, and thinking in terms of formed pictures versus unformed pictures. UI and such snaps together much more reasonably under such a mental model.

Anyways, I’m very optimistic that an HLG inspired approach could work well.

HLG would provide a nice “picture constancy” baseline for ST.2084 content, without much trim pass manipulation. I can say this reasonably confidently as I’ve done quite a bit of testing via the AgX mechanic on this very subject, and it passes. It is shockingly solid.

My understanding is that with sufficient metadata, converting between HLG and PQ is a straightforward operation, no re-engineering required. But anyways, this is getting off topic too much.

The last PR has been posted: #111380

Hello Zijun, the Guard Rail looks like what I'm looking for. We do product design rendering with Blender, and we have to say "Filmic sucks". We have seen that many render artists in the community also don't like Filmic: it makes the final image lose color detail and look very "cheap" compared to other render engines. We want an improved version of "Standard".

So how can I download and test the Guard Rail config? Please help.

I hope this approach would perhaps get 2 new operators which would set all the textures/images in a scene to either the AgX or the Filmic approach. It would be very useful on big scenes. Transitioning from one to the other manually would be a huge undertaking. An operator could solve this in just seconds, I believe.

@RomboutVersluijs a Python script using Blender's Python interface is probably an easier way to do this.

But just for reference, textures being set to the Agx or Filmic colour space seem to have relatively limited use cases in the material creation pipeline (one of the most common places where textures are used). So if you need to change many textures between these two colour spaces, then it may be possible you're using the colour spaces incorrectly. But I could be wrong about it.

@RomboutVersluijs here are two Python scripts for doing what I mentioned above. Attached to the bottom of this comment is a video showing how to use them.

Convert to AGX Base (Changes all "Filmic sRGB" texture nodes to "AGX Base sRGB". If the colour space of the texture node is not set to "Filmic sRGB", then it won't be converted.)

Convert to Filmic (Changes all "AGX Base" texture nodes to "Filmic sRGB". If the colour space of the texture node is not set to "AGX Base", then it won't be converted.)
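The attached scripts are not reproduced here. As a rough sketch of the same idea, and not the scripts from this comment, a conversion along these lines could look as follows. It assumes the colour space is stored on each image datablock as `colorspace_settings.name`, and that the names "Filmic sRGB" and "AgX Base sRGB" match the active OCIO config exactly; `convert_colorspace` is a hypothetical helper name.

```python
def convert_colorspace(images, source, target):
    """Switch every image whose colour space is `source` to `target`.

    `images` is any iterable of objects exposing `.name` and
    `.colorspace_settings.name` (inside Blender: `bpy.data.images`).
    Images set to any other colour space are left untouched.
    Returns the names of the images that were changed.
    """
    changed = []
    for image in images:
        settings = image.colorspace_settings
        if settings.name == source:
            settings.name = target
            changed.append(image.name)
    return changed


if __name__ == "__main__":
    try:
        import bpy  # only importable inside Blender
    except ImportError:
        bpy = None
    if bpy is not None:
        # Colour space names are assumptions; check them against your config.
        names = convert_colorspace(bpy.data.images, "Filmic sRGB", "AgX Base sRGB")
        print(f"Converted {len(names)} image(s)")
```

Swapping the last two arguments gives the reverse conversion. Run it from Blender's Text Editor or Python console on a saved copy of the file first.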

Pull request closed