MacOS: Enable support for EDR rendering #105662

Reference: blender/blender#105662

Branch: Jason-Fielder/blender:macos_EDR_support

Add HDR viewport display support through a new GPU capability, used to switch UI panel textures to high bit-depth formats and to remove the color range cap during color management, allowing display of extended color ranges above 1.0.

A new boolean viewport HDR display option is added to the Scene color management properties, allowing the feature to be enabled and disabled dynamically.

Other modifications were required to display HDR consistently, such as changing the material preview display to a floating-point format.

The first implementation is limited to Metal, as support is required in the window/swapchain code to enable the extended display colour space.

This patch is limited to allowing the display to visualise extended colors, and does not include forward-looking work to better integrate HDR into the full workflow.

I am removing myself as a reviewer, as I am not part of the Blender Foundation, nor do I have much programming knowledge, so I cannot review the code. All I can do is test the feature and report issues with it.

Here is my comment from the other pull request. I have not retested the patch so some of this information may be invalid.

Here are a few things I found from a quick test:

System Information

Operating system: macOS-13.1-arm64-arm-64bit 64 Bits

Device: 14-inch M1 Pro MacBook Pro (I was using the built-in display for testing)

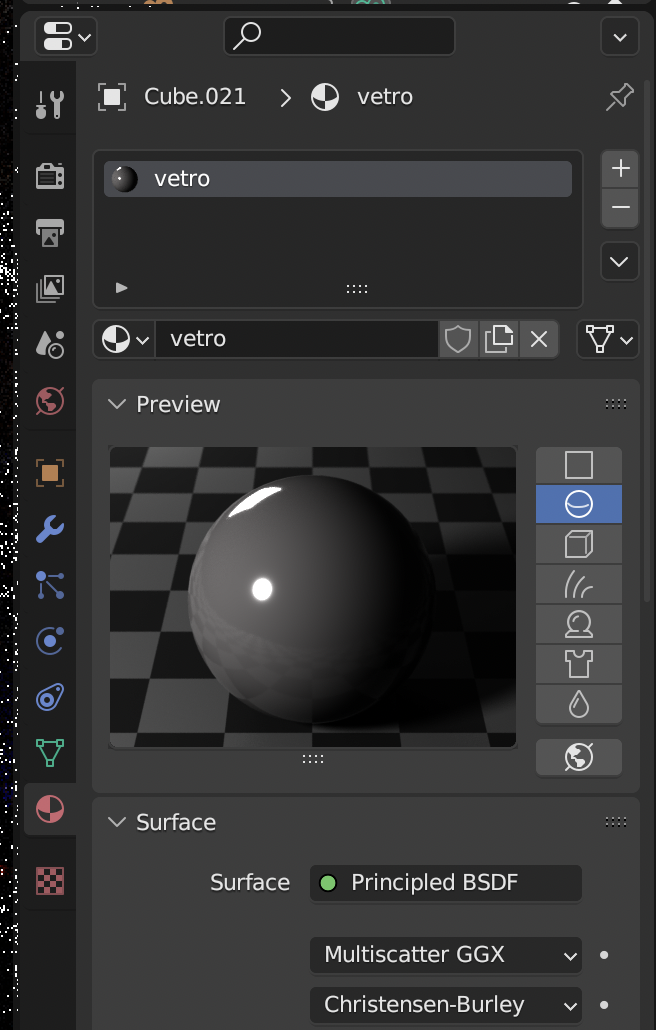

Here's a screenshot showing where the material preview panel can be found:

Yes, both limitations are expected.

The expectation is that this patch needs many tweaks, and other topics need to be solved, before it is enabled by default in Blender.

In the short term it is expected to become an experimental developer option, so that developers can start planning the modifications.

I test-ran this and found some limitations in the viewport.

I do think it is interesting to see this happening. We might first want to align with #105714. Questions like EDR vs HDR?

Biggest challenge IMO is finding a developer who can pull this off on our side.

More detail in #105714, but I think it makes sense for this to be a boolean HDR on/off option that only affects display. It could only show on systems where it is supported, and does not have to be experimental assuming the bugs can be fixed.

It's wide gamut that requires deeper changes, but we don't necessarily have to add support for both at the same time.

@ -759,6 +759,9 @@ class USERPREF_PT_viewport_quality(ViewportPanel, CenterAlignMixIn, Panel):
`col.prop(system, "use_overlay_smooth_wire", text="Overlay")`
`col.prop(system, "use_edit_mode_smooth_wire", text="Edit Mode")`
`col = layout.column(heading="Viewport Colorspace")`

Only show when `gpu.platform.backend_type_get() == 'METAL'`, or introduce `gpu.capabilities.hdr_support_get()`.

@ -5679,0 +5682,4 @@
`"Viewport High Dynamic Range",`
`"Enable high dynamic range with extended colorspace in viewport, "`
`"uncapping display brightness for rendered content.");`
`RNA_def_property_update(prop, 0, "rna_userdef_dpi_update");`

Not related to this change, but we should rename this to `rna_userdef_gpu_update` :-)

I will add a patch in main for this.

This is now a user preference, but my proposal in #105714 was to make this a scene setting. I think it depends on the .blend file if you want to see HDR or not, depending on what kind of output format you are targeting.

Thank you for the feedback @brecht and @Jeroen-Bakker!

I believe I've now addressed the feedback and the previously mentioned bugs. The PR likely needs some cleanup, though I think it may be at a sufficiently stable point where it can be taken out of WIP.

Naturally this is just the first step discussed: enabling HDR/extended display in the viewport by ensuring colour ranges are not capped, and that other components such as the final render preview and material previews also render in HDR.

To highlight a few decisions based on the feedback and discussion:

- HDR is added as a viewport display option in the scene colour management settings. All it does is ensure that all panels and render targets are allocated using 16-bit formats, and that the OCIO/display merge shaders do not cap the range at 1.0, allowing the backing display settings to display an extended range.
- Transparent UI overlays are blended against the "capped" version of the colour to avoid excessive over-brightness underneath UI pixels, which could make content hard to read.
- HDR availability is exposed via `GPU_HDR_viewport_support()`, which reports whether the selected backend currently supports HDR swap chain formats. If the window is on a display that does not support HDR and the option is toggled, the results will not look any different, as the display cannot show the extra range.
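The overlay decision can be illustrated with a minimal C++ sketch, assuming straight-alpha "over" blending; `Color3` and `composite_overlay` are hypothetical names, not the patch's shader code. Under a transparent UI pixel, the scene colour is capped at 1.0 before blending, while pixels without UI keep their full extended range.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical linear RGB triple standing in for the shader's vec3/vec4.
struct Color3 {
  float r, g, b;
};

// Blend a UI overlay (straight alpha) over the scene colour. Where the
// overlay is visible, the scene is capped at 1.0 first so HDR highlights
// cannot wash out semi-transparent UI; elsewhere the extended range is kept.
Color3 composite_overlay(const Color3 &scene, const Color3 &overlay, float overlay_alpha)
{
  assert(overlay_alpha >= 0.0f && overlay_alpha <= 1.0f);
  if (overlay_alpha == 0.0f) {
    return scene; /* No UI on this pixel: pass extended values through. */
  }
  const auto over = [overlay_alpha](float scene_c, float overlay_c) {
    const float capped = std::min(scene_c, 1.0f);
    return overlay_c * overlay_alpha + capped * (1.0f - overlay_alpha);
  };
  return {over(scene.r, overlay.r), over(scene.g, overlay.g), over(scene.b, overlay.b)};
}
```

Capping only where UI is drawn keeps overlays legible without dimming the rest of the viewport.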

All feedback is very welcome, and I'm happy to address any issues and concerns you may have.

Thanks!

Changed title from "WIP: Enable support for EDR on Mac" to "Enable support for EDR on Mac".

We should update the description of the task with those decisions, and with what is expected to work and what is not expected to work yet (previews). We can use them for the release notes as well.

Changed title from "Enable support for EDR on Mac" to "MacOS: Enable support for HDR rendering".

Changed title from "MacOS: Enable support for HDR rendering" to "MacOS: Enable support for EDR rendering".

I went over the code, but I still need to get a monitor to do actual testing. I will collaborate with someone here to test-drive it.

@ -171,2 +171,3 @@
`col.rgb = pow(col.rgb, vec3(parameters.exponent * 2.2));`
`col = clamp(col, 0.0, 1.0);`
`col = max(col, 0.0);`
`vec4 clamped_col = min(col, 1.0);`

I would still use `clamp(col, 0.0, 1.0)` here, and move the `max` inside the else-clause. I would even go further and suggest moving all clamping inside the if-else clause for clarity.

This way, the code is easier to refactor if we need to change the else clause.
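A C++ port of the structure the review asks for, as a sketch: all range limiting sits inside the if/else, the SDR branch keeps the original `clamp(col, 0.0, 1.0)`, and the HDR branch only removes negative values. `Vec4` and `limit_display_range` are hypothetical stand-ins for the GLSL code, and the flag is called `use_hdr` here rather than `use_extended`.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical stand-in for a GLSL vec4.
struct Vec4 {
  float r, g, b, a;
};

// Component-wise helpers mirroring GLSL max(v, s) and min(v, s).
static Vec4 vmax(const Vec4 &c, float lo)
{
  return {std::max(c.r, lo), std::max(c.g, lo), std::max(c.b, lo), std::max(c.a, lo)};
}

static Vec4 vmin(const Vec4 &c, float hi)
{
  return {std::min(c.r, hi), std::min(c.g, hi), std::min(c.b, hi), std::min(c.a, hi)};
}

// All clamping lives inside the if/else, so either branch can change later
// without touching the other.
Vec4 limit_display_range(const Vec4 &col, bool use_hdr)
{
  if (!use_hdr) {
    /* SDR: equivalent to clamp(col, 0.0, 1.0), as before the patch. */
    return vmin(vmax(col, 0.0f), 1.0f);
  }
  /* HDR: only remove negative values, keep everything above 1.0. */
  return vmax(col, 0.0f);
}
```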

@ -431,3 +431,3 @@
`size_y,`
`true,`
`GPU_RGBA8,`
`GPU_RGBA16F,`

`ED_screen_preview_render` is used to render an icon for a scene. In this function the result is read back to a UBYTE buffer and clamped. I am wondering if there is a reason to use a half-float offscreen buffer in this case.

@ -52,6 +52,7 @@
`bool GPU_compute_shader_support(void);`
`bool GPU_shader_storage_buffer_objects_support(void);`
`bool GPU_shader_image_load_store_support(void);`
`bool GPU_shader_draw_parameters_support(void);`
`bool GPU_HDR_support(void);`

Would use `GPU_hdr_support`, even if the style guide doesn't require it.

@ -654,2 +654,4 @@
`struct GPUTexture *GPU_offscreen_color_texture(const GPUOffScreen *offscreen);`
`/**`
`* Return the color texture of a #GPUOffScreen. Does not give ownership.`

Comment needs to be updated.

@ -37,3 +37,3 @@
`if (overlay) {`
`fragColor = clamp(fragColor, 0.0, 1.0);`
`fragColor = max(fragColor, 0.0);`

Same here as in the OCIO shader.

@ -508,6 +508,18 @@ static void rna_ColorManagedViewSettings_look_set(PointerRNA *ptr, int value)
`}`
`}`
`static void rna_ColorManagedViewSettings_use_hdr_set(PointerRNA *ptr, bool value)`

This function can be removed. RNA will generate similar code.

We only add these functions when the RNA default behavior needs to be 'overloaded'.

@ -1092,0 +1111,4 @@
`}`
`else {`
`/* else fill with black */`
`memset(rect, 0, sizeof(int) * rectx * recty);`

This should be `sizeof(float) * 4`.

@ -658,1 +661,4 @@
`bool use_viewport)`
`{`
`/* Determine desired offscreen format. */`

I see this code repeated 3 times. Better to extract it into a static function.
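Extracting the repeated logic could look roughly like the sketch below. `TextureFormat` is a hypothetical local mirror of the two `eGPUTextureFormat` values the patch switches between (`GPU_RGBA8`, `GPU_RGBA16F`), and `desired_offscreen_format` is a hypothetical name; its inputs stand for the backend capability and the scene's HDR toggle.

```cpp
#include <cassert>

// Hypothetical mirror of the two texture formats used in the patch.
enum class TextureFormat { RGBA8, RGBA16F };

// One place decides the offscreen format instead of three call sites.
static TextureFormat desired_offscreen_format(bool backend_supports_hdr, bool scene_use_hdr)
{
  return (backend_supports_hdr && scene_use_hdr) ? TextureFormat::RGBA16F : TextureFormat::RGBA8;
}

// Storage cost per pixel, to make the memory trade-off explicit.
static int bytes_per_pixel(TextureFormat format)
{
  switch (format) {
    case TextureFormat::RGBA8:
      return 4; /* 4 x 8-bit unsigned normalized. */
    case TextureFormat::RGBA16F:
      return 8; /* 4 x 16-bit half float. */
  }
  assert(!"unhandled texture format");
  return 0;
}
```

Keeping the condition in one helper also means a later change (for example, also checking the active display) only has to be made once.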

@blender-bot package

Package build started. Download here when ready.

When you say you'll have to collab with someone, I can offer some testing for you. Here are some initial notes from testing.

Good:

HDR works in EEVEE and Cycles, both in the viewport and when viewing the render results in the image editor. The Material Preview also has working HDR.

The OpenGL backend opens without crashing (in previous iterations this was an issue) and the HDR setting is hidden (as expected).

Looking at SDR images imported from disk in the image editor appears to be fine.

Neutral:

HDR only provides high-brightness benefits when using the "Standard" and "RAW" view transforms. I suspect this is expected, since the other transforms probably clamp their outputs to the 0-1 range.

"Exposure", "Gamma", "Looks" (e.g. Contrast looks) and "Colour Management Curves" work with HDR on. Sadly, it appears the colour management curves are limited to the 0-1 range. However, values outside the 0-1 range are still affected by the curve (I suspect by a simple continuation of the curve outside the 0-1 range).

Bad:

… View Transform to Filmic.

… View Transform to Standard. The Material Preview will still be using the Filmic view transform.

… Filmic view transform that doesn't seem to have any benefits from HDR.

… Standard view transform, and you will now see the HDR benefits.

… Gamma setting in the colour management settings to 0. The scene will be black.

I think we have to clarify somehow that this only affects display and not file output. For that reason I would suggest putting this setting in a Display subpanel of the Color Management panel.

Users will still probably wonder how to save files with such HDR colors, but I don't think adding support for that is a blocker.

There's still one thing I've been a bit confused about with regard to HDR, and this pull request continues to add to the confusion.

The brightness of some HDR content is impacted by the SDR brightness setting in macOS. Some content is impacted by this (E.G. Blender with HDR, HLG HDR videos imported into Davinci Resolve) while others aren't (E.G. A HDR video on YouTube). I have attached some images below to show this in Blender.

From my understanding, the SDR brightness setting shouldn't impact HDR content, hence why I'm reporting it here. But I could be wrong.

Is this "issue" (SDR brightness impacting HDR brightness) just a difference between how EDR and HDR content are handled? For example, is Blender using EDR while HDR videos on YouTube are using HDR, hence why they behave differently? If this is the cause, does EDR align with the plans the Blender Foundation has for the future of HDR on other platforms like Windows? Do other platforms have features similar to EDR? Is EDR just a stopgap until other changes are made to properly support HDR?

Note: The 100-1200 preset has an SDR brightness of 100 nits and an HDR brightness of 1200 nits.

The 500-1200 preset has an SDR brightness of 500 nits and an HDR brightness of 1200 nits.

Note 2: The images found above are from a DSLR camera with the same ISO, aperture, and shutter speed in both images, so there are no "automatic brightness" controls from the camera interfering with the images.

Note 3: The clipping seen in the 500-1200 preset is visible in real life. From my understanding, the content produced by Blender exceeds the 1200 nits the display profile specifies as its maximum, so the content just gets clipped at 1200 nits.

@Alaska see #105714 for more information.

This PR disables clamping allowing display of extended color ranges above 1.0 as explained in the description of the toggle and PR. It doesn't alter other behaviors which you're expecting.

EDR isn't our final target, but an intermediate step (possibly even temporary), currently only allowing limited workflows. The workflows you're referring to don't fit the workflows that would be enabled by this change. So perhaps the confusion comes from different expectations of what this change is about?

I'm not sure what you mean exactly by this not being the final target. We do want to additionally support displaying wider gamuts, but that's largely orthogonal to this. As far as displaying colors above 1.0 is concerned, it's not clear what the next step beyond this would be.

Maybe the Apple developers could clarify what the expected behavior is in macOS. And if this is not balancing SDR and HDR following the guidelines, what would need to be done to make it do that.

There are no concrete timelines, but similar functionality can be added for other platforms at some point.

I would not consider this a stop gap. As far as I know, if you want to display sRGB HDR content, this is the way to do it.

See https://developer.apple.com/documentation/metal/hdr_content/displaying_hdr_content_in_a_metal_layer#3335505

I am no expert on this topic and look at it from a code/API point of view, so I could be wrong or limited here as well. Depending on the choices we make concerning "Possibly, render the whole Blender UI in wide enough color space (rec2020?). The final display color space transform is then either done by the OS, or as a window-wide shader.", we might need a different surface. From a maintenance point of view, we might want to settle on a small set of surface configurations that covers all platforms (backend/GPU/OS). Anyhow, I don't think this is important to settle at this time or as part of this task.

Edit: I've removed a large section as it's basically the same as Jeroen's comment above. I did not see the comment before posting.

After reading Apple's documentation on HDR content (see Jeroen's comment above), it seems the behavior I observed is normal for EDR. It's just different from how HDR standards like HDR10 operate, and thus caused some confusion for me.

Sorry if I caused any confusion.

From this link I understand that EDR is the way to display HDR content on macOS, not an intermediate step? Not sure if there is anything in particular you wanted me to get out of that paragraph.

Edit: I guess it's about scaling brightness along with SDR content, which to me was already a given because we can't do anything but give Metal one buffer that contains both SDR and HDR content. At least regarding how the API works.

Yes, we want to draw the entire Blender UI and viewports in a wide gamut color space. When we do this, it will still be up to us how bright we make the UI vs. the viewport and images. It all goes into one buffer and things are blended together with overlays, so that relative scaling seems like it's something we have to do on the Blender side.

What I'm wondering is whether the correct behavior would be to get some SDR vs. HDR scaling factor from the operating system and use it. Not saying this has to be solved now, just would be good to know.

The brightness control of the Mac display is intended to impact colours in the 0..1 range. As the SDR range consumes a percentage of the display's actual maximum brightness, the HDR headroom (values greater than 1) is effectively the rest.

So if the user's monitor brightness setting is set to 50% of the display's capabilities, then the headroom for HDR will be the other 50%. Effectively this allows colours in the range of 0..2 to be displayed, and anything above this is squashed.

If the monitor brightness for SDR is set to 20%, however, the headroom is the other 80%.

Effectively this allows colours in the range of 0..5 to be displayed, with anything above that being squashed.
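The arithmetic can be written down as a small C++ sketch. It assumes a simple linear model (SDR white at a fixed fraction of the panel's peak brightness, clipping above the peak) rather than Apple's actual EDR implementation, and `edr_headroom`/`displayed_nits` are hypothetical names. With SDR white at 50% of peak the headroom is 2.0; at 20% it is 1 / 0.2 = 5.0. For the 500-1200 preset mentioned earlier in the thread, a value of 3.0 would ask for 1500 nits and clip at 1200, which matches the clipping reported there.

```cpp
#include <algorithm>
#include <cassert>

// Simplified linear EDR model (an assumption, not Apple's documented
// algorithm): SDR white (1.0) is shown at sdr_white_nits, and the panel can
// reach at most display_peak_nits.

// Largest component value that can still be shown without clipping.
float edr_headroom(float sdr_white_nits, float display_peak_nits)
{
  assert(sdr_white_nits > 0.0f && display_peak_nits >= sdr_white_nits);
  return display_peak_nits / sdr_white_nits;
}

// Light emitted for a linear pixel value; anything beyond the headroom
// clips at the panel peak.
float displayed_nits(float value, float sdr_white_nits, float display_peak_nits)
{
  assert(value >= 0.0f);
  return std::min(value * sdr_white_nits, display_peak_nits);
}
```

Because `displayed_nits` scales with `sdr_white_nits`, raising the SDR brightness also brightens HDR values, which is the behaviour discussed in the following comments.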

The functionality this PR exposes provides the above workflow, and is aligned with what applications such as Affinity Photo do when displaying an HDR image. A possible future improvement would be to add a checkbox somewhere that allows colours that are being clamped to the maximum to be displayed as such. Affinity Photo's visualisation of this is to use black for such colours, which clearly shows where blown highlights are being clamped. Coupled with an exposure control, this is a powerful combination that allows a wider range of colour to be interpreted and understood on the display.

Thanks for the clarification. The main issue I have with this work flow is that it doesn't line up with HDR content consumption.

For example, with this pull request, Blender will show the 0-1 range within the 0 to max SDR brightness range of the display. Everything above 1 gets shown in the leftover brightness range of the monitor (as you pointed out). As you adjust the max SDR brightness, you adjust how the entire image is displayed.

With HDR content (e.g. HDR10 videos), the 0-1 range is assigned a brightness range (e.g. 0-100 nits), and adjusting the SDR brightness will have no impact on the HDR content. It will always display the 0-1 range in the 0-100 nits range of the display. (HDR10 content doesn't use 0-1, it uses something different, but I hope you get the idea.)

This may not be a concern at all, since the target of EDR may not be to master HDR content; instead it may be to provide an HDR experience to Blender users (as I believe Jeroen pointed out in a previous comment).

And of course, this isn't much of a concern now, and will instead become more important when Blender can easily output to HDR video formats, because ideally users will want the preview seen in Blender to match the file output by Blender, and it seems like EDR (in its current form) can't do that.

On the topic of matching the preview seen in Blender with the file output by Blender: this is probably work for a different pull request, but having an option to see your render in the image editor and/or viewport with the output colour management settings, instead of the general colour management settings, would be useful. This is especially true considering that view transforms like Standard need to be used to benefit from EDR/HDR, when ideally you'd want to be exporting with view transforms like Filmic.

I'm sorry, I'm basically repeating points. I'll stop now.

Internally Blender will be working with very high dynamic range data and then has to convert it to sRGB SDR in order to be able to show it on a monitor. Filmic view transform is designed to compress that high range into something that fits into limited brightness of SDR in an aesthetically pleasing way, mostly by darkening the shadows and compressing highlights. Since this curve is exactly designed for SDR, it would be less than ideal to apply it to HDR directly, as it would result in overly bright image that would lack the pop and realism in the highlights that HDR is famous for. A different tone mapping curve should be implemented for HDR instead, one which is similar to it, but a milder version of it. This is how Unreal Engine does it.

This! What Alaska is saying here is very important. It's ok for OS SDR brightness slider to affect the brightness of UI, but it should never affect the brightness of content, at least not by default. HDR is defined and standardized in nits (cd/m2) which are physical units of brightness. Those are then compressed via PQ curve for efficiency (same thing as gamma compression) then uncompressed back to nits. So if you tell a reference HDR display to show 100 nits, it should show exactly that much otherwise it's inaccurate and deviating from the standard.

It's fine for TVs, inadequate monitors, video players, etc. to deviate here for various purposes, such as attempting to tone map the image to be darker to preserve highlight details they otherwise couldn't show, but for production apps such as Blender, DaVinci Resolve, Premiere, Adobe Camera Raw, Affinity Photo, Krita, etc., accurately adhering to standards is extremely important, as it makes collaboration possible when working in teams. Any creator needs to be able to see what their audience is supposed to be seeing, in order to create without bias. I own a colorimeter in order to ensure the brightness, contrast and colors of my HDR monitor are at reference values, for the purpose of content production.

In SDR, if something is supposed to be 50% bright then a program should send 8 bit equivalent of a decimal value 0.5 to the monitor, which is 128/255.

In HDR, if something is supposed to be 50 nits, then a program should send a 10 bit equivalent of a decimal PQ value of 0.440282 to the monitor, which is 450/1023.
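The two worked numbers can be checked with a short C++ sketch of the SMPTE ST 2084 (PQ) inverse EOTF, using the constants defined in that standard. `pq_encode` and `to_code_value` are illustrative names, and full-range quantization (code values 0 to 2^n - 1) is assumed, to match the 128/255 and 450/1023 figures above.

```cpp
#include <cassert>
#include <cmath>

// SMPTE ST 2084 (PQ) inverse EOTF: absolute luminance in cd/m2 -> signal 0..1.
double pq_encode(double nits)
{
  const double m1 = 2610.0 / 16384.0;
  const double m2 = 2523.0 / 4096.0 * 128.0;
  const double c1 = 3424.0 / 4096.0;
  const double c2 = 2413.0 / 4096.0 * 32.0;
  const double c3 = 2392.0 / 4096.0 * 32.0;
  assert(nits >= 0.0);
  // PQ is defined up to 10000 nits; normalize and apply the m1 exponent.
  const double y = std::pow(std::fmin(nits, 10000.0) / 10000.0, m1);
  return std::pow((c1 + c2 * y) / (1.0 + c3 * y), m2);
}

// Quantize a normalized signal to a full-range n-bit code value.
long to_code_value(double signal, int bits)
{
  assert(signal >= 0.0 && signal <= 1.0 && bits > 0 && bits < 31);
  return std::lround(signal * ((1 << bits) - 1));
}
```

For example, `to_code_value(pq_encode(50.0), 10)` gives the 10-bit code for 50 nits, and `to_code_value(0.5, 8)` gives the 8-bit SDR code for 50% brightness.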

Anything other than that is incorrect. And it's totally fine for users to be able to choose to send multiplied signal values to their monitors, but such behaviour should never be the default, nor accidental.

In other words, if a person works in software that scales HDR brightness along with the OS SDR brightness, and cranks up their SDR brightness from 100 to 300 nits to compensate for a bright viewing environment, then they are accidentally misusing the standard in a way that will hurt their teamwork and HDR content distribution: when they send their content over, others will see it as 3 times darker than that person expected and wanted it to look. Instead, that person should have used their monitor's OSD controls to make the monitor brighter than reference to compensate for their viewing environment (and ideally shouldn't have tried to do HDR production outside of a dimly lit environment).

The intended way to produce HDR content is to work in a dimly lit environment and have monitor that displays nits specified by input signal as actual physical nits, no more, no less. This provides an anchor for a standard of brightness. HDR content should be made to feel pleasantly bright with such a setup. We want an internet where HDR content has consistent brightness levels. We don't want one piece of content to be overly dark only for the next one to flashbang us because we had to adjust our monitor brightness to compensate. And the only way to achieve this is for nits to strictly stay nits in production pipelines, and for brightness adjustments to be done in the monitor if they must be done, rather than in the production software. Sending inaccurate HDR brightness signals in software is only acceptable for content consumption software, never for production software such as Blender.

It's worth noting that these are important matters, since the way Blender implements this will matter in the grand scheme of the world transitioning to HDR. And HDR shouldn't be thought of just as a cool feature to have, as it is here to fully replace SDR, since SDR is a severely outdated standard that is literally defined against, and with respect to, the limitations and physical characteristics of the phosphors in CRT screens. It's worth allocating significant resources towards doing this right, and I can't wait for Blender HDR support to arrive on Windows.

For clarification, all of these comments are certainly incredibly useful and relevant for the long-term enablement of HDR workflows. This is something that will require precise attention and system+application level color management coordination to achieve technical HDR accuracy.

However, as discussed above, the initial scope of this patch is to take the first steps required to enable HDR content overall, which starts with allowing for the display of an extended colour range, if the connected display supports its display.

The small initial steps here are simply ensuring that the associated framebuffer formats can store the required precision for HDR content, and that the color transform shaders do not arbitrarily clamp this range anyway.

This patch also covers the opt-in support for Apple macOS platforms, as this implementation is required within window management (GHOST module), but the wider changes would also work on other platforms with a few small changes to the window/swapchain initialisation code.

Once this is in place, then I hope this can serve as a base which can be built upon for full HDR workflow support in future.

The discussion around this is great - particularly with how authoring HDR content can be done accurately within Blender. For the Mac side, there are other applications out there doing this already, and I hope precedents are being set about how to do this effectively. This includes existing experiences about how display brightness interacts with UI elements of MacOS or Apps, along with the HDR content within a sub window. Blender's UI confining itself to 0..1 is the right thing to do here. To Brecht's point, the output from Blender is just a bunch of floating point values. Part of Blender's role in HDR content authoring (ignoring exporting for now) is to output the pixels to the OS with enough resolution and description to be correctly displayed as the user wants it.

This part is where the OS, the connected display(s) and the user takes ownership to ensure their setup is doing what they expect it to be doing for their purpose. MacOS supports display presets, there are Apple and non-Apple displays created for HDR editing and colour grading, and HDR TVs themselves which can be connected to a Mac. The brightness of these panels can be configured to make 1.0 in an output buffer mean 100 nits. That calibration is outside of Blender.

This PR enables Blender to achieve the first step, which is passing the colours through to the OS with the appropriate resolution, which, when coupled with the correct hardware setup, can enable the described HDR workflow.

But of course the task is not finished nor limited to just this, and embracing more HDR tooling/awareness within Blender around colour spaces, gamuts, and the transforms between them are going to be the next steps.

@ -291,2 +291,3 @@
`const bool use_predivide,`
`const bool use_overlay)`
`const bool use_overlay,`
`const bool use_extended)`

`use_extended` -> `use_hdr` is clearer, I think, since that's the name of the option. EDR, I guess, is a macOS-specific term.

@ -76,6 +78,9 @@ class RENDER_PT_color_management(RenderButtonsPanel, Panel):
`col.prop(view, "exposure")`
`col.prop(view, "gamma")`
`if gpu.capabilities.hdr_support_get():`

It's a bit of a hack, as we can't automatically check for this in general, but I think graying it out for Filmic will help avoid some confusion:

@ -698,2 +679,2 @@
`MEM_freeN(rect_byte);`
`/*Use floating point texture for material preview if High Dynamic Range enabled. */`

In main it now always draws a float buffer, so this change can be removed.

@ -405,6 +405,7 @@ void MTLBackend::capabilities_init(MTLContext *ctx)
`GCaps.compute_shader_support = true;`
`GCaps.shader_storage_buffer_objects_support = true;`
`GCaps.shader_draw_parameters_support = true;`
`GCaps.hdr_viewport_support = true;`

Is it possible to check if the monitor supports HDR?

Thanks @brecht working through your feedback now, hopefully we can work towards getting the first prototype of this in soon.

Regarding whether it is possible to check monitor support, I believe we should be able to add a dynamic parameter to `GHOST_DisplayManager*` for this, although I'd be cautious about whether this would throw up potential issues if the setup changes after initialisation,

e.g. an HDR-compatible display is plugged in after the fact.

I believe the property `[NSScreen maximumPotentialExtendedDynamicRangeColorComponentValue]` should be able to tell you up front whether a display can support EDR/HDR: https://developer.apple.com/documentation/appkit/nsscreen/3180381-maximumpotentialextendeddynamicr

Perhaps it would make sense to add a supports_hdr to GHOST_DisplaySetting and use this data for the active display?

Does Blender currently do any context re-initialisation upon switching the window between displays?

I was thinking about this mostly for the purpose of graying out the setting in the user interface. For that there could be a `bool GHOST_HasHDRDisplay()`, returning a cached value that is updated on certain events from the operating system.

Not sure it's worth doing that as an optimization to allocate a smaller buffer when HDR is not used. We don't reinitialize the context when moving the window between displays.

Yeah, this makes sense; I will look into putting something together. In this case, perhaps it makes sense to keep the buffer allocation static using the GPU capability `GCaps.hdr_viewport_support` to demonstrate the potential, and then have `GHOST_HasHDRDisplay()` be dynamic, based on actual availability, to override the UI/feature toggle. This should mean all workflows are supported, and dynamic changes to connected displays do not affect behaviour.
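The proposed split (static allocation, dynamic UI state) can be modelled as a small C++ sketch. Every name here is hypothetical and not Blender's GHOST or GPU API; the display-change input is assumed to be the NSScreen maximum potential EDR value, which Apple documents as 1.0 on screens without EDR support.

```cpp
#include <cassert>

// Hypothetical model of the proposed split; not Blender API.
struct HDRSupport {
  // Set once at GPU backend initialization (cf. GCaps.hdr_viewport_support).
  // Drives buffer allocation and never changes at runtime.
  bool backend_supports_hdr = false;
  // Cached per-display state, refreshed when the OS reports a display
  // change (cf. the proposed GHOST_HasHDRDisplay()).
  bool display_supports_hdr = false;
};

// Hypothetical display-change handler. A maximum potential EDR value above
// 1.0 means the screen can show values brighter than SDR white.
void on_display_changed(HDRSupport &support, float max_potential_edr)
{
  assert(max_potential_edr >= 1.0f);
  support.display_supports_hdr = max_potential_edr > 1.0f;
}

// Buffers: static, independent of which display the window is on.
bool allocate_hdr_buffers(const HDRSupport &support)
{
  return support.backend_supports_hdr;
}

// UI: the toggle is only shown as active when the current display can use it.
bool hdr_toggle_active(const HDRSupport &support)
{
  return support.backend_supports_hdr && support.display_supports_hdr;
}
```

Because buffer allocation only depends on the static flag, moving the window to another display changes what the UI shows but never requires reallocating render targets.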

Yes, that seems fine.

@brecht On reflection after looking into this, I'm not sure if this makes sense to change. Similarly, I'm also unsure if greying out the HDR toggle should be done either. There are two main thoughts which jump to mind:

i.e. there are potentially multiple dimensions to consider, bit-depth and EDR, which are both means of displaying higher precision content, but not necessarily both present at one time.

Perhaps the key issue is in communicating what the actual toggle button should be. As it stands, given HDR is enabled anyway if supported by the backend, the button acts as a visualisation toggle for over-brightness.

As with other apps such as Affinity Photo, my suggestion for this initial implementation may simply be to present the switch as an "EDR" toggle on macOS ("Enable Extended Dynamic Range"), implying that an extended range is allowed, which is more in line with what is happening.

Then as the HDR workflow project as a whole evolves, and moves to other platforms, then perhaps this can be improved once ideas for how the implementation should be handled evolve.

In most of these cases, it feels the best solution is to keep the representation in the application as simple as possible, while allowing the app to output higher-precision formats and users to configure their own displays accordingly.

If we can't reliably or easily determine whether the monitor supports HDR/EDR, then I'm fine with not graying out the HDR option for that reason. It's just a bit nicer, but not that important.

I can see that for Filmic it could make a difference, but we also do dithering by default which negates that in practice. I think the fact that the button does nothing at all for Filmic is the bigger UI issue that users will struggle with (in fact developers here have gotten confused wondering why it didn't work). What do you think about adding a text label explaining this and not graying out. For example just:

I also still suggest to put the HDR option in a "Display" subpanel of "Color Management" as suggested before.

I prefer the name HDR over EDR, even if there are some side benefits with extended precision. I would rather not introduce the term EDR for Blender users.

Okay thanks for the feedback, I think it should be okay to keep the current patch status with the greying out for Filmic in this case, so that it better represents user expectations.

Greying out may still be reasonable alongside this. A note would likely be useful to highlight that not all transforms will produce a visual change, especially if they internally remap the range to between 0.0 and 1.0. I can add this note to the description to help set user expectations.
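To illustrate why some view transforms show no visual change, here is a sketch with two toy transforms. view_remap() is a simple Reinhard curve used only as a stand-in for a transform that maps [0, inf) into [0, 1); it is not Blender's Filmic or AgX. display_output() is an assumed model of the final step: clamp to [0, 1] without HDR, pass values above 1.0 through with HDR.

```cpp
#include <algorithm>
#include <cassert>

// Assumed final display step: negatives are always clamped; values above 1.0
// are only kept when HDR output is enabled.
float display_output(float v, bool use_hdr) {
  const float non_negative = std::max(v, 0.0f);
  return use_hdr ? non_negative : std::min(non_negative, 1.0f);
}

// "Standard"-like view: scene-linear values pass through, so >1.0 survives.
float view_passthrough(float scene) { return scene; }

// Stand-in for a transform that remaps [0, inf) into [0, 1). Reinhard curve,
// NOT Filmic or AgX; chosen only because its output never reaches 1.0.
float view_remap(float scene) {
  assert(scene >= 0.0f);
  return scene / (1.0f + scene);
}
```

For the remapped view, the output is identical with or without HDR because it never exceeds 1.0, which matches the behaviour described for Filmic.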

In this case, I think the last bit to do is your final suggestion here; I can add a Display sub-panel and update the PR.

This makes sense, I imagine the implementation will evolve over time as HDR workflows are implemented across the board as part of the longer-term effort.

Thanks!

@@ -1278,0 +1293,4 @@
RNA_def_property_ui_text(prop,
                         "High Dynamic Range",
                         "Enable high dynamic range with extended colorspace in viewport, "
                         "uncapping display brightness for rendered content");
Can we extend this tooltip with some more info?

Just a few changes for me but this seems to be in landing shape then.

@@ -173,0 +173,4 @@
vec4 clamped_col = min(col, 1.0);
if (!parameters.use_extended) {
  /* if we're not using an extended colour space, clamp the color 0..1 */
Comment style: Capital + fullstop.

@@ -430,3 +430,3 @@
size_y,
true,
GPU_RGBA8,
GPU_RGBA16F,
This is not needed. This function will be removed either way since it is unused.

@@ -464,9 +466,11 @@ static void gpu_viewport_draw_colormanaged(GPUViewport *viewport,
GPU_batch_program_set_imm_shader(batch);
}
else {
Blank line

@@ -1317,2 +1352,3 @@
GPUOffScreen *offscreen = GPU_offscreen_create(r_size[0], r_size[1], false, GPU_RGBA8, GPU_TEXTURE_USAGE_SHADER_READ, nullptr);
r_size[0], r_size[1], false, desired_format, GPU_TEXTURE_USAGE_SHADER_READ, nullptr);
Is reading bytes from a float texture supported? This particular function (WM_window_pixels_read_from_offscreen) will read the framebuffer. If not, change the GPU_offscreen_read_color below.

Yes, this should work in the Metal backend, as the read routine will ensure the data is read into the target color channel data format. Though I will double-check that this is not causing any side effects.
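A sketch of what reading a float (e.g. RGBA16F) surface into an 8-bit buffer conceptually involves. The clamp-scale-round conversion below is an assumption about the behaviour, not the Metal backend's actual read routine; it mainly shows that any extended-range value above 1.0 cannot survive a byte readback.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// Assumed float -> 8-bit unorm conversion: clamp to [0, 1], scale, round.
// Values above 1.0 (the extended range) all collapse to 255.
uint8_t unorm8_from_float(float v) {
  const float c = std::min(std::max(v, 0.0f), 1.0f);
  return static_cast<uint8_t>(std::lround(c * 255.0f));
}

// Converts tightly packed RGBA float pixels into RGBA bytes.
std::vector<uint8_t> read_as_bytes(const std::vector<float> &rgba_float) {
  assert(rgba_float.size() % 4 == 0);
  std::vector<uint8_t> out(rgba_float.size());
  std::transform(rgba_float.begin(), rgba_float.end(), out.begin(), unorm8_from_float);
  return out;
}
```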

@@ -74,6 +74,7 @@
#include "BIF_glutil.h"
#include "GPU_capabilities.h"
Unneeded change

Will clean up the last bits from Clement, and I also still have to address the HDR monitor availability idea from Brecht, but hopefully that should be the last of it for the initial implementation. Thanks all for your input and feedback!

Just confirming: this patch is for EDR, and EDR can be used on non-HDR displays, right? (E.g. with EDR enabled on some Apple SDR displays, decreasing the brightness of the display just decreases the real-world brightness of 1.0, allowing values greater than 1.0 to be "natively" displayed.)

So will support be for HDR displays or EDR capable displays?

This work will require an HDR display (which all EDR-capable displays are). SDR displays will simply clamp output colours to 1.0.
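The relationship discussed in this exchange can be modelled with a single headroom value: the largest component value the display can currently show, with 1.0 meaning plain SDR. On macOS this value is reported per screen via NSScreen's maximumExtendedDynamicRangeColorComponentValue, but the sketch below is plain C++ that takes it as a parameter. approx_headroom() is a simplifying assumption (panel peak over SDR reference white), not how macOS actually computes it.

```cpp
#include <algorithm>
#include <cassert>

// What ends up on screen for an extended-range value: anything above the
// current headroom is clipped. On an SDR display headroom == 1.0, which is
// the plain clamp to 1.0.
float displayed_value(float v, float headroom) {
  assert(headroom >= 1.0f);
  return std::min(std::max(v, 0.0f), headroom);
}

// Simplified model: lowering the SDR brightness makes reference white (1.0)
// cost fewer nits, leaving more of the panel's peak available above it.
float approx_headroom(float panel_peak_nits, float sdr_white_nits) {
  assert(sdr_white_nits > 0.0f);
  return std::max(1.0f, panel_peak_nits / sdr_white_nits);
}
```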

@blender-bot build

Thanks for the contribution. I've added release notes here:

https://wiki.blender.org/wiki/Reference/Release_Notes/4.0/Rendering#Color_Management

This change introduced some color shift, which I do not believe is intentional. Reported as #111260.

I would strongly encourage dropping the notion of “realism”, and focusing on the artifacts of pictures. Pictures, as @Alaska has rightfully pointed out, are unique artifacts that cover a specific closed domain range.

To flip this around to a very real example, if we go out and shoot chemical film and print it, the picture is the final printed film. We could trivially “augment”, subject to creative guidance, that picture for selling TVs using EDR / HDR.

A key point is that the colourimetry of the closed-domain measured signal of the film print is known and constant under a specific assumption of intensity. If we wanted to encode it for EDR / HDR, we’d need to know the bounded, fixed destination.

This mechanic currently floats on macOS, which can work for an OS, but as @Alaska rightfully pointed out, cannot work reasonably for authoring work.

Unreal should not be considered a baseline standard of useful here. For all intents and purposes it’s a broken mess.

Other than that, I agree that the floating macOS concept here is going to be seriously problematic to author picture formation chains for. I’m not even sure how to negotiate it because leaving the OS to clip or not clip and use some variable vendor secret sauce of “mapping” is always going to be inadequate.

Ultimately, Blender needs full control of that EDR range, and needs to know what it is in the ST.2084 case.
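To make the "absolute units" point concrete: the SMPTE ST 2084 (PQ) encoding is defined on absolute luminance in cd/m² (nits), from 0 to 10000. The sketch below implements the standard PQ inverse EOTF with the constants from ST 2084 / ITU-R BT.2100. A relative EDR value of 1.0 has no fixed PQ code value unless the nits of reference white are known.

```cpp
#include <cassert>
#include <cmath>

// SMPTE ST 2084 (PQ) inverse EOTF: absolute luminance in nits (0..10000)
// to a non-linear signal in [0, 1].
double pq_encode(double nits) {
  assert(nits >= 0.0 && nits <= 10000.0);
  const double m1 = 2610.0 / 16384.0;
  const double m2 = 2523.0 / 4096.0 * 128.0;
  const double c1 = 3424.0 / 4096.0;
  const double c2 = 2413.0 / 4096.0 * 32.0;
  const double c3 = 2392.0 / 4096.0 * 32.0;
  const double y = std::pow(nits / 10000.0, m1);
  return std::pow((c1 + c2 * y) / (1.0 + c3 * y), m2);
}
```

For example, 100 nits encodes to roughly 0.51, so where "1.0" lands in PQ depends entirely on how many nits the OS currently assigns to it.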

I am incredibly sceptical that there is a clear path forward here given that the macOS implementation floats with, as @Eagleshadow has pointed out, the display brightness.

Except this isn’t a whole picture of the surface of the problem. We are authoring pictures in a constrained situation. Imagine trying to experience a picture where the upper end is floating? The whole point of Filmic and AgX is to form an acceptable picture, and a good deal of experimentation and effort has gone into this. To suggest that some arbitrary nonsense EXR colourimetry is routed directly to the display to enable what amounts to a sales pitch misses the totality of the point of creating work. It is deferring the handling to the operating system, and utterly devoid of a century’s worth of research into what goes into a picture.

This is nonsense. Show me an application “doing this already” and I’ll show you a broken picture that no one will find acceptable.

Folks gravely overestimate things.

TL;DR:

We need to figure out how to approach this floating operating system level construct from the authorial vantage. It’s non-trivial. In the case of ST.2084, we’d need to know the absolute units, which would probably involve some system hook as I believe macOS will constantly float the values above 1.0. For HLG, I suspect a simple method of somehow determining an anchored middle grey would suffice, as the curve is designed to more or less gracefully handle the upper end, assuming the picture is previously formed.
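For contrast with PQ, the Hybrid Log-Gamma (HLG) OETF from ITU-R BT.2100 works on relative scene light in [0, 1] and needs no absolute luminance; the display applies its own system gamma. The sketch implements only that standard OETF. How a Blender picture would be anchored into it (e.g. where middle grey lands) is left open, as in the comment above.

```cpp
#include <cassert>
#include <cmath>

// ITU-R BT.2100 HLG OETF: relative scene light E in [0, 1] to signal E' in [0, 1].
// Square-root segment below 1/12, logarithmic segment above it.
double hlg_oetf(double e) {
  assert(e >= 0.0 && e <= 1.0);
  const double a = 0.17883277;
  const double b = 1.0 - 4.0 * a;                 // 0.28466892
  const double c = 0.5 - a * std::log(4.0 * a);   // ~0.55991073
  if (e <= 1.0 / 12.0) {
    return std::sqrt(3.0 * e);
  }
  return a * std::log(12.0 * e - b) + c;
}
```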

The “above 1.0” system works well for developers without an idea of encoding details or the nuances around pictures. It doesn’t work here.

@Sergey Might you be able to start a discussion topic on this? I’m happy to prepare some encodings derived from the AgX mechanic that are known to work, so that we can tackle this issue.

You might be looking for this: #105714

Also, I may be misunderstanding this, but I believe macOS EDR support in Blender is a simple "Let's give you something that takes advantage of the HDR displays to look at", not an attempt to implement the tools required for an HDR mastering workflow in Blender. But I could be wrong.

In my limited testing, DaVinci Resolve does this on macOS. Specifically, I was working with footage shot in HLG. In testing, I found a video player that would display HLG in an "HDR" way. The original video looked fine in that video player and showed HDR-like properties. After importing the video into DaVinci Resolve, changing some colour management settings, and exporting, it looked basically the same as the original video, with the same HDR-like properties. However, while in DaVinci Resolve, the preview was heavily distorted (brighter) unless the SDR brightness of the display was set to 100 nits, at which point it looked basically the same as the imported original video.

To reframe this a little, with the current support implementation for macOS, EDR is one component of it. There are two main enablements which give the user a lot of control, despite what may come across as "OS magic":

The app opts into EDR. EDR specifically is a technology that can utilise the over-brightness of the display to extend the available colour range beyond what the display would normally be able to show. It is possible to control EDR using specific APIs on macOS; however, this is only relevant for some display modes.

The app now correctly tells the OS which colorspace the data is output in, and the range is extended to allow for higher precision.
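An extended-range sRGB colorspace (on macOS, kCGColorSpaceExtendedSRGB) allows components below 0.0 and above 1.0. A common way to define such an encoding is to keep the sRGB transfer function, continue its power segment above 1.0 and mirror it for negative values; the sketch below assumes that convention rather than quoting Apple's implementation.

```cpp
#include <cassert>
#include <cmath>

// Standard sRGB OETF (linear -> encoded) for non-negative input.
double srgb_encode(double x) {
  assert(x >= 0.0);
  return x <= 0.0031308 ? 12.92 * x : 1.055 * std::pow(x, 1.0 / 2.4) - 0.055;
}

// Extended variant (assumed convention): no clipping at 1.0, and the curve is
// mirrored for negative values, so over-bright and out-of-gamut data survive.
double extended_srgb_encode(double x) {
  return std::copysign(srgb_encode(std::fabs(x)), x);
}
```

Values in [0, 1] encode exactly as plain sRGB, so SDR content is unaffected by switching to the extended colorspace.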

Even with these few changes, how that data is then displayed is highly configurable. i.e. you are able to go into the colour mode presets in macOS settings and change how the contents are actually displayed and what range they are mapped to:

The "EDR floating" mechanism would only be usable in an EDR-compatible preset, e.g. Apple XDR Display P3. Beyond this, the HDR enablement just provides the OS with additional colour data, replacing sRGB with extended sRGB. The HDR enablement patch still enables use of HDR displays, separately from EDR on Apple displays, as ultimately the colour information to enable this is there.

This topic can get incredibly murky and complex, but it feels like it could be boiled down to simply replacing the default colour space Blender renders in (e.g. Rec.2020 instead of sRGB, as was discussed). So long as the OS/display manager is aware of what colour data to expect, the transform is handled automatically.
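As an example of the kind of transform the OS would handle once it knows the source colour space, the sketch converts linear Rec.709/sRGB primaries to linear Rec.2020 primaries using the matrix published in ITU-R BT.2087 (rounded to four decimals). It applies to linear values only, before any transfer function; it is illustrative and not code from this patch.

```cpp
#include <array>
#include <cassert>

using Rgb = std::array<double, 3>;

// Linear Rec.709 -> linear Rec.2020 (both D65). Each row sums to 1.0, so
// white and greys are preserved while saturated colours move inward.
Rgb rec709_to_rec2020(const Rgb &c) {
  static const double m[3][3] = {
      {0.6274, 0.3293, 0.0433},
      {0.0691, 0.9195, 0.0114},
      {0.0164, 0.0880, 0.8956},
  };
  Rgb out{};
  for (int r = 0; r < 3; ++r) {
    for (int k = 0; k < 3; ++k) {
      out[r] += m[r][k] * c[k];
    }
  }
  return out;
}
```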

For example, a "static" HDR setup with an HDR-enabled monitor is still enabled with this feature, but as sRGB is a narrow colour space, you cannot properly leverage a full workflow just yet:

So, TL;DR: there isn't any secret sauce as such. You can still have displays with configured color profiles and static brightness; it's still up to the user to configure their display how they want.

This is not a pragmatic and useful case.

When the encoding range floats, the picture formation is handed off to the OS.

Literally no HDR codecs are floating range; they are all closed domain, 0-100%.

Which is why various “master” thresholds exist for the somewhat ridiculous ST.2084 encoding.

In the end, this patch opens up a system architecture approach, whereby 1.0 “means something” to the OS. But in terms of how pictures work, this can never be a useful mechanic, as the way pictures are engineered is to be in control of the total range, and appropriately control the colourimetry along the whole range.

In the macOS case, this becomes all but impossible; the range floats on the high side.

So now, if we engineer a picture formation, the target domain floats up and down. And the OS is then responsible for the handling of values that exceed these upper domain values. As we can see already, the handling is worse than garbage.

The only way I can see to make this work is to somehow fix that upper bound, and properly report it to the DCC. Then it is feasible to go to something like an HLG encoding, or ST.2084.
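A sketch of why a fixed, reported upper bound matters. EDR values are relative: 1.0 is whatever the OS currently uses as SDR reference white, which moves with the brightness slider. If a DCC were told both that reference white in nits and the current headroom (both are hypothetical inputs here; obtaining them is exactly the open problem), it could check whether a picture mastered for a given peak fits without OS-side clipping.

```cpp
#include <cassert>

// Absolute luminance -> relative EDR value, given the current reference white.
double nits_to_edr(double nits, double sdr_white_nits) {
  assert(sdr_white_nits > 0.0);
  return nits / sdr_white_nits;
}

// True if a picture mastered with the given peak stays within the headroom.
bool fits_in_headroom(double mastered_peak_nits, double sdr_white_nits, double headroom) {
  return nits_to_edr(mastered_peak_nits, sdr_white_nits) <= headroom;
}
```

The same 1000-nit peak maps to 10.0 with a 100-nit reference white and to 5.0 with a 200-nit one, which is the "floating" behaviour described above.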

I have already attempted to solve this problem on macOS, and I remain flummoxed. While I commend the idea of a fixed “SDR” value at 1.0, which is great for developers who don’t wish to concern themselves with the “management” aspect, the architecture becomes incredibly complex for folks that do need to concern themselves with the matters.

The only other option would be to flip to a known and fixed mode without this floating upper bound mechanic.

From @Alaska

I couldn’t agree more. HLG is a much more reasonable approach to selling TVs. It also is by far the most sound for adding this to a content system, and I’ve proposed something that amounts to an HLG based approach in another thread here.

It’s the only option that I can estimate to make sense under this specific set of contexts that isn’t too brittle, and works well enough, while also bringing enough backwards compatibility to make integration easier.

If I'm understanding correctly, @troy_s wants to make a HDR compatible view transform, but the current Blender HDR implementation is missing some things to make that work well in practice. That's true, as this PR is only a first step. It would be nice to take the following steps too, but please be aware that there is currently no concrete roadmap for this. It's definitely not going to happen for 4.0.

I wrote down some notes on what could be the next concrete steps in #105714, but again, I would not expect this to happen soon.